A Re-examination of the "Data Consumer"

What if we've had the wrong mental model of this persona and how to help these folks succeed?

New podcast episode! In it, Julia and I speak with the CEO of Clickhouse, Aaron Katz.

ClickHouse, the lightning-fast open source OLAP database, was initially released in 2016 as an open source project out of Yandex, the Russian search giant. In 2021, Aaron Katz helped form a group to spin it out of Yandex as an independent company, dedicated to the development + commercialization of the open source project.

Enjoy the issue!

- Tristan

Data Analysts FTW

All of the recent conversation about the data analyst recently has me jazzed. Let me do a quick lightning round before pivoting:

I’m extremely sympathetic to the idea that data analysts sometimes create more value than analytics engineers and should sometimes get paid more. Specifically, I think the two roles create value in different distributions. This is well-argued here and here.

I totally agree that, even though analytics engineers can theoretically “do it all,” there really are benefits to having both roles as your team grows. We typically choose to seed data teams with analytics engineers at the outset, but as teams scale you’ll need researchers as well as librarians. Some of us prefer to curate knowledge to be used by others; some of us prefer to be the ones discovering net new insights about the world. That diversity of motivation is healthy.

Time allocation matters so much, and we could be doing a lot better here.

There’s been a lot more floating around but I’ll leave it there. I want to extend the conversation from the data analyst to a maybe-slightly-unexpected place: the “data consumer”. As the recent focus on the data analyst surfaces, the technology industry often fetishizes the new and the technical. Just as we should be clear about the value that data analysts provide, I want to explore the role of this enigmatic persona who is even one step further down the analytical value chain.

Who the hell is a “data consumer” anyway?

The term “data consumer” is just slightly below “business user” on my shit list of mostly-useless terms that people in technology use constantly. It’s endemic in the data technology industry—insiders deploy it to describe people who use the data assets that are created by others. If data analysts and engineers and scientists create the analytical assets, “data consumers”…consume them.

This surfaces this strange dichotomy—data asset producers and data asset users. Sometimes this division used cynically by those with tremendous technical skill in the creation of data assets to talk down to folks who don’t possess those same technical skills. There is a common assumption that all data skills are about creating data assets.

The thing I find so strange about this attitude is that…if you stop and think about it, “consuming” data is the entire point. The right number of data pipelines, data models, notebooks, and dashboards to create is: as few as possible. And yet the industry spends so much time thinking about these activities and the people who do them, while focusing almost none-at-all on the humans at the end of the process who are actually processing the information they contain.

If it feels like I have a bit of a chip on my shoulder, that’s fair. I’ll tell you exactly why: I currently identify as a data consumer. Gasp! How decidedly un-cool. Let me tell you the brief story about how this came to pass and what it’s taught me.

Out of the Game

I have this Monday morning routine—I block 1-3 hours first thing on Monday mornings every week to do a dashboard review. Our company expectation is that every function has a small number of dashboards that are review-able every week and these get automatically shared to Slack every Monday morning at ~6am ET. My kids are off to school at 7:30 and I’m sitting down with coffee going through dashboards at 7:35.

Two years ago, when the company was ~40 people, I had made many/most of those dashboards myself. I had also built the dbt models behind them. I knew them end-to-end at the kind of level you only can when you’ve hand-crafted every part of the experience. Data-team-of-one kinda stuff. Very empowering. If I needed some kind of alteration, at any level of the stack, to pursue a business question I had in my dashboard review I would just make it on the spot.

But then we grew…a lot. We’re now just shy of 300 people. We have a growing data team led by Erica Louie. And as the job of CEO has evolved, I just don’t spend as much time in our dbt project or in our BI layer (to my great dismay). I still spend hours every Monday reviewing dashboards, but as our data models and semantic layer have shifted, I haven’t kept my mental model of them up-to-date. This is a natural evolution, but this past week was…a bit jarring.

Erica’s team has been heads down on a project over the past few months to improve the way we measure recurring revenue. We’ve switched from MRR to ARR, and we’ve made some significant improvements to scenarios that at a smaller scale felt niche but now at a larger scale come up all the time. Handling credit card failure churn gracefully. Upgrades from Team to Enterprise. Etc. In the process, all of the dbt models that I had built many years ago got summarily thrown in the dump, replaced by new-and-improved ones. Critically, the final fact table which had once been a grain of one-row-per-account-per-month moved to a grain of one-row-per-account-per-day. This was needed to handle some intra-month reporting requirements, but it makes monthly aggregations tricky.

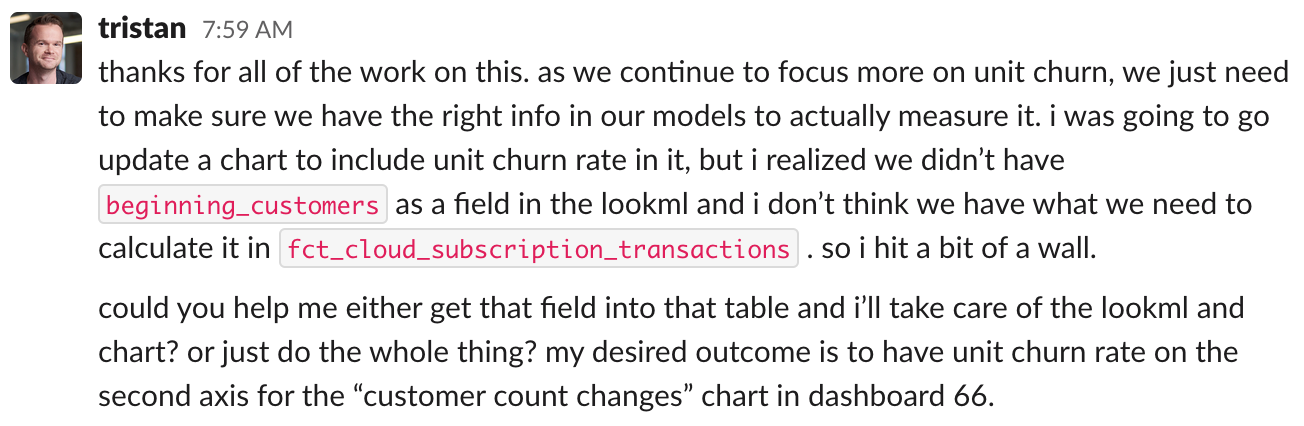

Anyway. This past Monday I was doing my standard dashboard review and found myself wanting more information on account churn. After poking around a bit, I realized it was going to take more of an investment for me to figure out how to do this the right way than made sense. So I sent this Slack message to Erica:

I kind of hated sending that message—I experienced a mix of guilt, frustration, and a bit of imposter syndrome as I hit enter. But it also made me think a lot about all of the other people who send messages very similar to this to their data team counterparts all time time. For the first time, I was on the other side of this data-asset-producer / data-asset-consumer divide and I felt a lot of empathy for the other folks who live here.

I don’t want to overstate my identity with this group for fear of alienating those who are in a very different position. I have the privilege of understanding at a very deep level how the machinery of the modern data stack works and how to inspect the assets I’m looking at. But it turns out that knowledge of the overall mechanism isn’t enough to actually write code—for that, one needs to have the loaded the mental model of your DAG in your brain.

I have never been a professional software engineer, but I imagine it’s the same way: the minute you get too far away from a fast-changing codebase, you lose your ability to make useful commits without great effort. I’ve heard technical founders and engineering managers talk about this transition as being rather disconcerting.

A Message from the Other Side

This experience triggered some reflection. All of this is fairly half-baked, but here’s where I’m at.

Reviewing a great dashboard takes real work. Benn spoke about analysts’ work being augmented with AI, and I think of dashboard review as a bit similar except it’s human intelligence. An expert human reviewing a well-designed dashboard is the interface between structured knowledge (the dashboard) and networked knowledge (the human cortex). The dashboard surfaces data streams to your cortex, and your cortex uses all of its associative power to notice patterns, create hypothesis about causality, and express emotional responses (exuberance, terror) that help guide attention. The human cortex participating in this exchange is just as much a part of the knowledge-processing as the person who created the data assets.

In a way, my distance from the underlying implementation details has been helpful, not harmful. In my weekly dashboard reviews I am no longer traversing 3-4 levels of implementation detail—I’m purely thinking at the level of the business. This focus produces better thinking. This will consistently be a strength of folks who aren’t responsible for creating the assets.

Right now the industry is obsessed with figuring out how to get data consumers to create their own assets without requiring technical knowledge, but “self-service” shouldn’t be the only only goal for empowering data consumers. Effective analytical asset design anticipates the needs of users and often shouldn’t require any new assets to be created to answer questions. Great analytical assets anticipate and answer questions as they occur in the mind of the reader. Most data practitioners in the MDS (myself included) pay too little attention to asset design, instead emphasizing the ease of self-service. We should question this.

Just as data asset creation requires real skill, being a great data consumer requires skill. Organizational context, quantitative reasoning, a ton of practice… I have coached many folks about this over the years and I am 100% confident that we don’t know how to train people to be good at this. And notably, it’s a totally orthogonal skill to data asset creation: being technically strong does not imply you are good at identifying the implications of the assets you create. If we know how to train people to become analytics engineers, how do we train them to be great data consumers? I do not think this involves taking a stat class.

What would products look like that focused on the dashboard review process? What products are superior at creating more designed consumption experiences?

One final thought. In the conversation about data analysts, it seems to be assumed that the most central job-to-be-done for this role is to have heroically-insightful, save-the-business level insights. What if, in wanting those insights to come from data analysts, we’re ignoring the much much larger set of humans who are already (or could be) well-positioned to think on this level?

Sure—let’s pay data analysts fantastically well (my 22-year-old-self will be forever grateful). But let’s not lose track of the fact that most insights generated from our data will need to come from folks who don’t sit on the data team. Let’s ask hard questions about how we more effectively augment our collective data processing capabilities with their cortexes!

What if the data analyst, like the analytics engineer, has a facilitative role to play? If the analytics engineer’s facilitative role is to surface useful datasets and make them accessible (via a variety of tactics), what if the data analyst’s facilitative role is designing data experiences that enable data consumers to draw conclusions themselves?

From elsewhere on the internet…

No editorialization required; it’s a great post. Nate: nice work. Excited for what’s brewing!!

🙌🙌

I really enjoyed this overview of forecasting @ Grubhub. Independent models per region, interesting thought about what inputs go into each model, straightforward mechanism to go from dev to production… Lots of good stuff in here. Maybe what’s the most interesting that the post doesn’t specifically go into is the criticality of forecasting. In a lot of digital native businesses, forecasting is interesting but not critical. Even in early-stage ecommerce where inventory is shipped from a single central location the forecasting demands there are fairly inexact. But Grubhub’s forecasting accuracy is clearly critical to its financial performance and its employees’ happiness (their ability to get paid). If you could magically forecast a single thing about your business, would that thing be a nice-to-have or would it be mission critical?

Gergely Orosz writes a guide on how software engineers deploy code to production that is concise & readable yet still extensive. It’s worth your time—there are things that each of our data teams need to be thinking about in this post. Where is your team today / where do you need to go next on your evolution?

Running an at-scale Airflow deployment is hard. Shopify shares their insights.

Netflix Engineering just released “A Survey of Causal Inference Applications at Netflix” after gathering scientists from across the company involved in causal inference work. Years after Judea Pearl created focus on the lack of causal inference, it is slowly filtering into practice although most of us haven’t had the opportunity to work on a project like this directly. This article reviews four projects that took creative approaches to assessing causality.

"...being a great data consumer"

This is a very interesting topic that I have only seen discussed little so far. It also comes with the twist that data consumers are often more senior in the company than data analysts and frequently have a say when it comes to performance cycles which makes it even more complicated.

We've always tried to meet new senior data consumers as they just join the company and convey the message: It's best to bring in analysts as early on as possible and help them solve a problem with you instead of specifying that you want a chart with X and Z on the axis."

This makes sense when viewed from the perspective of the data analyst but when viewed from the lens of the data consumer, sometimes what you really want and get value from is actually a clearly specified chart (as in your case with the message to Erica).

When I've seen it go really bad is when the data producer have little or no sense of the work required and what they think will be a quick data pull is in fact potentially days of work for the analyst.

This post inspired me to update an older post of mine where I try to dissect the term "data people"

https://arpitc.substack.com/p/data-people