Avoiding Traps

How two failure modes can sap the velocity of your data team.

This week Julia and I spoke to Justin Borgman, CEO @ Starburst. Starburst is responsible for Trino, a compute engine that lets you process queries using standard SQL across multiple disparate datasets. It’s a fascinating model, very different from the load-all-data-into-a-single-place model so common today.

Enjoy the issue!

- Tristan

“Like throwing more money on the fire”

Emily discusses data team scaling. I found Emily’s description of the problem to be so visceral:

Data teams are always under-resourced, but simultaneously can be seen by those who hold the purse strings as an already expensive investment. Companies hire data teams to drive growth and results, but even modern data teams are struggling to scale fast enough to support the operational cadence of their organizations. (…) Business leaders might feel as though they have already approved a substantial investment in data (...). Asking those same business leaders to allocate even more resources might feel, to them, like throwing more money on the fire (…)

We already spend so much money on our data team…and now you’re saying we need to spend more?!

With the public markets doing what they’re doing right now, many organizations of all shapes, sizes, and growth trajectories are thinking harder about costs. Six months ago the main thing public markets valued was top-line growth; now it turns out that costs matter after all!

The cost of software is in the maintenance

This is a truism in software engineering that I think we’re only now internalizing on data teams. It informs so many of today’s software engineering best practices, and I think it’s possible that data practitioners should spend a lot more time thinking about it.

Let’s divide the job of a software engineer into roughly two camps:

releasing new user-facing features (yay!)

everything else (boo!)

Everyone wants to be doing the first; no one wants to be doing the second. But it turns out that if you only do the first, you will fail. Conventional wisdom in software engineering suggests that teams should be spending roughly:

50% of their time on net new capabilities

20% on “KTLO” (keeping the lights on…scaling systems, incident response)

20% on quality improvements

10% on internal tooling

Software engineering teams are paid to build user-facing features, but in order to do this they must do the other stuff too.

In the early days of a software product, almost all time is being spent on user-facing features. There are few users and few existing capabilities, so there’s no on-call rotation, dev tooling is very off-the-shelf, no scaling work to do… This is when everything is fun and exciting, and features seem to just fall out of your fingers! But this balance shifts as you build up a set of existing capabilities and a group of users: all of a sudden you need to invest in your own internal custom dev tooling, you have real incidents to respond to, you need to invest real time and energy in scaling. None of this feels good (it detracts from your roadmap velocity!), but if you stop doing it, your application will fall over or your internal productivity will slow to a crawl.

Understanding how and when to allocate time across these buckets is hard. Pressure from the business always pushes a VP Eng to focus on new features, but it’s well-understood that doing this at the expense of the other buckets is a failure mode. As a result, one of the core jobs of the VPE is to advocate for time on these items despite pressure from basically every other leader at the company. The extent to which they are successful in having this conversation often dictates how healthy the entire engineering function is at a business.

Translating this insight to data

The above doesn’t directly translate into our context as professionals on modern data teams, but I’m sure you can see the parallels. Let’s divide our activities into the following high-level buckets:

Data platform (data movement, performance optimization, cost optimization)

Data modeling (building new and maintaining existing data models)

Enabling self-service (documentation, training, tooling, support)

Data-as-a-service (data team members conducting analysis on behalf of business stakeholders)

Data products (building productized experiences for data consumers)

Imagine all of these drawn out in some kind of pyramid, maybe like this example:

Let’s call the bottom two activities “infrastructure” and the top three activities “analysis” for our purposes here (I didn’t draw data product into this version). Note that this way of looking at the world has some distinct properties:

It is a hierarchy of needs. You can’t skip infrastructure. You have to do at least some data platform work and some data modeling to be able to actually deliver the analysis.

The bottom stuff and the top stuff create value in different ways. I don’t care how proud you are of your dbt models, they don’t create direct business value. All data modeling is performed so that data can be surfaced downstream in some way: either via self-service, data-as-a-service, or data products. The same is true of data platform work.

There is a multiplier at work. The better your infrastructure, the more efficient your analysis activities will be. Great data models make self-service far more achievable and make data-as-a-service far more efficient. But creating these efficiencies requires a real time investment, so there is a real tradeoff to be made.

The above diagram could be drawn in so many different, and equally valid, ways. Think about how you want to draw this diagram for your team. Here are some questions you’ll need to answer:

How do I want to fill my analysis bucket? Do I want to go all-in on self-service, with just a sliver of data-as-a-service? Do I want to invest in a few key data products?

How do I measure and target time investments between infrastructure and analysis activities? Should I be spending 70% of my time on infra? 40%?

Do different functional areas of the business have different answers to these questions? Maybe we make very different tradeoffs in product vs. in finance.

Anticipate the maintenance

One of the outcomes of thinking in these terms is that you are forced to realize that the minute you take ownership of a single new dataset, or of a new functional area of analysis, you are responsible for it forever. Even if your team only ever builds a single dashboard with data from your HRIS, if that dashboard is widely used then you’re on the hook to do maintenance and support on all of the underlying infrastructure: incident response, support, scaling, etc. There is a real cost to this, and it quickly saps the forward-moving velocity of your team if you’re not prepared for it.

In software engineering, it’s typical to construct teams that “own” their systems and are resourced to do so. This is baked into the two-pizza-team concept: on a team of ~8, you’ll often have ~4 people dedicated to on-call rotation, scaling, and other KTLO and ~4 people for forward-looking roadmap items. The minute you staff this team with only 4 people (because headcount is always tight!) you’ll realize that you didn’t cut your forward velocity by 50%, you in fact cut it by 100%: you are not making any forward progress at all. This is because you cannot un-staff the foundational activities that are supporting your code running in production—the minute you do you destroy the utility of everything you’ve ever created and destroy trust with your end users.
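The arithmetic here is worth seeing on its own. Below is a minimal sketch, assuming (as in the example above) that maintenance needs a fixed ~4 people regardless of team size; the function name `forward_capacity` and its parameters are hypothetical, made up purely for illustration.

```python
def forward_capacity(headcount: int, maintenance_need: int) -> int:
    """People left for roadmap work once maintenance is fully staffed.

    Assumption: maintenance (on-call, scaling, other KTLO) is a fixed cost
    that must be covered first and cannot be un-staffed.
    """
    return max(headcount - maintenance_need, 0)


# Numbers taken from the two-pizza-team example: ~8 people, ~4 on KTLO.
full_team = forward_capacity(headcount=8, maintenance_need=4)
cut_team = forward_capacity(headcount=4, maintenance_need=4)

# Fraction of forward capacity lost when headcount is halved.
velocity_loss = 1 - cut_team / full_team

print(f"Roadmap people at 8: {full_team}, at 4: {cut_team}")
print(f"Headcount cut: 50%, forward velocity cut: {velocity_loss:.0%}")
```

Because the maintenance cost is fixed rather than proportional, every person cut comes straight out of the forward-looking half of the team.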

Software engineering teams know this. In response, they’ve learned how to say no. As in: “no, we can’t take on that new surface area,” “no, we can’t get to that this year.” Of course, the good ones act like good partners and try to help stakeholders think of creative solutions in these scenarios, but they don’t overextend their teams by taking on new surface area that they can’t effectively own through its entire lifecycle.

Stuck in a trap

Returning to Emily’s original post: when I think of data teams who are “struggling to scale fast enough to support the operational cadence of their organizations” I think there are two failure modes.

Data teams who have taken on too much surface area too quickly in an effort to be good partners, and who are subsequently eaten by the maintenance burden. All of their activities are “stuck below the water line”: they’re spending their time owning existing infrastructure in production and aren’t staffed to do that as well as maintain forward progress.

Data teams who have under-invested in infrastructure and whose analytical work is therefore so inefficient that they’re never able to escape the Jira ticket queue and actually invest in the infrastructure activities that would create efficiencies.

The art of running a high-achieving data team is the art of figuring out how to avoid falling into either of these two traps, because once you fall in, it’s tremendously hard to extricate yourself.

From elsewhere on the internet…

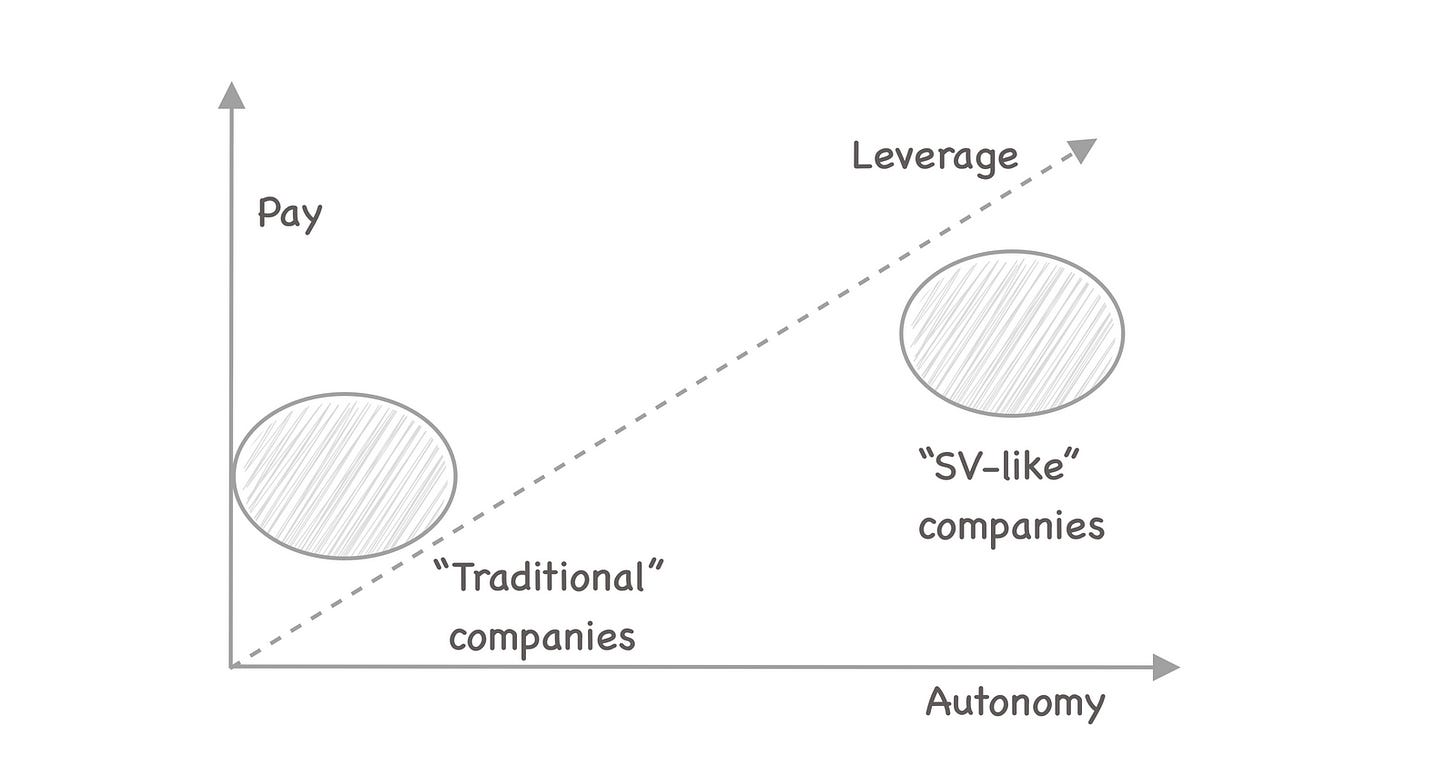

This whole Katie Bauer thread is :chefskiss:, and the original tweet links to a truly fantastic blog post as well. The big point: many of the evolutions in the software engineering (and now data) workflow are focused on scaling the ability to make an impact beyond single hero practitioners. This has implications for team construction, behavioral and management norms, tooling, and career progressions. This might be the single biggest change happening in data today. Absolute must-read. Just one great image from the original post:

David discusses caching. It’s a great overview of an important topic, and one that has an important role to play in the ecosystem we live in today. One of the things that the modern data stack largely jettisoned at the outset was caching / post-processing, instead preferring to push all compute to our friendly neighborhood cloud data platform. But as compute costs ballooned, efficiency became a focus again. Most of the major BI players now have some type of post-processing and/or caching layer, although it’s nowhere near as central to their design as prior generation “local extracts.”

As the technology ecosystem continues to mature, we should expect this topic to continue to get more attention.

I’m excited about DuckDB! Jordan Tigani, long-time product owner of BigQuery at Google, just announced that he’s founding MotherDuck to build a serverless cloud version of DuckDB. He’s being joined by longtime Google coworker Tino Tereshko. There’s a lot to love about DuckDB: its performance for analytical workloads on single-node machines is stellar. And maybe, just maybe, we don’t actually need a large majority of workloads to be distributed across multiple machines.

More cool DuckDB news: lots of SQL ergonomic improvements. Why isn’t GROUP BY ALL available everywhere?!

In other database news, Google Cloud just launched a new database: AlloyDB. One of the features listed:

Hybrid transactional and analytical processing (HTAP)

Whoa…that happened fast! After not a lot of progress over the course of many years, it seems like I’m now reading about progress in HTAP databases in a bunch of places since Drew covered them here. As far as I know, AlloyDB is the first widely available, cloud-hosted HTAP database and I’m very excited to see how it’s received. It’s Postgres under the hood, but

up to 100 times faster for analytical queries.

That is…really significant. Time to fire up the Postgres dbt adapter? This could potentially have very meaningful implications for data products.

IIRC MySQL HeatWave is also HTAP and in the cloud.

The team that has expanded their surface area too fast and can't keep up with maintenance is probably a team that made the transition from explore to expand in the Kent Beck 3X model. The good thing is that they found something like product-market fit, but then they can't fix the bottlenecks being discovered with the transition.

There is probably some important analysis to be done on the difference between standard maintenance and new bottlenecks. There is probably something about tech debt (a term that triggers me a bit as a PM) too...

I've been really interested in maintenance, especially with a new book from Stewart Brand coming out about it soon.