Build or Buy? Data driven feature backlog. Risk based thinking.

The Analytics Engineering Roundup goes full on Product Analytics! Also in this issue: benchmarking activation rates by product category.

This week’s roundup (rather unintentionally!) has a strong product analytics slant to it, and I’m here for it 🙌

In this issue:

Build or Buy: How We Developed a Platform for A/B Tests by Olga Berezovsky

Using Data to Elevate Features in Your Backlog by Greg Meyer

select 'Risk-Aware Data Management' as next_old_thing by Ashley Sherwood

What is a Good Activation Rate? by Lenny Rachitsky and Yuriy Timen

👋 Enjoy the issue!

-Anna

PS. Have you taken the Analytics Engineering Survey yet?

It’s closing in a few days, and your input will be extremely helpful for the entire community.

No PII — we promise! — and we’ll share the results with the community when we’re done. We also hope to make it an annual thing so we can benchmark the progress we make as an industry year over year. 🙏

A recent highly scientific study I read said that survey responses are better if you include cat pictures, so here’s one:

Thank mew! 👉 👈 🥺

Build or Buy: How We Developed a Platform for A/B Tests

by Olga Berezovsky

It is so important to talk about the realities of choosing to roll your own internal solution versus buying something off the shelf, and the tradeoffs that come with this decision. Olga does a great job describing a neat custom solution for A/B testing, and the many ways their consulting client benefited from this solution.

Before you get excited about whether or not this is a good idea, it’s worth mentioning that A/B testing is quite commonly built in-house because it resembles the existing structure used for feature flags in many code bases… at least on the surface.

Inevitably, two key problems come up with internal A/B testing frameworks (and most other kinds of bespoke internal solutions):

Scope creep. When you build one internal tool, you frequently have to do a bunch of extra work to integrate it with several of your other business systems:

An ancient problem in analytics merging all the sources pushed us back in delivery […] Our scope was changed from “build a new A/B testing platform” to “bring in all the data, develop dozyllian pipelines and APIs, integrate them and validate, do the cleaning and prepare the foundations, and then build a new A/B testing platform”.

The cost of maintenance often exceeds the cost of buying something off the shelf:

…the complexity of a tool takes a high cost (and expertise) to maintain and keep it running. Given that it was closely integrated into their front-end and back-end, any further migration, optimization, or tuning forced my team to step in to make sure there was no interruption to the user experience or site productivity.

Neither makes this an intrinsically bad choice for an organization, but both carry a real cost in human time that goes far beyond the initial development. The trouble is that, as humans, we’re generally not very good at estimating how long it takes us to do something, and therefore whether it’s more cost-effective to pay for something to be done for us or to do it ourselves ッ

Using Data to Elevate Features in Your Backlog

by Greg Meyer

Who doesn’t love a good color coded interactive spreadsheet? And if it helps you make better product investment decisions, all the better 😉

First, I want to compliment the author of this piece. This is well written, and the choice of example (a SaaS project management tool) is 🧑‍🍳 😘👌: everybody who has used one of these tools has thought about the list of features they miss from some other project management tool.

Second, I really appreciate the simplicity and utility of this framework. Whether or not you agree with the specific levers proposed in the post, you have to admire the cleanliness of the framework behind it (h/t John Cutler). It’s composed of scores that help address two fallacies, or mental shortcuts: 1) users overweighting the value of a solution they already have, and 2) companies overweighting the value of the solution they are selling.

Because we overweight the value of the solution we’re selling, we plot the competitive score against the use score to determine the best match. We want our most competitive offering to match the feature the prospect values the most.

I buy that.

The average user overweights the benefit of the [existing] solution they have by 3x, so providing a solution the checks all of these boxes is critical to getting them to try your feature and to continue using it.

I didn’t initially buy that, but then I read the linked HBR article. I’m going to take the liberty of quoting it here for you:

Many products fail because people irrationally overvalue the benefits of the goods they own over those they don’t possess. Executives, meanwhile, overvalue their own innovations. This leads to a serious clash. Studies show, in fact, that there is a mismatch of nine to one, or 9x, between what innovators think consumers want and what consumers truly desire.

In other words, sometimes we have to build things that aren’t very exciting because they’re fundamental to the user experience or the purchasing decision. Take the ability to “copy a project” in the toy example: it has by far the highest utility and a rather low competitive score, but when you add the scores up, this feature lands at the very top of the prioritization queue.

The author’s toy example is actually really interesting in itself! Based on the mock data, I think I as a product analyst should be recommending focus on “messaging other users” next after enabling the ability to copy a project — it has the second highest use score, and second highest competitive score. I could write an entire article about whether or not project management tools should allow you to message other users, but of course this is just an example so I shan’t get distracted. ;)
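To make the ranking logic concrete, here is a minimal sketch of the "add the two scores and sort" idea. The numbers are invented for illustration and are not the mock data from Greg's post; "gantt charts" and "custom themes" are hypothetical features I made up to fill out the list, and the `Feature` class and `prioritize` function are my own naming.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    use_score: int          # how much the prospect values the feature (invented scale)
    competitive_score: int  # how strong our offering is vs. alternatives (invented scale)

    @property
    def total(self) -> int:
        # The framework as described here simply adds the two scores.
        return self.use_score + self.competitive_score


def prioritize(features):
    """Return features ordered from highest to lowest combined score."""
    return sorted(features, key=lambda f: f.total, reverse=True)


# Illustrative numbers only: chosen so that "copy a project" has by far the
# highest use score but a rather low competitive score, and messaging is
# second on both dimensions.
backlog = [
    Feature("gantt charts", use_score=2, competitive_score=8),
    Feature("message other users", use_score=5, competitive_score=6),
    Feature("copy a project", use_score=10, competitive_score=3),
    Feature("custom themes", use_score=3, competitive_score=2),
]

ranked = prioritize(backlog)
for f in ranked:
    print(f"{f.name:<20} use={f.use_score:>2} competitive={f.competitive_score:>2} total={f.total:>2}")
```

With these made-up inputs, "copy a project" comes out first and "message other users" second, which mirrors the shape of the toy example even though the actual values differ.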

What the example does make me think about that’s missing (IMO) in this framework: the level of effort required to build the feature.

You could argue this is best left to the actual Engineering, Product and Design squad to figure out because they’re closest to the problem. And you might be right! But if you compare the #1 and #2 features in the toy example, implementing messaging has a much higher engineering lift than enabling copying a project. Which means that you aren’t actually comparing apples to apples when making a decision using this framework.

Every EPD team balances investments across a few categories of work: maintenance of existing features and platform (keep the lights on type of work), development of small incremental improvements (quick wins), vs development of radically new functionality (something that enables your business to have a new s-curve). There’s an ideal mix of those three buckets for every stage of a business, and ideally you’re evaluating your features against others in the same bucket.

But then, the best frameworks are often very simple, and layering on effort is often not simple at all.

select 'Risk-Aware Data Management' as next_old_thing

by Ashley Sherwood

“Yip!!” is roughly the sound I make whenever I get a new article from Ashley Sherwood in my inbox. Ashley always encourages me to think differently about something, and this article is no exception.

This time, it’s about risk:

Risk-based thinking asks the same questions—but inside out. Not “what good thing will happen if we do this” but “what bad thing will happen if we don’t?”

Making money is good. Not making money that we could have made is bad.

Making customers happy is good. Losing customers that we didn’t make happy enough is bad.

Here’s where we can start to tease out the unique benefit of risk-based thinking. Making happy customers happier may provide less value than making disgruntled customers ambivalent.

I’ve always thought of myself as a pretty risk-averse human. It’s sort of a function of my background: I’ve spent the majority of my life in countries that were somewhat unstable, both politically and economically. And I’ve always thought of it as a personal limitation: I’m always thinking about and planning contingencies to mitigate risk. Some amount of this is good; too much can be debilitating. The same is likely true for a business.

But Ashley has just gone and introduced a framing of risk that is very unusual for me: “risk-based thinking”, which puts risk inside a very manageable framework. And I love me some frameworks.

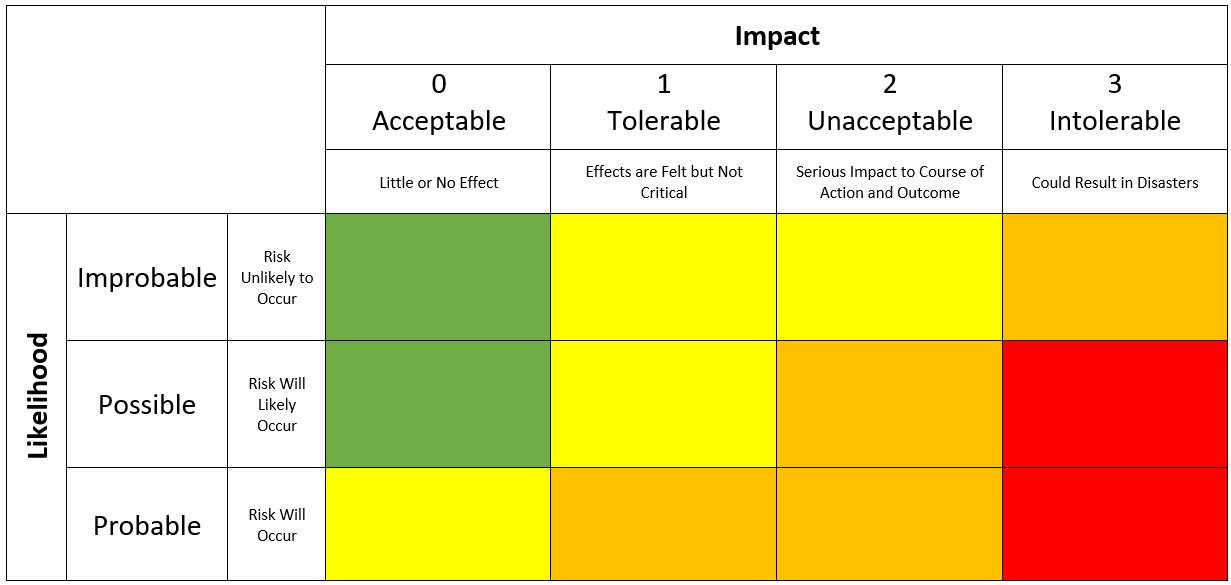

Obligatory matrix below:

Risk is a product not just of impact, but also of likelihood, and we data humans tend to overindex on impact a lot more than likelihood — which can and does lead to over-engineering.

I’m just going to quote this example, and leave you to read the rest of the article because it is excellent:

So when you have data pipelines failing due to upstream changes, it’s important to ask two questions for each source:

How often do we expect these failures to occur?

What’s the bottom-line impact when these failures occur?

Let’s say we expect a source to fail once a year when the product team releases major revisions that rename things. It takes about a week of scrambling to fix this, delaying the quarterly readout, but not significantly impacting decision quality.

We’ve also estimated that it will take about four weeks of back-and-forth to settle on a “data contract” (i.e. API interface with SLAs) for this data ingestion.

Our break-even on this time investment is four years—will we even need this data for that long? Are there other areas that would benefit from those extra three weeks of time this year?
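If you want to sanity-check the arithmetic in that example, here is a minimal sketch. It treats risk as likelihood times impact (expected weeks lost per year) and assumes, as the quoted example implicitly does, that the data contract would eliminate the failure entirely. The function names are mine, not Ashley's.

```python
def expected_annual_cost_weeks(failures_per_year: float, weeks_per_failure: float) -> float:
    """Risk as likelihood x impact: expected weeks of scrambling per year."""
    return failures_per_year * weeks_per_failure


def break_even_years(investment_weeks: float, failures_per_year: float, weeks_per_failure: float) -> float:
    """Years until the up-front investment is repaid by avoided failures.

    Assumes the investment removes the failure completely.
    """
    annual_cost = expected_annual_cost_weeks(failures_per_year, weeks_per_failure)
    if annual_cost <= 0:
        raise ValueError("no expected cost to avoid, so the investment never breaks even")
    return investment_weeks / annual_cost


# Numbers from the quoted example: one failure a year, about a week to fix,
# about four weeks to negotiate a data contract.
annual_cost = expected_annual_cost_weeks(failures_per_year=1, weeks_per_failure=1)
years = break_even_years(investment_weeks=4, failures_per_year=1, weeks_per_failure=1)
extra_weeks_this_year = 4 - annual_cost

print(f"break-even after {years:.0f} years; extra cost this year: {extra_weeks_this_year:.0f} weeks")
```

Changing either the likelihood or the impact moves the break-even point, which is the whole argument for looking at both instead of impact alone.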

What is a Good Activation Rate?

by Lenny Rachitsky and Yuriy Timen

🙌 Thank you Lenny and Yuriy for running this 💥 survey and freely sharing your findings! What a gem.

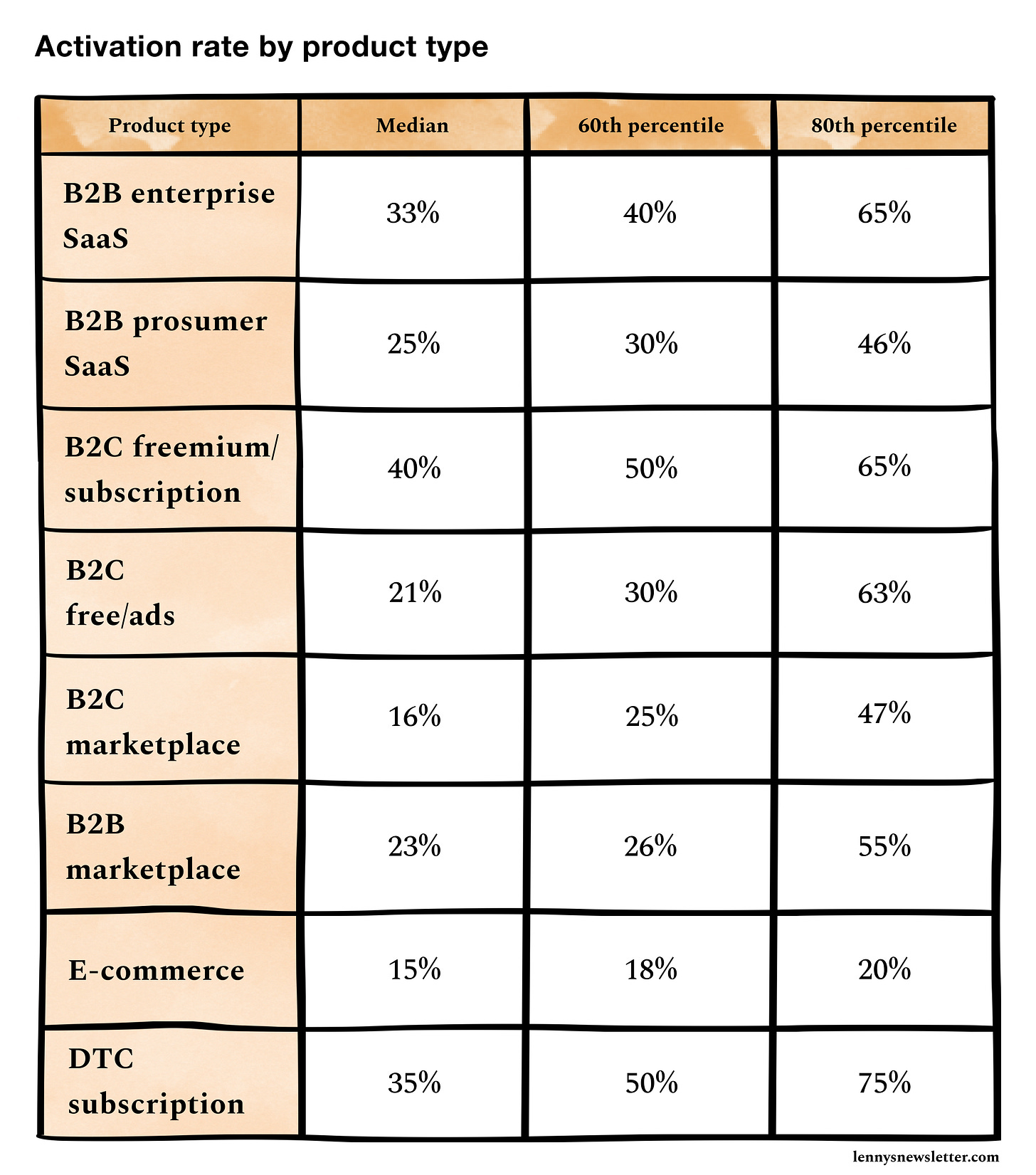

👉 As a refresher, here’s what we mean when we say ‘activation rate’:

activation rate = [users who hit your activation milestone] / [users who completed your signup flow]. Your activation milestone (often referred to as your “aha moment”) is the earliest point in your onboarding flow that, by showing your product’s value, is predictive of long-term retention. A user is typically considered activated when they reach this milestone.
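As a quick illustration of that definition, here is a minimal sketch that computes an activation rate from two sets of user IDs. The user IDs are made up, and restricting the numerator to users who also completed signup is my assumption about how you would keep the ratio between 0 and 1.

```python
def activation_rate(signed_up: set[str], hit_milestone: set[str]) -> float:
    """Share of users who completed signup and also hit the activation milestone."""
    if not signed_up:
        raise ValueError("need at least one user who completed the signup flow")
    # Only count milestone events from users who are in the signup cohort.
    activated = signed_up & hit_milestone
    return len(activated) / len(signed_up)


# Hypothetical cohort: four signups, two of whom reached the milestone.
# "zed" hit the milestone but is not in this signup cohort, so is ignored.
signed_up = {"ana", "ben", "chi", "dev"}
hit_milestone = {"ben", "dev", "zed"}

rate = activation_rate(signed_up, hit_milestone)
print(f"activation rate: {rate:.0%}")
```

In practice the hard part is picking the milestone itself, not the division.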

Growth hacking is an art form, and every percentage point increase usually comes with a lot of elbow grease. This is why the table of results below is so incredibly interesting: given the effort behind each percentage point, seeing spreads of up to 40% between the median and the best products in their category is staggering.

Things that immediately jumped out at me:

E-commerce activation rates are so much lower across the board than literally any other product category! 🤯 The spread between the median and 80th percentile is also not very wide — merely 5%. In other words, not only is it way harder to activate an e-commerce user in general, it’s also an extremely competitive space, with folks in the 80th percentile only a little ahead of the competition 🥵

On the other hand, check out the spread between the median marketplace (whether it’s B2C or B2B) and both the 60th and 80th percentiles: above average marketplaces do only slightly better at activating users than the median marketplace in either category. But the folks who are successful are very successful: the 80th percentile activation rates are nearly double those of the 60th percentile. 😗🎶 My guess is that this difference is due to the lift from a very healthy two sided marketplace.

The best B2C freemium/subscription services do about as well as the best B2C ad supported services (>60% activation rates). But the median activation rate is actually much higher for the subscription model! 🤓 It’s very cool to see the influence of perceived value in clear numbers like this. And it makes intuitive sense — all other things being equal, if you’ve already paid for a subscription, you’re more likely to use it; and freemium products generally gate actions that would make the product long term useful which (if you believe the research, and this lovely Hubspot infographic) makes you value them more than if they were available for free/with ads.

I would not have been able to predict that Direct to Consumer (DTC) subscription businesses (ever get a monthly subscription of treats for your furry coworker?) would come out with the highest activation rates among best-in-class products. It makes sense, though, because most of them offer the first box at a steep discount. That doesn’t make DTC subscription businesses the best thing to start or run, of course: price points and ACVs still matter in all these categories! But just think about that number for a second: 75% of customers who register (that’s 3 out of every 4!) go through with the subscription! 👀 👀 👀 👀 👀

Finally, prosumer vs enterprise SaaS. To me, these numbers tell a clear collaboration story: no matter how good the prosumer solution is, you’re working alone, so there’s less incentive to activate. Enterprise SaaS, OTOH, is generally aimed at enabling people to work with each other and, regardless of how well this is done, pulls other folks in once one person adopts it. This is probably why the median Enterprise SaaS business actually has a higher activation rate than a better than average prosumer SaaS business. 😲

That's all I got for you this weekend 👋 Thanks for reading!