Data "downtime"

or why I can't wait to be able to declare data incidents

In this issue, I put a feature request out into the universe. I also cover:

Why is polars all the rage? by SeattleDataGuy and Daniel Beach

How Duolingo reignited user growth by Jorge Mazal

What happened to the data warehouse? by Benn Stancil

The art of onboarding by Jerrie Kumalah

Enjoy the issue!

-Anna

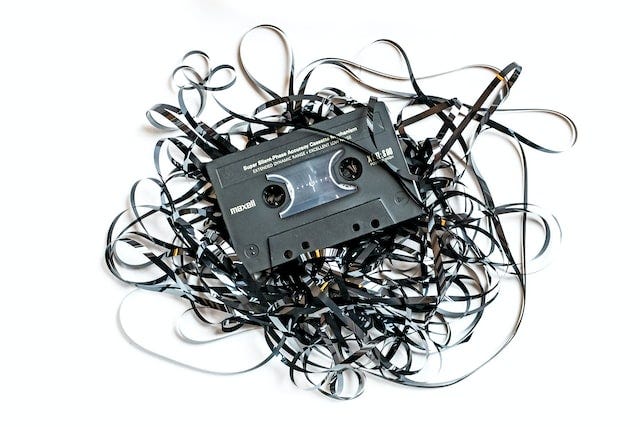

Data “Downtime”

What do you do when there’s a problem with an important dataset? Let’s say you’ve got a regression in your data lineage somewhere, and as a result, the business is undercounting monthly active users on your platform, or overstating your ARR.

Here’s what I’ve done for most of my data career:

fire off a Slack message in one or more internal channels to let whoever is online know there’s a problem and where to watch for a fix

hope that enough folks see the message and course correct on any reporting they’re working on at the time

(eventually) implement the fix, and if I’m lucky and there’s no permanent data loss involved, rewrite history to be able to restate numbers

review analysis conclusions from the affected period, then rewrite them, recreate charts, etc., for whatever I know was affected.

This post isn’t about prevention of data problems — much has been written about this, and let’s assume for today that we’re doing a decent job on testing, anomaly detection and alerting.

This post is about the inevitable point when something falls through the cracks, and an unanticipated problem blows up your carefully designed DAG.

The impact of data “downtime”

In this newsletter, we talk a lot about the parallels between software engineering and data work. Assessing and handling incidents seem like processes you can lift and shift from one discipline to the other, until you consider the impact of data “downtime” on the business.

In the majority of software incidents, functionality is actively broken, preventing an end user from performing an action. The more users affected by the problem, and the more critical the action is to the product’s value proposition, the more severe the incident is for the engineering team. Data, on the other hand, is stateful: for a given point in time, there is a right value and a wrong value. Data incident severity is therefore a function of how far the present value is from the expected value, and how widely that dataset is used across the business.
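As a rough illustration of severity depending on both error size and reach, here is a minimal Python sketch. The function name, the use of relative error, and treating “reach” as a simple count of downstream consumers (dashboards, reports or people) are assumptions made for the example, not an established formula; multiplying the two is just the simplest way to make both factors count.

```python
def data_incident_severity(observed: float, expected: float, downstream_consumers: int) -> float:
    """Hypothetical severity score: relative error scaled by reach.

    Assumes `expected` is the known-correct value and `downstream_consumers`
    counts the dashboards, reports or users that read this dataset.
    """
    if expected == 0:
        raise ValueError("expected value must be non-zero to compute relative error")
    relative_error = abs(observed - expected) / abs(expected)
    return relative_error * downstream_consumers


# Undercounting MAU by 10% in a dataset read by 40 consumers...
mau = data_incident_severity(observed=90_000, expected=100_000, downstream_consumers=40)
# ...scores far higher than overstating ARR by 1% in a dataset read by 5 consumers.
arr = data_incident_severity(observed=10_100_000, expected=10_000_000, downstream_consumers=5)
print(round(mau, 2), round(arr, 2))  # 4.0 0.05
```

A linear score like this is deliberately crude; in practice you might bucket it into severity levels the same way software teams do.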

Put another way: when your software breaks, you have to wait for a fix before you can go about your day. When the fix eventually arrives, you don’t normally have to think about undoing something you’ve already done, just catching up on lost time. When your data breaks, you’re able to go about your day unaware that there’s a problem until someone with sharp eyes points out that something seems “off”.


Incorrect data is bad for the business because it leads the business to make incorrect decisions. This matters especially in 2023, when the margin for error in a business is the lowest it has been in decades. Did you, or did you not, generate enough qualified leads to feel good about your revenue target this quarter? When the business needs to make critical decisions in an unstable environment, every number is watched extremely closely. There is no room for accidental double counting or SQL fan-outs.
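For anyone who hasn’t been bitten by one yet, here is a minimal sketch of a SQL fan-out, run through Python’s built-in `sqlite3` module so it’s self-contained. The `accounts` and `logins` tables are hypothetical: joining a one-row-per-account table to a many-rows-per-account table silently repeats each account’s ARR, which is exactly how you end up overstating it.

```python
import sqlite3

# Hypothetical schema: one row per account in `accounts`, many rows per account in `logins`.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE accounts (account_id INTEGER PRIMARY KEY, arr REAL);
    CREATE TABLE logins (account_id INTEGER, login_date TEXT);
    INSERT INTO accounts VALUES (1, 1000.0), (2, 500.0);
    INSERT INTO logins VALUES (1, '2023-01-01'), (1, '2023-01-02'), (2, '2023-01-01');
""")

# Fan-out: the join repeats account 1's ARR once per login, inflating the total.
fanned_out = conn.execute("""
    SELECT SUM(a.arr)
    FROM accounts AS a
    JOIN logins AS l ON a.account_id = l.account_id
""").fetchone()[0]

# Fix: collapse the "many" side to one row per account before joining.
correct = conn.execute("""
    SELECT SUM(a.arr)
    FROM accounts AS a
    JOIN (SELECT DISTINCT account_id FROM logins) AS l ON a.account_id = l.account_id
""").fetchone()[0]

print(fanned_out, correct)  # 2500.0 1500.0
```

Nothing errors out and both numbers look plausible, which is why this kind of mistake tends to be caught by sharp eyes rather than by the pipeline.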

If something looks “off” enough times, end users stop trusting it. And by extension, they stop trusting other things their data team produces. The consequences are far-reaching: at best, you start to get more requests from end users to verify a dataset. At worst, the datasets and the insights produced from them don’t get used at all in running the business.

Addressing data incidents without eroding trust

My hot take for 2023:

Trust in data accuracy is going to be the next biggest problem in our space after getting folks to actually use the data we produce to make business decisions.

The more datasets we produce and the more they get used, the more important it becomes to prevent folks from using critical data that is known or suspected to be incorrect. There are two ways to address data incidents that don’t erode trust in the underlying data platform:

Option #1: Intentionally letting data go stale and making it extremely obvious that data is stale through “defensive” dashboard design.

The idea is to apply aggressive testing and validation to critical datasets so that your pipeline intentionally fails to run for a given time period if a large enough error is suspected. This is paired with dashboards designed specifically around whole time periods only (e.g. a complete day, week or month).
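Here is a minimal sketch of the two halves of Option #1, assuming a daily pipeline: a validation gate that raises (and so stops the pipeline) when today’s row count drifts too far from a trailing baseline, and a helper that caps dashboard date ranges at the last complete day. The function names, the 20% threshold, and row count as the health signal are all illustrative assumptions; real checks would be tuned per dataset.

```python
from datetime import date, timedelta
from statistics import mean


class ValidationError(Exception):
    """Raised to stop the pipeline rather than publish a suspect partition."""


def validate_partition(todays_count: int, trailing_counts: list[int], max_deviation: float = 0.2) -> None:
    """Hypothetical gate: fail if today's row count is too far from the trailing mean."""
    baseline = mean(trailing_counts)
    deviation = abs(todays_count - baseline) / baseline
    if deviation > max_deviation:
        raise ValidationError(
            f"row count {todays_count} is {deviation:.0%} off baseline {baseline:.0f}"
        )


def last_complete_day(latest_loaded: date, today: date) -> date:
    """Dashboards show whole days only: never today, and never past the last loaded day."""
    return min(latest_loaded, today - timedelta(days=1))


# A 40% drop trips the gate, so the new partition is never published.
try:
    validate_partition(todays_count=6_000, trailing_counts=[10_000, 10_200, 9_800])
    published = True
except ValidationError as err:
    published = False
    print(f"Not publishing: {err}")

# The dashboard then stops at the last day that passed validation.
print(last_complete_day(latest_loaded=date(2023, 3, 9), today=date(2023, 3, 11)))  # 2023-03-09
```

The point of raising rather than logging is that stale-but-correct data is the intended failure mode here.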

Option #1 gets you to incident impact that is similar to that of a software incident: folks are blocked on making a decision, but they’re less likely to make an incorrect decision.

The problem with Option #1 is that it slows down the business until the data team is absolutely sure about its numbers. It can also impose many restrictions on end users exploring data: the only way to make sure someone doesn’t write a query that pulls a “fresher” version of data you’re not confident in is to prevent them from writing that query at all. This is a pretty big step backwards in enabling more of the business to confidently engage with data.

Option #2: Alerting the end user about a problem where the user is doing their work.

This looks like a real-time alert, popup, or some other notice that a known issue is affecting what the end user is trying to do. Imagine writing a SQL query and having your SQL editor tell you, in real time, that an incident has been declared that impacts a data source you’re using, and where to go for more information.
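Here is a minimal sketch of the check such an editor could run as you type: pull the table names out of the query and match them against open incidents. The `Incident` record, the registry, and the example URL are hypothetical, and the regex-based table extraction is a deliberate shortcut; a real editor would use a proper SQL parser and handle CTEs, quoting and aliases.

```python
import re
from dataclasses import dataclass


@dataclass
class Incident:
    """Hypothetical record in an incident registry."""
    incident_id: str
    tables: set[str]
    summary: str
    url: str


# Naive extraction: any name following FROM or JOIN. A real editor would parse the SQL.
TABLE_PATTERN = re.compile(r"\b(?:from|join)\s+([a-zA-Z_][\w.]*)", re.IGNORECASE)


def referenced_tables(sql: str) -> set[str]:
    return {name.lower() for name in TABLE_PATTERN.findall(sql)}


def incident_warnings(sql: str, open_incidents: list[Incident]) -> list[str]:
    """Return one warning per open incident that touches a table the query reads."""
    tables = referenced_tables(sql)
    found = []
    for incident in open_incidents:
        hits = sorted(tables & incident.tables)
        if hits:
            found.append(f"[{incident.incident_id}] {', '.join(hits)}: {incident.summary} (details: {incident.url})")
    return found


open_incidents = [
    Incident(
        incident_id="INC-42",
        tables={"analytics.monthly_active_users"},
        summary="MAU undercounted since 2023-03-01",
        url="https://example.com/incidents/42",
    )
]
query = """
    SELECT d.month, m.mau
    FROM analytics.monthly_active_users AS m
    JOIN analytics.dim_date AS d ON d.month = m.month
"""
editor_warnings = incident_warnings(query, open_incidents)
for warning in editor_warnings:
    print(warning)
```

Note that the warning doesn’t block the query; it just tells the user what’s known so they can decide what to do with the result.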

IMO, Option #2 is more valuable than Option #1 because it empowers your end users to decide how a known issue impacts their work, or to ask you for help. This is how you build trust in your data without slowing down the business in pursuit of perfection.

Option #2, as far as I can tell, doesn’t exist yet, but I would very much like it to.

The million-dollar question, of course, is: “Which part of the stack should this functionality live in?”

In other words, which one of us is going to build it? :) Is this the job of a data catalog? A BI tool? A semantic layer, plus integrations that pick it up from the semantic layer?

I have thoughts here, but I’m extremely curious what you all think. Does this problem resonate with you? Where do you wish it lived in your stack?

Elsewhere on the internet…

SeattleDataGuy and Daniel Beach introduce us to Polars, the new kid on the data block. It’s written in Rust (i.e. instant cool points), built on top of Apache Arrow (so you know it’s Wes McKinney-approved), and it’s the best genetic mix of Pandas and Spark you’ve always wanted but were too afraid to ask for.

If you’ve ever been addicted to Duolingo (or know someone who is!), you’ll want to read Jorge Mazal’s post about how Duolingo reignited user growth through gamification. Gamification felt like it was everywhere 7-10 years ago: from Waze gamifying your driving experience through maps to straight-up Candy Crush and Angry Birds addiction coming to your smartphone. And then all of a sudden it wasn’t there anymore because it became too much. Or maybe it got replaced by the endless scroll of TikTok, IDK. Duolingo, though, has managed to thread this needle just right with a thoughtful user experience, and there are some cool charts in this post that show the impact of the decisions they’ve made along the way. Well worth a read!

Benn Stancil writes about a missed (maybe?) opportunity to pull a Steve Jobs on the idea of a data warehouse, and I’m here for it. This isn’t a takedown post or even a subtle critique. It’s an observation of an emerging industry opportunity, framed by the latest trend of returning to data management basics (think DuckDB and operations on S3 that don’t suck). I’m intrigued and smashing “subscribe” on how it all unfolds in the coming years.

Finally, it’s lovely to see a new post on the Locally Optimistic blog after a bit of a break: Jerrie Kumalah’s “The Art of Onboarding.” One of the things I really enjoyed about this post is that it’s not actually full of “a-ha” moments and life-changing advice you’ve probably never heard before. It’s a sensible, detailed guide to approaching one of the most stressful career inflection points: starting a new job on a new team with a new culture and new ways of doing things. And in this way, it’s invaluable, because it provides a gut check and reminds you that it’s OK not to know how everything works yet. I especially liked the section that helps you deconstruct the new company culture you’re experiencing; every company culture is unique in its own way, no matter how familiar it may seem from the outside. This guide helps you avoid making assumptions, and it’s already bookmarked in my quiver of reusable managerial resources.

That’s it for this week! Thanks for playing :P

We built your Option #2 model at Uber a few years ago, and it was definitely helpful.

Here was the napkin architecture:

The query-and-dashboard tool (Querybuild/Dashbuilder) talked to the data catalog (Databook) and pulled in metadata about the tables you were working with (schema, column types, etc.), and it let you easily open Databook to see more about them.

The data quality testing harness (Trust) would publish test pass/fail statuses into Databook. So when you were searching for or reading about the tables you planned to use in your query, you could see how many tests were on them, and whether they were passing or failing. From the lineage graph you could see whether any parent tables were failing, all the way up to the original Kafka topics / MySQL snapshots.

This way you could create tests in the test harness, see test statuses in the catalog, and expose a very summarized "healthy / not healthy" indicator right in the query editor. We never got the warnings pushed into the dashboards, at least while I was on the team, but it was an obvious next step.
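The "healthy / not healthy" rollup described here amounts to an upstream walk of the lineage graph. Below is a minimal sketch of that idea; it is not Uber's implementation, and the table names, the `PARENTS` and `TEST_RESULTS` structures, and the choice to treat untested tables as passing are all assumptions for illustration.

```python
# Hypothetical lineage: table -> the parent tables it is built from.
PARENTS = {
    "kafka.trips_topic": [],
    "mysql.drivers_snapshot": [],
    "raw.trips": ["kafka.trips_topic"],
    "dim.drivers": ["mysql.drivers_snapshot"],
    "fact.driver_trips": ["raw.trips", "dim.drivers"],
}

# Hypothetical statuses published by the test harness: table -> {test_name: passed}.
TEST_RESULTS = {
    "raw.trips": {"not_null_trip_id": True},
    "dim.drivers": {"unique_driver_id": False},
    "fact.driver_trips": {"row_count_nonzero": True},
}


def unhealthy_sources(table: str, parents: dict[str, list[str]], results: dict[str, dict[str, bool]]) -> set[str]:
    """Walk upstream from `table` and collect every table (itself included) with a failing test."""
    failing, stack, visited = set(), [table], set()
    while stack:
        current = stack.pop()
        if current in visited:
            continue
        visited.add(current)
        # Assumption: a table with no tests counts as passing (all() of nothing is True).
        if not all(results.get(current, {}).values()):
            failing.add(current)
        stack.extend(parents.get(current, []))
    return failing


def health_badge(table: str, parents: dict[str, list[str]], results: dict[str, dict[str, bool]]) -> str:
    """The summarized indicator a query editor could show next to a table name."""
    failing = unhealthy_sources(table, parents, results)
    return "healthy" if not failing else f"not healthy (failing: {', '.join(sorted(failing))})"


print(health_badge("fact.driver_trips", PARENTS, TEST_RESULTS))  # not healthy (failing: dim.drivers)
print(health_badge("raw.trips", PARENTS, TEST_RESULTS))  # healthy
```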

We also cloned the existing incident management tool (Commander), but the things you need to do during incident management for a data pipeline problem turn out not to be wildly different from the usual kind, so it wasn't a great use of effort. The main leverage for "Data Commander" came from using our lineage graph + query logs to automate communications to affected downstream users, without the incident responder having to identify them manually.
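The "Data Commander" automation boils down to a downstream walk of the lineage graph, matched against query logs. Here is a minimal sketch, again with hypothetical tables, users and log format rather than anything from Uber's actual tooling:

```python
from collections import deque

# Hypothetical lineage in the downstream direction: table -> tables built from it.
CHILDREN = {
    "raw.trips": ["fact.driver_trips"],
    "fact.driver_trips": ["agg.weekly_trips"],
    "agg.weekly_trips": [],
}

# Hypothetical query log entries: (user, table they queried).
QUERY_LOG = [
    ("alice", "fact.driver_trips"),
    ("bob", "agg.weekly_trips"),
    ("carol", "dim.riders"),
]


def downstream_tables(root: str, children: dict[str, list[str]]) -> set[str]:
    """Breadth-first walk from the broken table to everything derived from it."""
    affected, queue = {root}, deque([root])
    while queue:
        for child in children.get(queue.popleft(), []):
            if child not in affected:
                affected.add(child)
                queue.append(child)
    return affected


def users_to_notify(root: str, children: dict[str, list[str]], query_log: list[tuple[str, str]]) -> set[str]:
    """Everyone who queried the broken table or anything downstream of it."""
    affected = downstream_tables(root, children)
    return {user for user, table in query_log if table in affected}


print(sorted(users_to_notify("raw.trips", CHILDREN, QUERY_LOG)))  # ['alice', 'bob']
```

In practice you would probably limit the query log to a recent window, so you notify people who actually rely on the data rather than everyone who ever touched it.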

Related to your questions about data quality warnings:

I would say your "Option #2" already kind of exists, e.g. in Tableau as data quality warnings:

https://help.tableau.com/current/online/en-us/dm_dqw.htm

I agree with you that it should live in the part of the stack that directly reaches the users. So there is no single answer; it depends on how users interact with data in each company's specific setup.

E.g. in a self-service environment based on Tableau, I think the Tableau solution is pretty good. If more users are closer to the SQL world, maybe even decentralized users, then having similar data quality warning mechanisms available in your SQL editor (like dbt Cloud) may be a nice feature, too. Similar to Tableau, I could imagine options to either set such warnings manually or have them created automatically, e.g. based on rules for how to react to certain test results.
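Warnings that are either set manually or created automatically from test results could look something like the sketch below. It is not Tableau's or dbt Cloud's API; the `CheckResult` and `WarningRule` types, the status values and the rule format are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    table: str
    test_name: str
    status: str  # assumed values: "pass", "warn" or "fail"


@dataclass(frozen=True)
class WarningRule:
    """Hypothetical rule: when `test_name` reports `status`, attach a warning of `level`."""
    test_name: str
    status: str
    level: str
    message: str


RULES = [
    WarningRule("freshness", "fail", "stale", "Data has not been refreshed on schedule."),
    WarningRule("row_count_anomaly", "fail", "suspect", "Row counts are outside the expected range."),
]

# Warnings set by hand, e.g. while a fix or restatement is in progress.
MANUAL_WARNINGS = {"finance.arr": ("under_review", "ARR restatement in progress.")}


def active_warnings(
    results: list[CheckResult],
    rules: list[WarningRule],
    manual: dict[str, tuple[str, str]],
) -> dict[str, list[tuple[str, str]]]:
    """Merge manual warnings with warnings derived from test results via rules."""
    warnings = {table: [warning] for table, warning in manual.items()}
    for result in results:
        for rule in rules:
            if (rule.test_name, rule.status) == (result.test_name, result.status):
                warnings.setdefault(result.table, []).append((rule.level, rule.message))
    return warnings


results = [
    CheckResult("analytics.mau", "freshness", "fail"),
    CheckResult("analytics.mau", "row_count_anomaly", "pass"),
    CheckResult("finance.arr", "freshness", "pass"),
]
for table, entries in active_warnings(results, RULES, MANUAL_WARNINGS).items():
    print(table, entries)
```

Keeping the rules as data rather than code means the team that owns a dataset can decide which test failures are serious enough to warn users about.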