Data Science Roundup #74: Forecasting at Scale @ Facebook, the SHA1 Attack, and more!

Last week was the single best week ever for the Roundup: 5k opens and 1,600 clicks. I’m going to stick with the format: two posts that are not-to-be-missed for that week, and 6-10 more links. Let me know if you have feedback; I’d be curious to add some anecdotal reactions to the raw data ;)

- Tristan

PS: Referred by a friend? Sign up here!

Two Posts You Can't Miss

Prophet: Forecasting at Scale @ Facebook

Major new release from Facebook Research:

Today Facebook is open sourcing Prophet, a forecasting tool available in Python and R. Forecasting is a data science task that is central to many activities within an organization. For instance, large organizations like Facebook must engage in capacity planning to efficiently allocate scarce resources and goal setting in order to measure performance relative to a baseline.

Producing high quality forecasts is not an easy problem for either machines or for most analysts. [As such,] demand for high quality forecasts often far outstrips the pace at which analysts can produce them. This observation is the motivation for our work building Prophet: we want to make it easier for experts and non-experts to make high quality forecasts that keep up with demand.

Google Online Security Blog: Announcing the first SHA1 collision

This is not a newsletter about security, but I’m struck by just how much the internet has exploded over the first successful collision attack against SHA1. It’s not news that SHA1 has weaknesses, but I’m fascinated by just how hard it was to exploit them. Here are the resources that were used in the effort:

Nine quintillion (9,223,372,036,854,775,808) SHA1 computations in total

6,500 years of CPU computation to complete the attack first phase

110 years of GPU computation to complete the second phase

…wow.
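
For a sense of scale, the numbers above can be sanity-checked in a few lines of Python. This is only a back-of-the-envelope sketch: the nine-quintillion figure happens to equal 2^63 exactly, and the hash rate used below is a hypothetical round number picked for illustration, not a measurement from the Google post.

```python
import hashlib

# Total SHA1 computations quoted in the list above.
TOTAL_COMPUTATIONS = 9_223_372_036_854_775_808

# The quoted figure is exactly 2**63.
is_power_of_two = TOTAL_COMPUTATIONS == 2 ** 63

# What a single SHA1 computation looks like with the standard library.
digest = hashlib.sha1(b"abc").hexdigest()

# Hypothetical rate (an assumption, not from the source):
# one machine doing a billion SHA1 computations per second.
ASSUMED_HASHES_PER_SECOND = 1_000_000_000
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

# Years that one such machine would need to run every computation.
years_on_one_machine = TOTAL_COMPUTATIONS / (
    ASSUMED_HASHES_PER_SECOND * SECONDS_PER_YEAR
)

print(f"2**63 computations: {is_power_of_two}")
print(f"SHA1('abc') = {digest}")
print(f"Years at the assumed rate: {years_on_one_machine:.0f}")
```

Even at that generous made-up rate, a single machine would be busy for close to three centuries.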

security.googleblog.com

This Week's Top Posts

A Brutal Teardown of an Embarrassingly Bad Chart

This ABC News chart seemed to have taken over the top of my Twitter feed so I better comment on it. Someone at ABC News tried really hard to dress up the numbers.

The amount of work required to skew these numbers is downright comical and raises the question: what (or whose) purpose is this serving?

junkcharts.typepad.com

DeepMind just published a mind blowing paper: PathNet.

Massive ensembles of neural nets + effective transfer learning = artificial general intelligence?

We can imagine that in the future, we will have giant AIs trained on thousands of tasks and able to generalize. In short, General Artificial Intelligence.

Tech jobs are already largely automated

Daniel Lemire effectively pops the bubble on Mark Cuban’s assertion that software jobs will become increasingly automated, leading to fewer jobs:

Will software write its own code? It does so all the time. The optimizing compilers and interpreters we rely upon generate code for us all the time. It is not trivial automatisation. Very few human beings would be capable of taking modern-day JavaScript and write efficient machine code to run it. I would certainly be incapable of doing such work in any reasonable manner.

Software Engineering vs Machine Learning Concepts

Not all core concepts from software engineering translate into the machine learning universe.

This is a very thoughtful post from an experienced software engineer on the mental models one needs when writing traditional code vs machine learning code. Insightful.

www.machinedlearnings.com

My Journey From Frequentist to Bayesian Statistics

I came to not believe in the possibility of infinitely many repetitions of identical experiments, as required to be envisioned in the frequentist paradigm. When I looked more thoroughly into the multiplicity problem, and sequential testing, and I looked at Bayesian solutions, I became more of a believer in the approach.

What’s so interesting here is that this is written as if the author had lost his faith in a religion. Is the frequentist model just an outdated dogma that we will all someday be a little embarrassed about having believed? One more quote:

Unlearning things is much more difficult than learning things.

Finding the most depressing Radiohead song with R, using the Spotify and Genius Lyrics APIs.

I’m not sure that this was a problem that was screaming for a solution, but the methodology and the results are definitely interesting :)

What is a Support Vector Machine, and Why Would I Use it?

Support Vector Machine has become an extremely popular algorithm. In this post I try to give a simple explanation for how it works and give a few examples using the Python Scikits libraries. All code is available on Github.

Know the difference between a Pearson and Spearman Correlation? No? This post is for you.
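
If you want the short version before clicking through, here is a minimal sketch in plain Python (not taken from the linked post). It assumes the textbook definitions: Pearson measures linear association on the raw values, and Spearman is Pearson applied to the ranks of the values. The helper names `pearson`, `ranks`, and `spearman` are made up for this illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation: strength of the linear relationship."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j to cover the run of values tied with position i.
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman correlation: Pearson computed on the ranks."""
    return pearson(ranks(xs), ranks(ys))


# A relationship that is perfectly monotonic but not linear.
xs = [1, 2, 3, 4, 5]
ys = [x ** 3 for x in xs]

pearson_r = pearson(xs, ys)
spearman_r = spearman(xs, ys)
print(f"Pearson:  {pearson_r:.3f}")
print(f"Spearman: {spearman_r:.3f}")
```

On this toy data Spearman comes out at 1.0 because the ordering of `ys` matches the ordering of `xs` exactly, while Pearson lands a bit below 1 because the curve is not a straight line.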

Data viz of the week

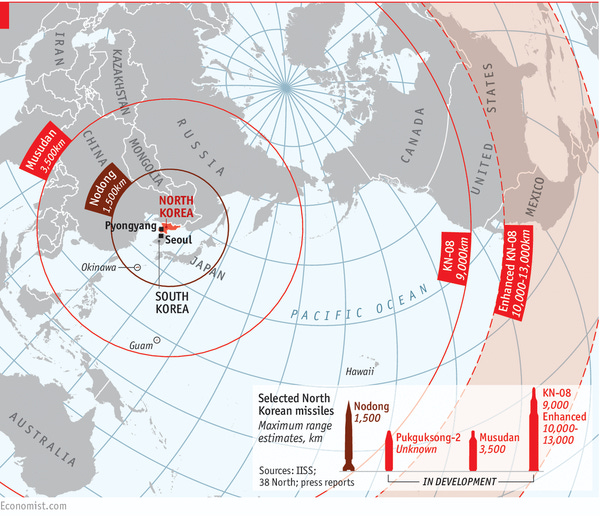

"Where can missiles from N. Korea reach?" Such a good answer.

Thanks to our sponsors!

Fishtown Analytics: Analytics Consulting for Growth

Fishtown Analytics works with venture-funded startups to implement Redshift, Snowflake, Mode Analytics, and Looker. Want advanced analytics without needing to hire an entire data team? Let’s chat.

Stitch: Simple, Powerful ETL Built for Developers

Developers shouldn’t have to write ETL scripts. Consolidate your data in minutes. No API maintenance, scripting, cron jobs, or JSON wrangling required.

The internet's most useful data science articles. Curated with ❤️ by Tristan Handy.

915 Spring Garden St., Suite 500, Philadelphia, PA 19123