Data Science Roundup #87: Improving your Productivity, AutoML, and the Growth of Python

Smarter workflows = more productive data scientists! Or, simply automate the process of model-building with AutoML. Enjoy the issue, and for those of you in the US, I hope you have a relaxing holiday weekend!

- Tristan

❤️ Want to support us? Forward this email to three friends!

🚀 Forwarded this from a friend? Sign up to the Data Science Roundup here.

Two Posts You Can't Miss

How to Improve Data Scientist Productivity

I spend a lot of time thinking about data pipelines. One of my main gripes about the leading tools is how heavyweight they are, leading to slow iteration cycles and a lack of experimentation. This article hits the nail on the head:

Regular pipeline tools like Airflow and Luigi are good for representing static and fault tolerant workflows. A huge portion of their functionality is created for monitoring, optimization and fault tolerance. These are very important and business critical problems. However, these problems are irrelevant to data scientists’ daily lives.

Yes!! Pipelines need to be fast, responsive, and flexible (the less configuration, the better) so that users can quickly make adjustments and experiment. This article introduces a more agile pipeline tool called DVC and walks through its use in collaborative data science settings. It does so through the lens of your productivity as a data scientist, and it makes a convincing case that tooling like this can significantly improve how, and how quickly, you work.
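To make the "rerun only what changed" idea concrete, here is a minimal Python sketch of the core mechanism: hash each stage's input files, record those hashes in a lock file, and skip any stage whose inputs haven't changed since its last run. This is roughly the idea behind DVC's reproducibility features, but DVC's real stage and lock-file handling is much richer; the `Pipeline` class, its `stage` method, and the `pipeline.lock` file are hypothetical names for illustration, not DVC's API.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Callable, Dict, List


def file_hash(path: Path) -> str:
    """Content hash of a file, used to detect whether a dependency changed."""
    return hashlib.md5(path.read_bytes()).hexdigest()


class Pipeline:
    """Hypothetical minimal pipeline: reruns a stage only when its inputs change."""

    def __init__(self, lock_path: Path) -> None:
        self.lock_path = lock_path
        # Dependency hashes recorded the last time each stage ran (persisted on disk).
        self.lock: Dict[str, Dict[str, str]] = (
            json.loads(lock_path.read_text()) if lock_path.exists() else {}
        )

    def stage(self, name: str, deps: List[Path], outs: List[Path],
              fn: Callable[[], None]) -> bool:
        """Run fn if any dependency hash changed or an output is missing.

        Returns True if the stage was (re)run, False if it was skipped.
        """
        current = {str(d): file_hash(d) for d in deps}
        if self.lock.get(name) == current and all(o.exists() for o in outs):
            return False  # inputs unchanged and outputs present: skip
        fn()
        self.lock[name] = current
        self.lock_path.write_text(json.dumps(self.lock, indent=2))
        return True


def demo() -> List[bool]:
    """Run the same stage three times, editing the raw input before the third run."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        raw, clean = root / "raw.csv", root / "clean.csv"
        raw.write_text("x\n1\n2\n")

        def clean_stage() -> None:
            # Toy transformation: drop the header line.
            clean.write_text("\n".join(raw.read_text().splitlines()[1:]))

        results = []
        for i in range(3):
            if i == 2:
                raw.write_text("x\n1\n2\n3\n")  # simulate new input data
            # A fresh Pipeline each time mimics separate command-line invocations.
            pipeline = Pipeline(root / "pipeline.lock")
            results.append(pipeline.stage("clean", [raw], [clean], clean_stage))
        return results
```

Calling `demo()` returns `[True, False, True]`: the stage runs, is skipped because nothing changed, then runs again once the raw file is edited. That cheap skip is what keeps iteration fast when you're experimenting with one step of a longer pipeline.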

Highly recommended 👍👍

Full disclosure: at Fishtown Analytics, we’re building a tool called dbt that empowers SQL analysts with the same type of workflow. I use dbt all day every day, which is why I’m so bullish on this approach.

Using Machine Learning to Explore Neural Network Architecture

Designing neural networks is hard: it takes lots of time from lots of highly trained researchers. Which, of course, is why Google is trying to automate it:

In our approach (which we call “AutoML”), a controller neural net can propose a “child” model architecture, which can then be trained and evaluated for quality on a particular task. That feedback is then used to inform the controller how to improve its proposals for the next round. We repeat this process thousands of times — generating new architectures, testing them, and giving that feedback to the controller to learn from.

This blog post is short, sweet, and very accessible. My favorite part is the diagrams of the human- and machine-designed networks: it’s impressive how similar the topologies are, and interesting to see the small, non-obvious ways in which the machine-designed networks improve on the human ones.

Also worth reading on this topic: this week Airbnb published a writeup of how they use automated machine learning. Airbnb seems to treat the technique more as a workflow enhancement that saves data scientists time than as basic research that pushes forward the boundary of algorithm development. Their approach is probably more useful day to day (if less groundbreaking).

research.googleblog.com
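The propose / train / feed-back loop in the Google quote can be sketched in a few dozen lines of Python. This is a deliberately simplified stand-in, not Google's method: in their research the controller is a recurrent network and every evaluation trains a real child model. Here the "controller" is just one set of softmax logits per design decision, updated with a plain REINFORCE policy gradient, and `toy_reward` is a made-up function standing in for training. The search space, reward, and all function names are hypothetical.

```python
import math
import random
from typing import Dict, List

# Hypothetical search space: an "architecture" is one choice per decision.
SEARCH_SPACE: Dict[str, List[int]] = {
    "num_layers": [1, 2, 3, 4],
    "width": [16, 32, 64, 128],
}


def toy_reward(arch: Dict[str, int]) -> float:
    """Stand-in for 'train the child model and measure validation accuracy'.

    Purely illustrative: peaks at 3 layers of width 64.
    """
    return (1.0
            - 0.1 * abs(arch["num_layers"] - 3)
            - 0.1 * abs(math.log2(arch["width"]) - 6))


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def search(steps: int = 500, lr: float = 0.1, seed: int = 0):
    rng = random.Random(seed)
    # Controller parameters: one logit per option for each decision.
    logits = {k: [0.0] * len(v) for k, v in SEARCH_SPACE.items()}
    baseline = 0.0
    best_arch, best_reward = None, -math.inf
    for _ in range(steps):
        # 1. The controller proposes a child architecture by sampling each decision.
        idx = {k: rng.choices(range(len(opts)), weights=softmax(logits[k]))[0]
               for k, opts in SEARCH_SPACE.items()}
        arch = {k: SEARCH_SPACE[k][i] for k, i in idx.items()}
        # 2. "Train and evaluate" the child (here: the toy reward).
        r = toy_reward(arch)
        if r > best_reward:
            best_arch, best_reward = arch, r
        # 3. Feed the result back: REINFORCE with a moving-average baseline.
        advantage = r - baseline
        baseline = 0.9 * baseline + 0.1 * r
        for k, i in idx.items():
            probs = softmax(logits[k])
            for j in range(len(probs)):
                grad = (1.0 if j == i else 0.0) - probs[j]  # d log p(i) / d logit j
                logits[k][j] += lr * advantage * grad
    policy = {k: softmax(v) for k, v in logits.items()}
    return best_arch, best_reward, policy
```

`search()` returns the best architecture it has seen, that architecture's reward, and the controller's final probabilities for each decision. With a real task, step 2 is where nearly all of the cost lives, which is why the actual system needed thousands of training runs.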

This Week's Top Posts

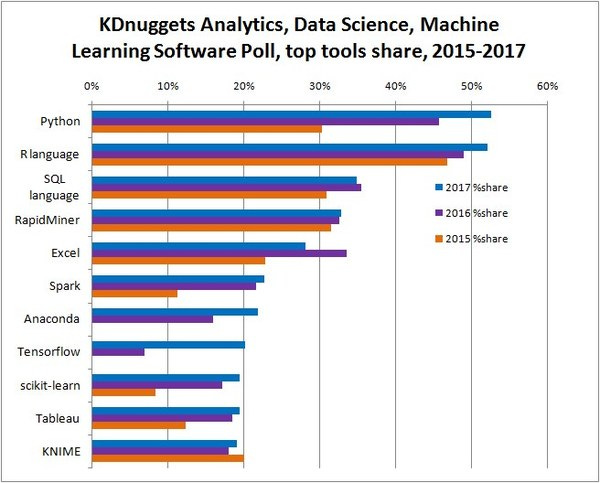

2,900 respondents and plenty of interesting results. Not exactly scientific, but probably a solid gauge of where the industry stands. Python usage is growing rapidly, as is TensorFlow; Excel is the only tool whose usage is shrinking. Worth the 2-minute read.

Usage patterns and the economics of the public cloud

A super-interesting summary of a recent paper. Currently, resource demand in the public cloud has fairly low variability, so pricing and capacity planning are fairly straightforward.

My read on this is that data science workloads are still a small percentage of total cloud workloads. Large data science jobs can be absolutely massive, but they are typically batch-oriented, with resources spun up specifically to train a particular model. Will this ratio change as data science workloads become ever more common? And if so, will that change how cloud resources are delivered?

Spotting a Million Dollars in Your AWS Account

At Fishtown Analytics, we spend all of our time analyzing data from various operational data sources. The one source I’ve never seen anyone dig into is cloud utilization and spend: even though the cloud powers all data collection and processing, cloud usage is typically a black box itself.

This article from Segment walks through how they found over $1 million in savings in their AWS bill, including all of the instrumentation the effort required. Fascinating.
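One common first step in this kind of exercise is attribution: tag resources with an owner, then sum spend per owner so the big (and the unowned) line items become visible. A minimal sketch, assuming billing line items have already been exported with a hypothetical `team` tag; the field names and dollar figures below are invented for illustration and are not Segment's schema or numbers.

```python
from collections import defaultdict
from typing import Dict, List, Optional, TypedDict


class LineItem(TypedDict):
    service: str
    team: Optional[str]  # hypothetical owner tag; None when the resource is untagged
    cost_usd: float


def spend_by_team(items: List[LineItem]) -> Dict[str, float]:
    """Total cost per team, largest first; untagged spend gets its own bucket."""
    totals: Dict[str, float] = defaultdict(float)
    for item in items:
        totals[item["team"] or "(untagged)"] += item["cost_usd"]
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Invented example data.
items: List[LineItem] = [
    {"service": "EC2", "team": "ingest", "cost_usd": 1200.0},
    {"service": "S3", "team": "ingest", "cost_usd": 300.0},
    {"service": "EC2", "team": None, "cost_usd": 500.0},
    {"service": "RDS", "team": "web", "cost_usd": 250.0},
]
print(spend_by_team(items))
```

With this data the printout is `{'ingest': 1500.0, '(untagged)': 500.0, 'web': 250.0}`. A large untagged bucket is itself a finding: it is spend nobody can yet explain.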

Nested data structures within SQL data warehouses are a relatively new phenomenon. Sure, you’ve always been able to throw random JSON data into a large VARCHAR field, but that isn’t the same thing as using a database that innately understands the underlying data structure. This article explains exactly why that difference is important.

If you work with a SQL data warehouse, this is a must-read.

The Hitchhiker’s Guide to d3.js

This is the single best overview for folks learning d3 that I’ve seen. Well worth your time if d3 is on your list.

Data Viz of the week

Amazing interactive Markov-based look at time use.

Thanks to our sponsors!

Fishtown Analytics: Analytics Consulting for Startups

At Fishtown Analytics, we work with venture-funded startups to implement Redshift, Snowflake, Mode Analytics, and Looker. Want advanced analytics without needing to hire an entire data team? Let’s chat.

Stitch: Simple, Powerful ETL Built for Developers

Developers shouldn’t have to write ETL scripts. Consolidate your data in minutes. No API maintenance, scripting, cron jobs, or JSON wrangling required.

The internet's most useful data science articles. Curated with ❤️ by Tristan Handy.


Powered by Revue

915 Spring Garden St., Suite 500, Philadelphia, PA 19123