Data Timeliness. Materialized Views. Reverse ETL. CONNECT BY. Communicating About Data. [DSR #246]

This week's best data science articles

Visualizing Data Timeliness at Airbnb

Metadata!

This post is so timely for me. As dbt’s transformation capabilities become increasingly mature, we continue to be very focused on understanding the adjacent problems, many of which can be summed up under the category of metadata. And as usual, Airbnb is living in the future.

This post describes the tool that a team at Airbnb built to track and analyze timeliness and SLA compliance for their various datasets. The author works through some really meaty problems: the implicit understanding of the DAG required to answer the question “why was this dataset late?”, why locating bottlenecks matters so much, and more.
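The “why was this dataset late?” question boils down to walking the DAG backward from the late dataset. Below is a minimal Python sketch of that idea, not Airbnb’s actual tool. It assumes each dataset starts only after all of its upstreams land, so the upstream that finished last is the one gating it. The `Task` class, the `critical_path` helper, and the landing times are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Task:
    """A node in a hypothetical pipeline DAG."""
    name: str
    finished_at: float  # hypothetical landing time, in hours after midnight
    upstream: list[str] = field(default_factory=list)


def critical_path(tasks: dict[str, Task], target: str) -> list[str]:
    """Trace back from `target`, always following the upstream dependency
    that finished last (assumed to be the one the target was waiting on)."""
    path = [target]
    current = tasks[target]
    while current.upstream:
        gating = max(current.upstream, key=lambda name: tasks[name].finished_at)
        path.append(gating)
        current = tasks[gating]
    return list(reversed(path))  # root-most bottleneck first


# Hypothetical DAG: daily_report depends on sessions and user_dim.
tasks = {
    "raw_events": Task("raw_events", 1.0),
    "raw_users": Task("raw_users", 3.5),
    "sessions": Task("sessions", 2.0, ["raw_events"]),
    "user_dim": Task("user_dim", 4.0, ["raw_users"]),
    "daily_report": Task("daily_report", 5.0, ["sessions", "user_dim"]),
}

print(critical_path(tasks, "daily_report"))  # ['raw_users', 'user_dim', 'daily_report']
```

Here the report waits on `user_dim`, which in turn waits on `raw_users`, so that is where a fix would pay off. A real tool would also have to account for each task’s own runtime, not just when its inputs landed.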

The entire ecosystem will have this type of tooling, and soon.

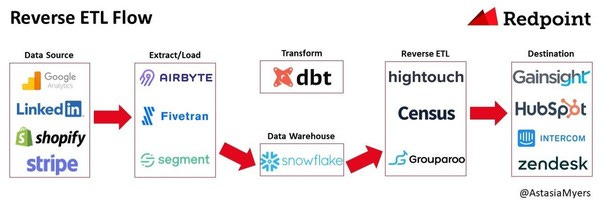

The category of reverse ETL, which empowers operational analytics, has been a good idea for a little while but has truly captured the zeitgeist only in the past six months or so. I wrote about it in my ecosystem post late last year, and Astasia @ Redpoint dives in much more deeply in this post. She draws some fantastic distinctions that I hadn’t fully processed yet.

We’ve also heard customers consider Tray.io and Workato as alternatives to reverse ETL solutions. Tray.io and Workato are visual programming solutions to configure data pipelines, while reverse ETL is declarative. They [Tray.io and Workato] send all the data on every run rather than only sending differences from the last run. Reverse ETL solutions use batch APIs and handle only sending the rows that have changed.
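The “send only what changed, in batches” behavior described in that quote can be sketched in a few lines of Python. This is a hypothetical illustration, not how any particular vendor implements it: the hashing scheme, the `last_synced` state dictionary, and the helper names are assumptions, and deletions are ignored for brevity.

```python
from __future__ import annotations

import hashlib
import json
from typing import Any, Iterator

Row = dict[str, Any]


def row_fingerprint(row: Row) -> str:
    """Stable hash of a row's contents, used to detect changes between runs."""
    payload = json.dumps(row, sort_keys=True, default=str).encode()
    return hashlib.sha256(payload).hexdigest()


def changed_rows(current: list[Row], last_synced: dict[str, str], key: str) -> list[Row]:
    """Return rows that are new, or whose contents differ from the last sync."""
    return [r for r in current if last_synced.get(str(r[key])) != row_fingerprint(r)]


def batches(rows: list, size: int) -> Iterator[list]:
    """Chunk rows for a destination's (hypothetical) batch API."""
    for i in range(0, len(rows), size):
        yield rows[i : i + size]


warehouse = [{"id": 1, "plan": "pro"}, {"id": 2, "plan": "free"}, {"id": 3, "plan": "pro"}]
# Hypothetical state from the previous run: id 1 unchanged, id 2 was "trial", id 3 never sent.
last_synced = {
    "1": row_fingerprint({"id": 1, "plan": "pro"}),
    "2": row_fingerprint({"id": 2, "plan": "trial"}),
}

to_send = changed_rows(warehouse, last_synced, key="id")
for batch in batches(to_send, size=100):
    print(batch)  # stand-in for a call to the destination's batch API

# Record what was sent so the next run only picks up newer changes.
last_synced.update({str(r["id"]): row_fingerprint(r) for r in to_send})
```

Only rows 2 and 3 go out on this run; run it again without changing the warehouse and nothing is sent.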

This is the most complete description I’ve read yet on this important tooling category; highly recommended.

A Data Pipeline is a Materialized View

Another topic I’m really excited about: materialized views! Again, I’ve written about this before, but this author goes much deeper. I was on a call earlier today with the team at Materialize and am pumped to see the momentum happening there.

This is the best post I’ve read to date on this topic, and it’s one that is going to meaningfully change the way we think about our data systems over the next few years.

What would you do with a real-time, SQL-based pipeline?
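The core idea behind treating a pipeline as a materialized view is that a derived table can be kept current by applying each change to its inputs, rather than rebuilt from scratch on a schedule. Here is a toy Python illustration under simplifying assumptions: a single `SUM(amount) ... GROUP BY customer` view, integer amounts, and changes arriving as `(row, diff)` pairs where `+1` is an insert and `-1` a delete. It is not how Materialize or any other engine is implemented.

```python
from collections import defaultdict


def recompute(rows):
    """Batch-style pipeline: rebuild SUM(amount) GROUP BY customer from scratch."""
    totals = defaultdict(int)
    for customer, amount in rows:
        totals[customer] += amount
    return dict(totals)


class IncrementalSum:
    """The same view, maintained by applying (row, diff) changes as they arrive."""

    def __init__(self):
        self.sums = defaultdict(int)
        self.counts = defaultdict(int)  # rows per group, to know when a group empties

    def apply(self, row, diff):
        customer, amount = row
        self.sums[customer] += diff * amount
        self.counts[customer] += diff
        if self.counts[customer] == 0:  # last row for this customer was deleted
            del self.sums[customer]
            del self.counts[customer]

    def snapshot(self):
        return dict(self.sums)


base = [("acme", 100), ("globex", 50)]
view = IncrementalSum()
for row in base:
    view.apply(row, +1)

# New changes arrive: an insert for acme and a delete for globex.
for row, diff in [(("acme", 25), +1), (("globex", 50), -1)]:
    view.apply(row, diff)

rows_now = [("acme", 100), ("acme", 25)]
print(view.snapshot())  # {'acme': 125}
print(recompute(rows_now))  # {'acme': 125}
```

Both approaches produce the same table; the incremental one does work proportional to the size of each change rather than the size of the whole input.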

Congrats to the Chartio team!

This is an interesting one for me (aside from my personal affinity for the folks there) because of the ongoing evolution in the BI space. Products of the 2010-2016 vintage that have been acquired: Looker (Google), Redash (Databricks), Periscope (Sisense), Chartio (Atlassian). Products that have not been acquired: Mode, Superset, Metabase.

Products in other layers of the stack have generally grown larger, and more often reached escape velocity, than those in the BI layer. I don’t know exactly what to make of this. Is there still a Snowflake or Databricks of BI yet to come? Or does the platform layer inherently have a larger addressable market? One of my core beliefs is that “there is no one right way to analyze data,” and I wonder if that’s limiting the overall TAMs here and leading to acquisitions instead of IPOs.

SQL and AdventOfCode 2020, on Snowflake

Felipe Hoffa, long-time developer advocate @ BigQuery who recently moved to Snowflake, solved most of the AdventOfCode puzzles on Snowflake using SQL. For me, this would typically fall into the neat-but-not-revolutionary category, except that his solutions used a few things I had truly been unaware of. Chief among those: the CONNECT BY clause! Recursive processing in idiomatic SQL! If you’ve ever had to parse through a set of hierarchical relationships encoded in a single table with a parent_id, you’ll immediately recognize the benefits here.
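For readers who haven’t seen it, a query of the form `SELECT name, LEVEL FROM org START WITH parent_id IS NULL CONNECT BY PRIOR id = parent_id` walks a parent_id hierarchy from its roots downward. The Python sketch below emulates that traversal so the mechanics are visible. The `org` rows and the `connect_by` helper are hypothetical, the path string only loosely mimics `SYS_CONNECT_BY_PATH`, and the cycle guard is an assumption about handling messy edge data rather than a description of any engine’s behavior.

```python
from __future__ import annotations

from collections import defaultdict


def connect_by(rows: list[tuple[int, int | None, str]]) -> list[tuple[int, str, str]]:
    """Walk (id, parent_id, name) rows from the roots (parent_id is None) down,
    following PRIOR id = parent_id links. Returns (level, name, path) tuples."""
    children: dict[int | None, list[tuple[int, str]]] = defaultdict(list)
    for node_id, parent_id, name in rows:
        children[parent_id].append((node_id, name))

    result: list[tuple[int, str, str]] = []

    def walk(node_id: int, name: str, level: int, path: str, seen: frozenset[int]) -> None:
        path = f"{path}/{name}"
        result.append((level, name, path))
        for child_id, child_name in children[node_id]:
            # Skip nodes already on this path, so cyclic edges (possible when a
            # node is listed under more than one parent) can't recurse forever.
            if child_id in seen:
                continue
            walk(child_id, child_name, level + 1, path, seen | {child_id})

    for root_id, root_name in children[None]:  # START WITH parent_id IS NULL
        walk(root_id, root_name, 1, "", frozenset({root_id}))
    return result


org = [(1, None, "CEO"), (2, 1, "CTO"), (3, 1, "CFO"), (4, 2, "Engineer")]
for level, name, path in connect_by(org):
    print(level, name, path)
```

Each output row carries its depth (`LEVEL` in SQL terms) and its full path from the root, which is exactly what’s painful to get out of a parent_id table with plain self-joins when the depth isn’t fixed.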

If you’ve used CONNECT BY in your work, I’d love to hear from you. Any gotchas?

towardsdatascience.com

How to Drive Effective Data Science Communication with Cross-Functional Teams

Analytics teams focused on detecting meaningful business insights may overlook the need to effectively communicate those insights to their cross-functional partners who can use those recommendations to improve the business.

That made me lol. What I read there: “some of y'all are pretty terrible at talking about your work.” This is a true statement, and it’s not because data people are hopelessly bad communicators but because communicating about data is genuinely hard. It requires navigating multiple levels of granularity, having tremendous empathy for the listener, and actually prioritizing it over other uses of time.

Important post, if a little dry.

Thanks to our sponsor!

dbt: Your Entire Analytics Engineering Workflow

Analytics engineering is the data transformation work that happens between loading data into your warehouse and analyzing it. dbt allows anyone comfortable with SQL to own that workflow.

The internet's most useful data science articles. Curated with ❤️ by Tristan Handy.

Powered by Revue

915 Spring Garden St., Suite 500, Philadelphia, PA 19123