Season 2 of the Analytics Engineering Podcast is here! In Episode 1 Julia and I talk to Ashley Sherwood and cover a lot of ground. The most surprising point to me occurred at the very beginning of the episode:

(…) I think it depends in an org on whether that work is truly data first or not. And I think especially when you're a data person, data first feels like the right way to do things. For me, it's much more what's the right fit for your org. I would say that HubSpot is very human first. If a problem can be solved by having a slightly longer and more empathetic conversation with a customer, that's how the company wants to handle it (…) And that means data isn't first.

🤯 I love this self-awareness / alignment across the company in what has become a fairly contrarian take.

Is Two DAGs Too Many?

Ananth Packkildurai has been hitting home runs recently in Data Engineering Weekly, and this week he wrote another great one:

The data community often compares the modern tech stack with the Unix philosophy. However, we are missing the operating system for the data.

We need to merge both the model and task execution unit into one unit. Otherwise, any abstraction we build without the unification will further amplify the disorganization of the data.

There’s so much good stuff in here that I can’t even begin to summarize it. The one point I want to make, though, is that Ananth did a fantastic job of distilling down the most interesting part of the conversation about bundling/unbundling of Airflow. When you have a single orchestrator and a single DAG, you have a single consistent, cohesive lineage that naturally falls out. The minute that you have multiple DAGs, lineage and observability become much harder.
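To make that concrete, here is a toy sketch of my own (not anything from Ananth's post). The table names and the `downstream` helper are hypothetical, and each DAG is reduced to a list of parent/child edges. It only illustrates that lineage stops at the tool boundary when the edges live in two places.

```python
from collections import defaultdict, deque


def downstream(edges, start):
    """Return every node reachable from `start` by following the edges."""
    children = defaultdict(list)
    for parent, child in edges:
        children[parent].append(child)

    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in children[node]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


# Hypothetical analytics DAG (for example, SQL models).
analytics_dag = [("raw_orders", "stg_orders"), ("stg_orders", "fct_orders")]

# Hypothetical ML DAG. It reads fct_orders but is defined in a separate tool.
ml_dag = [("fct_orders", "churn_features"), ("churn_features", "churn_model")]

# Asked about raw_orders, the analytics DAG alone only knows its own slice.
separate = downstream(analytics_dag, "raw_orders")

# A single DAG holding both sets of edges can follow the whole chain.
unified = downstream(analytics_dag + ml_dag, "raw_orders")

print(sorted(separate))
print(sorted(unified))
```

With two DAGs, nothing tells you that a change to `raw_orders` eventually reaches `churn_model` unless something outside both tools stitches the edges back together.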

If you want more depth on this, read the full post (it’s not long). What I’m hoping to do instead is pose a few questions directly to you, Ananth, in the spirit of pushing the conversation forward. Let me set them up first though…

IMO you define the problem exactly correctly: different DAGs leading to lots of downstream problems. I think of the DAGs as being for different personas: one for analytics & BI practitioners (including analytics engineers) and one for folks doing ML/data science. You say that this is about the style in which these workloads want to be expressed (SQL vs. “fluent”)…I think that’s certainly a part of it but there are also some fundamentally different needs that these two personas have in terms of what kinds of computation they perform.

One of the big things we’re thinking about right now is whether we can bring non-SQL languages into the dbt DAG, since they’re now being supported on most of the modern data platforms. Our big rule has always been: localize the compute to the data, so the answer to “What language does dbt support?” has always been “What languages do the data platforms support?” and historically that’s (mostly) just been SQL. But now with BigQuery’s Serverless Spark and Snowflake’s Snowpark (and of course Databricks has been in this world all along), there are lots of options for doing “pushdown” in non-SQL languages in the MDS.

So to my questions:

How much of the problem you’re describing here would go away if dbt Core natively supported non-SQL languages? Could we go back to just having a single DAG (now in dbt) or are there other barriers?

Do you think the “task execution” and the “model” are actually different? Or can the concept of a dbt model be extended to handle what has historically been written as a task with minimal friction?

As we think about how to push dbt in this direction, what do you think should be top of mind for us that we may not be considering?

Just to manage expectations—this is not a definite. It’s a meaningful shift for dbt and is heavily dependent on the capabilities of the underlying platforms. One of the complexities that we need to work through is that the capabilities of the different data platforms vary so widely that it’s hard to create a single abstraction layer that gives dbt users a consistent experience. We’re not going to put anything out into the world if it can’t create the same magically simple experience that dbt-on-SQL creates today.

There are several brains thinking about this stuff right now, though (including mine), so it’s a useful conversation to have. Transparency always wins.

I also want to publicly demonstrate some humility here. Our experience as a company and community is heavily centered around the BI & analytics part of the data ecosystem; we have less expertise in the ML & data science world. This is why I think this conversation is so critical! If dbt is to play a role in that part of the ecosystem as well, we have a lot of learning to do and a lot of conversations to have. The team and I are ready to listen, ready to learn.

From elsewhere on the internet…

🌶️ Sarah Krasnik helps us all choose a data quality tool. She starts:

This post is not a sponsored post. When I was evaluating data quality tools, I felt there wasn’t enough vendor neutral content to understand what kind of approaches are common and what tools exist.

It’s a really fantastic post. I field questions all the time from folks trying to understand data quality / observability / lineage and this is now the resource that I will point them to. Make sure to read all the way to the end where Sarah almost goes Gartner-magic-quadrant on us.

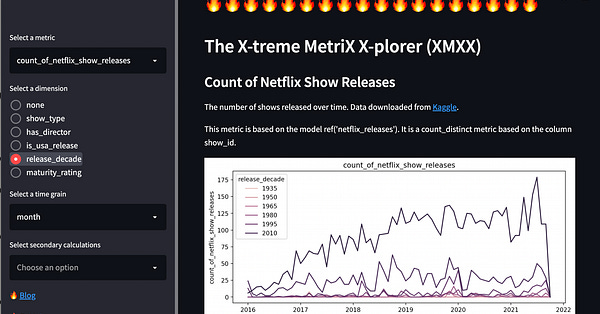

I loved seeing Stephen’s experimentation with dbt’s new metrics functionality. My favorite bit:

“To shepherd data from raw source to key metric,” is as apt a description of analytics engineering as any, and it can now be managed coherently in a single workflow.

Every once in a while a Josh Wills tweet travels throughout the entirety of data Twitter. I don’t spend a lot of time thinking about how data is transferred over wire protocols, but I learned a lot from this whole thread. Lots of fantastic humans weighed in.

Vicki Boykis talks about ownership of software problems:

A good developer cares about the code they’re putting out into the world not only because it has to work for them, but because their code will spend most of its time being read and implemented and worked on by others, and as developers, we want to care about those people, too. By observing someone who does one or more of these over time, you can spot the telltale signs of someone who cares about their work.

Roxanne Ricci writes about BackMarket’s introduction of BigQuery to their stack, a large replatform from Snowflake. The thing that seems to have driven this is their ability to federate usage / ownership of data among distributed teams, including letting teams pay for their own compute:

we create for each consumer (we consider a consumer to be a team of people) a dedicated GCP project where we’ll create the authorized views they need based on the tables ingested in our Data Engineering project. It’ll allow them to have only access to the data they need and use and to pay for their own queries.

Very data-mesh-y! Love it. The thing that surprises me is that I think you can achieve this same structure in Snowflake with multiple accounts and shares, but I’ve never tried it myself. Curious to know if anyone has and how it has gone for you. I think the main thing I’m uncertain about is if multiple Snowflake accounts at a single org can have separate billing…this seems like a really important feature from a data mesh perspective.

David Jayatillake wonders how we can integrate the different parts of our pipelines more tightly. Strong agree: we will almost certainly be able to reason about the ingestion and transformation stages of pipelines more holistically over the coming 12-24 months.

Spencer Ho from Salesforce writes about Activity Platform, an internal system in the Salesforce ecosystem. The demands on it due to scale and particular requirements are unique:

To serve activities as a time series, Activity Platform needs a big-data store that supports mutability, global indexing, and stream-like, from write to read data visibility

Mutability, in particular, violates much of the foundational thinking in the OLAP world and violates the design principles of many existing data stores. The article goes deep on the architecture of databases and how the requirements for a given system play out in the choices an architect makes when designing one. While you may not have enough scale to build your own database, it’s a very similar thought process you need to go through to select the right one for your task at hand…just more degrees of freedom.