Embedded ML. Trustworthy Analysis. ML @ Twitter. Snowflake & BigQuery. [DSR #140]

The Week's Most Useful Posts

The Future of Machine Learning is Tiny

I’m convinced that machine learning can run on tiny, low-power chips, and that this combination will solve a massive number of problems we have no solutions for right now.

This is a fascinating read. To give you a quick sense of how small we’re talking:

…the MobileNetV2 image classification network takes 22 million ops (each multiply-add is two ops) in its smallest configuration. If I know that a particular system takes 5 picojoules to execute a single op, then it will take (5 picojoules * 22,000,000) = 110 microjoules of energy to execute. If we’re analyzing one frame per second, then that’s only 110 microwatts, which a coin battery could sustain continuously for nearly a year.
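
The arithmetic in that quote is easy to check yourself. Here is a minimal sketch in Python: the op count, energy per op, and frame rate come from the quote, while the coin cell figures (roughly 225 mAh at 3 V, in the range of a CR2032-style cell) are my own assumption and not from the post.

```python
# Back-of-the-envelope check of the energy figures in the quote above.
PICOJOULE = 1e-12

ops_per_inference = 22_000_000     # MobileNetV2, smallest configuration (from the quote)
energy_per_op_j = 5 * PICOJOULE    # 5 picojoules per op (from the quote)
frames_per_second = 1              # one frame analyzed per second (from the quote)

# Energy for one inference, and average power at the given frame rate.
energy_per_inference_j = ops_per_inference * energy_per_op_j
average_power_w = energy_per_inference_j * frames_per_second

# ASSUMPTION (not from the post): a coin cell of about 225 mAh at 3 V.
battery_capacity_ah = 0.225
battery_voltage_v = 3.0
battery_energy_j = battery_capacity_ah * battery_voltage_v * 3600  # Ah * V * s/h = joules

# How long the assumed cell could sustain that average power.
runtime_days = battery_energy_j / average_power_w / 86_400

print(f"energy per inference: {energy_per_inference_j * 1e6:.0f} microjoules")
print(f"average power: {average_power_w * 1e6:.0f} microwatts")
print(f"runtime on the assumed coin cell: {runtime_days:.0f} days")
```

Under these assumptions the runtime works out to several months, the same ballpark as the quote. The sketch only counts compute energy; a real device would also spend power on the sensor, memory, and idle time.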

I learned a lot about neural network power consumption in this post; it’s the only place I’ve seen the topic discussed in depth. Most of us spend our time running jobs on huge clusters in far-away data centers, so power consumption is rarely a consideration.

The impacts on future product design could be significant.

I love this post, and this line in particular:

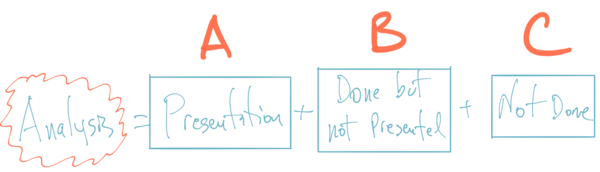

Almost all presentations are incomplete because for any analysis of reasonable size, some details must be omitted for the sake of clarity.

Presenting one’s analysis is an important part of the analytical process, and because presentation nearly always involves summarizing information content, analysts have to make choices about what information to include and what to exclude. This is where trust comes in: does your audience trust the choices you’ve made? Have you done the work, just chosen not to present it? Or have you not actually done the work?

This is a foundational, how-to-think-about-your-job-better post.

In this blog post, we will discuss the history, evolution, and future of our modeling/testing/serving framework, internally referred to as Deepbird, applying ML to Twitter data, and the challenges of serving ML in production settings.

A long, detailed post about the history of ML @ Twitter. It’s worth seeing the trajectory: if your company is going through the ML learning curve today, there are plenty of lessons here. Technical.

Two classic problems in sales and marketing are simply applications of reordered lists: ranking sales leads and triaging churn risk.

The most recent post on Locally Optimistic reframes recommender and scoring algorithms, essentially treating all of them as “reordering lists”. The framing is useful in itself, and the post teases out several insights from it. I particularly liked this one:

Merely presenting items in a list may not indicate whether a highly-ranked item is one that has a high likelihood of an outcome (movie you will rank highly; customer who will churn), or one that has a high propensity to benefit from some sort of interaction. These are not the same thing.
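
To make that distinction concrete, here is a small sketch in Python. Everything in it is hypothetical: the customers, the two churn probabilities per customer, and the idea that a model has already produced both numbers. It only illustrates that sorting by likelihood of the outcome and sorting by expected benefit from an intervention can produce different lists.

```python
from dataclasses import dataclass


@dataclass
class Customer:
    name: str
    churn_prob_no_contact: float    # hypothetical model output: churn risk if we do nothing
    churn_prob_if_contacted: float  # hypothetical model output: churn risk if we reach out

    @property
    def benefit(self) -> float:
        # Expected reduction in churn risk from reaching out.
        return self.churn_prob_no_contact - self.churn_prob_if_contacted


# Made-up numbers, chosen only to show the two orderings diverging.
customers = [
    Customer("A", 0.90, 0.88),  # very likely to churn, outreach barely helps
    Customer("B", 0.60, 0.30),  # moderately risky, outreach helps a lot
    Customer("C", 0.40, 0.35),
    Customer("D", 0.25, 0.05),  # low risk, but outreach nearly eliminates it
]

# List 1: ranked by likelihood of the outcome (churn).
by_risk = sorted(customers, key=lambda c: c.churn_prob_no_contact, reverse=True)

# List 2: ranked by propensity to benefit from an interaction.
by_benefit = sorted(customers, key=lambda c: c.benefit, reverse=True)

print("by churn risk:", [c.name for c in by_risk])
print("by benefit:   ", [c.name for c in by_benefit])
```

In this toy data, customer A tops the risk list but sits at the bottom of the benefit list, which is exactly the gap the quote is pointing at.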

How Compatible are Redshift and Snowflake’s SQL Syntaxes?

I just went through the process of converting 25,000 lines of SQL from Redshift to Snowflake. Here are my notes.

I’ve been working on this post for a while. It summarizes my experience on a very successful project I wrapped up fairly recently. One of our clients was on a Redshift + dbt + Looker stack with a sizable Redshift installation, and I helped them transition from Redshift to Snowflake. The results were impressive: concurrency, which was previously the biggest pain point, is just not a problem any more.

We’re starting to see a lot of companies migrate from Redshift to Snowflake. If you’re thinking about making the move, this post is worth the read. I haven’t seen anyone else write about this process yet.

BigQuery vs Redshift: Pricing Strategy

In keeping with the theme above, this is a great post about BigQuery cost-reduction strategies. It goes into detail on how cost calculations work in BQ and on techniques that users can employ to reduce costs, including date sharding / partitioning and creating rollups.

IMO, one of the biggest reasons why BigQuery adoption isn’t faster is that its cost mechanism simply isn’t well-understood in the market.
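
For a rough feel of why partitioning matters, here is a sketch in Python of on-demand cost as a function of bytes scanned. The numbers are all assumptions of mine, not figures from the post: the price per TB is an illustrative placeholder (check current pricing), the table layout is made up, and a TB is treated as 10^12 bytes for simplicity.

```python
BYTES_PER_GB = 10**9
BYTES_PER_TB = 10**12  # ASSUMPTION: decimal units, for simplicity


def estimate_cost_usd(bytes_scanned: int, price_per_tb_usd: float) -> float:
    """Estimate on-demand query cost as bytes scanned times a per-TB rate."""
    return bytes_scanned / BYTES_PER_TB * price_per_tb_usd


# ASSUMPTIONS: illustrative rate and a hypothetical date-partitioned table.
price_per_tb_usd = 5.0
bytes_per_daily_partition = 10 * BYTES_PER_GB
days_in_table = 365
days_needed = 7  # the query only cares about the last week

# Without partition pruning, the query scans every day in the table.
full_cost = estimate_cost_usd(days_in_table * bytes_per_daily_partition, price_per_tb_usd)

# With a filter on the partition column, only the needed days are scanned.
pruned_cost = estimate_cost_usd(days_needed * bytes_per_daily_partition, price_per_tb_usd)

print(f"full scan:   ${full_cost:.2f}")
print(f"pruned scan: ${pruned_cost:.2f}")
```

The sketch ignores details such as per-query minimums and the fact that only referenced columns are scanned, but it shows the basic lever: the less data a query touches, the less it costs.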

Data viz of the week

Love the narrative overlaid on the image.

Thanks to our sponsors!

Fishtown Analytics: Analytics Consulting for Startups

At Fishtown Analytics, we work with venture-funded startups to build analytics teams. Whether you’re looking to get analytics off the ground after your Series A or need support scaling, let’s chat.

Stitch: Simple, Powerful ETL Built for Developers

Developers shouldn’t have to write ETL scripts. Consolidate your data in minutes. No API maintenance, scripting, cron jobs, or JSON wrangling required.

The internet's most useful data science articles. Curated with ❤️ by Tristan Handy.

915 Spring Garden St., Suite 500, Philadelphia, PA 19123