Four frameworks for self-service analytics

Why "self-service" means four different things. Who owns data quality. The latest data libraries and helper functions. Modern data stack milestones.

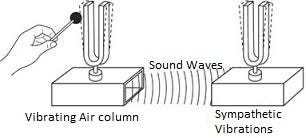

Whenever I read something truly important, I find that it keeps resonating in my brain for days afterward with a steady hum. Important ideas expressed simply are kind of like tuning forks for the brain: an important idea forces my brain to focus on its pitch, and I often find myself unconsciously tuning the instrument of my thoughts around it.

John Cutler’s article this week is my current tuning fork, and frameworks are the pitch my brain is humming. In particular, that pitch has been resonating with a topic I think about a lot: self-service analytics. What happens when we think about self-service analytics as a framework?

Also in this issue:

Chad Sanderson on why data quality should be the responsibility of the data producer

Every narrative’s powerful friend by Ted Cuzzillo

Jamin Ball breaks down what the current macroeconomic climate means for our industry.

An open source data diff tool by Datafold

dbt YAML checker, an open source helper library by Kshitij Aranke

Continual.ai is GA and raises a Series A! Congrats!

Four frameworks for self-service analytics

TL;DR: whenever you’re making a decision, you’re always using a framework. Whenever you’re taking steps to do something, you’re always using a process. The difference is only in how conscious you are of the framework or the process, and by extension, how conscious you are about the implicit biases and limitations of your framework and process.

Let’s define some of these words and establish some shared baselines. Here’s how I think about frameworks and processes:

Framework: literally a frame for work. A heuristic that helps you understand something and decide how to act. “Righty tighty, lefty loosey” is a framework. Stereotypes of all kinds are a framework. Traffic rules are a framework because they help you make decisions on the road (taking turns at a stop sign, the Pittsburgh left). Ruby on Rails is a framework because it is opinionated about how you should build a web application.

Process: a decision tree or series of steps for executing something. A process always has a framework behind it, whether or not that framework is explicit.

John’s post is a framework for thinking about and evaluating frameworks, and my brain absolutely loves meta commentary. John argues that it’s important to interrogate the frameworks we come across, and I strongly agree. Three questions stood out to me as important to ask when evaluating a framework:

Why does the framework exist in the first place? What problem is it designed to solve, and why is that an important problem to solve?

Whom is the framework for? If the answer is “everybody”, it’s probably neither a good nor a helpful framework.

What are the framework’s limits? Where and when does it stop being applicable, and what happens in those situations?

In the rest of this post, I’ll take each of those questions and use them to interrogate the different frameworks (yes, plural) I think are behind the term we talk about so often: self-service analytics.

What is the why?

John has this to say about why frameworks often fail in practice, no matter how well designed or intentioned they are:

It is tempting to make “adopting the framework” (or selling the framework) the goal, instead of using the framework as a tool to achieve a goal. Companies talk about Doing X as the goal, not about what Doing X will enable. Time passes, and the company forgets the Why, and it is all about process/framework conformance.

John Cutler, The Beautiful Mess

Now swap out the X in the paragraph above with “self-service analytics”: when we talk about self-service analytics being a goal, what does it enable? What is the self-service analytics “why”?

Here are a few “whys” I’ve heard over the years:

Freeing up data teams to focus on the “important” questions. This reasoning implies that without self-service analytics, data teams will be overwhelmed with answering unimportant questions.

Assumptions: data humans know what is “important” to know about the business and folks who don’t work in data do not. Folks outside of the data team need help asking “good” questions.

What it looks like: mapping out commonly asked questions and building resources to answer them. The data team spends a lot of time cleaning, modeling and documenting data for these questions. Paradoxically but unsurprisingly, the least important data (by the data team’s measure) ends up getting the best documentation and support. When a truly important and urgent question comes up, the data team often still has to scramble to find, clean and model the data they need to answer it.

Success measures: reduction of questions in the queue.

Data “democratization” / breaking down data silos. In this mental model, self-service analytics enables anyone who needs to work with data to do so.

Assumption: working with data is difficult, especially if what you’re looking for is outside of your existing domain of expertise.

What it looks like: friendly and accessible landing pages describing how to get started with specialized datasets. Data probably still lives in several different systems owned by different functional areas. There may be multiple data stacks. Data education content is focused on getting folks up to speed on how to use the data stack of choice for a particular business area.

Success measures: number of humans querying data from outside of the functional area that owns the dataset.

Governance/single source of truth for knowledge in a business. In this model, work to enable self-service analytics is directed at the most important questions for the business.

Assumption: the entire business should use the same definitions for important concepts, and careful curation is required to enable this outcome.

What it looks like: prioritizing work that establishes single sources of truth, like entity resolution. Focus on a smaller set of core datasets rather than attempting coverage of the large surface area of potentially answerable questions you see in model #1 above. Standardizing on a single data stack and set of tools. Accuracy of data moved by reverse ETL becomes critical.

Success measures: coverage of important business concepts and entities, data quality, how quickly data lands in the warehouse and is usable.

Empowering the entire business to make decisions with data. This is perhaps the most ambiguous of the mental models I’ve observed.

Assumptions: the business isn’t already using data to make decisions. Using quantitative data to make decisions is better than making decisions some other way.

What it looks like: Who in the business needs to make decisions with data? What are those decisions? What happens if they don’t use data? The answers to those questions frequently determine what this looks like in practice. Businesses subscribing to this mental model can be at risk of over-rotating on quantitative metrics based on existing and available data, and underinvesting in generating new data through research (surveys, qualitative interviews, etc.).

Success measures: often focused on measuring how well different people in the business perform the act of using data. As a result of this incentive structure, lots of data signaling is observable in internal communication (gratuitous usage of stats and charts). Validity of the insights being reported can be at risk if it is not made an explicit priority.

What models for self-service analytics have you seen? What are their tradeoffs?

Who is it for?

Most frameworks weren’t designed for broad use. They were designed for a particular context, with lots of implicit context.

John Cutler, The Beautiful Mess

The next logical question is who self-service analytics is for. Depending on which model your organization subscribes to, your answer will be different. Is it…

Folks who don’t know [insert language or tool of choice used by the data team]?

Other teams that need to use data in a different part of the organization?

Business executives and decision makers?

Here’s one framework you can use to think about whom self-service analytics is for in the business:

Let’s imagine that there are broadly two kinds of questions: questions that help determine what the business (or part of the business) should do next, and questions that evaluate how well something was done.

Now, let’s imagine that there are different types and layers of organizational stakeholders you might interact with. The big categories I personally think about a lot are: individual teams (maybe functional teams or project teams); functional areas (e.g. the Marketing organization, the Customer Success organization, the Community organization); folks making decisions about go-to-market (GTM) motions in your business; folks making decisions about what to build next in your product; and folks making decisions about how the business is spending money (profit and loss, or P&L).

Now, if you combine the type of question with the type of stakeholder, you can research the specific jobs to be done at the intersection of the two. You can then decide how to allocate your data team’s resources to support those jobs to be done.

In my own example, I’m making an explicit decision that the business is best served when functional areas and individual teams largely self-serve their analytics, while the analytics team focuses on helping evaluate go-to-market motions and the success of the product with the user base. I’m also deciding that questions about what to do next in the GTM and product worlds are best served by qualitative research, and that anything to do with how the business spends money is the domain of a strategic finance function.
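If it helps to see that allocation written down, here is a minimal Python sketch of it as a lookup table. The question-type labels, stakeholder labels, and the fallback for unmapped cells are hypothetical choices of mine, not part of any tool:

```python
# Hypothetical labels for the two broad types of questions.
WHAT_NEXT = "what should we do next?"
HOW_WELL = "how well was it done?"

# Each (question type, stakeholder) cell maps to whoever should own it,
# following the example allocation described in the text.
OWNERS = {
    (WHAT_NEXT, "individual team"): "self-serve",
    (HOW_WELL, "individual team"): "self-serve",
    (WHAT_NEXT, "functional area"): "self-serve",
    (HOW_WELL, "functional area"): "self-serve",
    (WHAT_NEXT, "go-to-market"): "qualitative research",
    (HOW_WELL, "go-to-market"): "analytics team",
    (WHAT_NEXT, "product"): "qualitative research",
    (HOW_WELL, "product"): "analytics team",
    (WHAT_NEXT, "profit and loss"): "strategic finance",
    (HOW_WELL, "profit and loss"): "strategic finance",
}


def route(question_type: str, stakeholder: str) -> str:
    """Look up who should handle a question.

    The fallback for cells the matrix doesn't cover is a hypothetical
    choice: send it to the data team for triage.
    """
    return OWNERS.get((question_type, stakeholder), "triage with the data team")
```

For example, `route(WHAT_NEXT, "product")` returns `"qualitative research"`, the same redirect described for “what should we do next” product questions. Writing the matrix out this explicitly also makes gaps visible: any cell you can’t fill in is a decision you haven’t made yet.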

Your framework might differ from this one! But now I have a framework that I can use to help me think about designing my self-service data experience. For example, I can now reason about things like:

If I know I want my analytics humans focused on performance of the product and go-to-market motions, I know I need a well-modeled layer of the core parts of the GTM motion and things like product usage and adoption. This is what my analytics engineering function is likely spending most of their time curating. I also know that when I see a “what should we do next” type of question about the product, I should redirect it to my qualitative research counterpart, and that any questions to do with P&L are the domain of my finance team.

What do individual teams and functional areas need to measure their own success? How can I deliver it in a way that is repeatable? In this world I’m prioritizing things like team OKR templates, and taking top-line business metrics and helping functional areas attribute their impact to those metrics. For example, I will aim to break down and model product data in a way that helps individual product managers or product areas measure their own impact on overall product usage and adoption.

What are its limits?

How many frameworks do you know that explicitly mention when you should stop using them? Not many.

John Cutler, The Beautiful Mess

This is probably my favorite part of John’s post because the question of when something is not applicable is often forgotten. Let’s try to answer it for self-service analytics.

When should someone stop trying to self-service and reach out for help? In other words, when do you dial 0 to talk to a human? ;)

If you subscribe to model #1 above (data teams focus on the “important” questions), you might expect folks to reach out to you when a question is “important” enough. The trouble is, how do your stakeholders know when that is? This is especially tricky if you also assume your stakeholders don’t know how to ask good questions. So you come up with a complex decision tree (process) that helps folks figure this out before they file a ticket with you. The number of tickets in your queue goes down! Success! Except it’s not actually a success. Your process is heavily biased by your framework, and folks get turned off by it. You find yourself missing out on being a part of important business decisions. You resort to things like watching various Slack channels and other business communication, and you continually find yourself asking to be in the room when “important” decisions get made so you can help influence them.

If you subscribe to model #2, you care about folks outside your team using your data resources. You encourage folks to reach out when something doesn’t work as expected. Your model of interacting with self-service stakeholders is kind of like a customer support team — you file tickets for bugs in data, gaps in your materials, and so on. Those tickets help other folks work around the problem until you fix it, which is realistically probably never.

If you subscribe to model #3 (single source of truth for important business data) you also want people to reach out to you about bugs and problems, but this time you’re very motivated to fix them because getting something wrong in your model likely has an extremely high impact on the business. Folks reach out to you when your data model doesn’t cover something they think is important, and you go through an exercise with your stakeholders on a regular basis to figure out what needs to be added/changed/removed. You’re incentivized to set up monitoring and check in on progress of business metrics to get ahead of any questions and problems that arise, enabling you to continue to focus on proactive and not reactive work.

And if you subscribe to model #4 (help business decision makers make data-driven decisions), folks usually reach out to you for help becoming more data-driven. If you also care about the validity of the decisions being made, you spend a lot of your time helping check folks’ results. You end up getting pulled into random business problems and working on a large cross-section of questions. Focus becomes difficult in this model: you largely work reactively, and your impact is one step further removed than in model #3.

Is one model better than the others? I don’t necessarily think so. They all have upsides and downsides, but I’ve found it so helpful to just lay them out using John’s framework on frameworks, think through the pros and cons, and make a decision about what works for me. And now when I hear someone else talking about how they made their version of self-service analytics successful, I’ll always be thinking about — which model do they subscribe to? What are their assumptions?

Elsewhere on the internet…

Chad Sanderson on why data quality should be the responsibility of the data producer

If you talk to almost any SWE that is producing operational data which is also being used for business-critical analytics they will have no idea who the customers are for that data, how it's being used, and why it's important.

This becomes incredibly problematic when the pricing algorithm that generates 80% of your company's revenue is broken after the column is dropped in a production table upstream.

I’ve found this statement to be largely accurate, but it obscures the reasons we see this outcome. And those reasons are just as important as the problem itself, if not more so. Several things could be behind this:

A good-faith lack of awareness that the problem even exists. In this case, it’s very much on us data folks to help the right leaders in the organization understand the business impact!

Engineering leaders are typically not incentivized to prioritize non-user-facing applications of their work. Again, this is often in good faith: an engineering team always has more work to do than it has time and resources to do it. Prioritization goes something like: build new things > fix important user-facing problems (a close second) > likely an on-call rotation > hiring… Which brings me back to the first point: to get on this tight priority list, we need to express the importance of proactively preventing certain kinds of data quality problems in terms the entire business understands.

A lack of experience reasoning about this type of downstream application of engineering effort. This is largely the result of the first two points above. And the way to solve it is… working with our engineering counterparts to develop frameworks for managing production data and how it lands in the warehouse.

To quote another part of Chad’s post:

you should work with your product and data teams to own production schemas for action-based events and entity CRUD events. These schemas should be decoupled from your typical operational workflows and treated as APIs. Not only would this be radically more impactful to the company, but it creates a foundation for a true event-driven architecture - highly consumable event streams that map closely to real-world behavior that can be leveraged in any application.

My main reaction to Chad’s post is “yes and”. Yes, and it’s on us to work proactively with our engineering counterparts on this problem. We have to learn to explain the why here in a way that resonates with the needs of the business.

You might also be interested in his post from a couple of months ago with Barr Moses, which goes into more detail about what a world of data as immutable APIs looks like.
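One way to treat an event schema “as an API” is to run a backward-compatibility check whenever a producer proposes a change, so that something like the dropped column in Chad’s example fails review instead of breaking the pricing pipeline. A minimal Python sketch, assuming a schema is just a mapping of field names to type names, and that adding fields is safe while dropping or retyping them is not. The event and field names are hypothetical, and this is not taken from Chad’s post or from any particular schema registry:

```python
from typing import Dict, List

# Simplifying assumption: a schema is a mapping of field name -> type name.
Schema = Dict[str, str]


def breaking_changes(published: Schema, proposed: Schema) -> List[str]:
    """List changes that would break downstream consumers of an event.

    Adding a new field is treated as safe; dropping a field or changing
    its type is treated as breaking, as it would be for a public API.
    """
    problems = []
    for field, ftype in published.items():
        if field not in proposed:
            problems.append(f"dropped field '{field}'")
        elif proposed[field] != ftype:
            problems.append(
                f"field '{field}' changed type {ftype} -> {proposed[field]}"
            )
    return problems


# Hypothetical entity CRUD event: an order was updated.
order_updated_v1 = {"order_id": "string", "price_cents": "int", "updated_at": "timestamp"}
order_updated_v2 = {"order_id": "string", "updated_at": "timestamp", "currency": "string"}

print(breaking_changes(order_updated_v1, order_updated_v2))
# ["dropped field 'price_cents'"]
```

A check like this only helps if it runs where producers make changes (for example, in their CI), which is exactly the kind of collaboration with engineering counterparts argued for above.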

Applying John Cutler’s framework on frameworks to Chad’s proposal: there’s a limit to the applicability of the immutable, event-driven data warehouse. This is a solution designed to solve the problem of quality and parity between product data and the actual product of a software company. There are all kinds of other data that land in a warehouse that can’t be event-driven (not yet, anyway), like your sales data, customer ticket data, marketing campaign data, etc. And transformation work still needs to happen to connect your event-driven product data, even if it is highly enriched with context about the event, to the data generated by the rest of the business.

It is a good solution for the context it is aimed at, and I’ve seen examples of this implemented in software companies. However, just as John cautions us against over-rotating on the framework rather than the goal behind it, I’ve found folks sometimes forget why these types of event-driven systems were set up in the first place, and you end up with poor-quality data soup anyway.

The main point being — it’s not the framework of an immutable, event-driven warehouse that will get us to the end state of high-quality data. It is the continual articulation of why this is important, even after you’ve set up your processes, and embedding that sense of “why” in the fabric of how your entire business operates.

Every narrative’s powerful friend, by Ted Cuzzillo

This is a short and sweet summary of a book that’s going on my much too long summer reading list, with a great reminder: the best kind of data is data that has a story that people want to tell and retell. Some great tips for how to achieve that in the link above!

Clouded Judgement, by Jamin Ball

One of the most amazing things about working at a startup like dbt Labs is the caliber of investors and advisors we get to rub shoulders with. This week at an internal all-hands, Jamin Ball came to speak with the company about how he thinks about what’s happening in the economy right now, and what it means for businesses like ours. Jamin’s blog post this week is a pretty good overview of the main points he covered in his discussion with us. Highly encourage a read!

Datafold releases an 🤯 open source tool to help diff datasets!

Why this is cool:

If you’ve ever had to work on a data migration (yes, people move databases and cloud services pretty regularly, and yes, it is painful), you know how tricky it is to make sure everything in the destination matches the source. data-diff is very helpful here: it automates what would otherwise be a lot of querying to identify the rows that differ, and it pushes compute into your database to make this a lot faster.
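As I understand the approach data-diff describes, the speedup comes from checksumming key ranges on both sides and only drilling into ranges whose checksums disagree, so matching segments never get compared row by row. Here is a minimal in-memory Python sketch of that bisection idea. It is not data-diff’s code or API: the dict-based tables, integer keys, function names, and the `bisect_factor` and `threshold` parameters are all assumptions for illustration.

```python
import hashlib
from typing import Dict, List

# In-memory stand-in for a table: integer primary key -> serialized row.
Table = Dict[int, str]


def checksum(table: Table, lo: int, hi: int) -> str:
    """Hash all rows with lo <= key < hi.

    In a real database this would be one aggregate query per segment,
    so only the hash (not the rows) has to cross the network.
    """
    h = hashlib.sha256()
    for key in sorted(k for k in table if lo <= k < hi):
        h.update(f"{key}:{table[key]}|".encode())
    return h.hexdigest()


def diff_segments(src: Table, dst: Table, lo: int, hi: int,
                  bisect_factor: int = 2, threshold: int = 4) -> List[int]:
    """Return keys in [lo, hi) whose rows differ or are missing on one side."""
    if checksum(src, lo, hi) == checksum(dst, lo, hi):
        return []  # segment matches: skip it entirely
    if hi - lo <= threshold:
        # Small enough to compare row by row.
        return [k for k in range(lo, hi) if src.get(k) != dst.get(k)]
    step = -(-(hi - lo) // bisect_factor)  # ceiling division
    diffs: List[int] = []
    for start in range(lo, hi, step):
        diffs += diff_segments(src, dst, start, min(start + step, hi),
                               bisect_factor, threshold)
    return diffs


# Usage: one modified row and one missing row in the destination.
source = {k: f"row-{k}" for k in range(1000)}
dest = dict(source)
dest[417] = "row-417-corrupted"
del dest[901]
print(diff_segments(source, dest, 0, 1000))  # [417, 901]
```

The design choice worth noticing is that the expensive part (hashing) happens per segment on each side independently, which is what makes it possible to push that work into each database instead of pulling every row out for comparison.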

dbt YAML check, an open source helper library by Kshitij Aranke

Why this is cool:

Linters like SQLFluff are mostly focused on verifying that your SQL is correct and conforms to the standards you’ve set for readable code. That’s super important… but what about the internal consistency of your dbt project? Did you remember to reference all your SQL models correctly in your YAML? How about that hard-to-find typo?

Enter the YAML check! 🦸
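To illustrate the kind of internal-consistency check described above, here is a hypothetical Python sketch that cross-checks model names declared in a dbt properties YAML against the `.sql` files in a project, and suggests a likely match for typos. It is not Kshitij’s library or its API. It assumes the YAML has already been parsed into a dict (for example with PyYAML’s `yaml.safe_load`) and that each model’s name matches its file name, which is how dbt names models.

```python
import difflib
from pathlib import PurePath
from typing import Dict, Iterable, List


def check_yaml_models(sql_paths: Iterable[str], yaml_doc: Dict) -> List[str]:
    """Cross-check model names in a parsed properties YAML against .sql files."""
    # A dbt model's name is its file name without the .sql extension.
    sql_models = {PurePath(p).stem for p in sql_paths if p.endswith(".sql")}
    declared = [m["name"] for m in yaml_doc.get("models", [])]

    problems = []
    for name in declared:
        if name not in sql_models:
            # Suggest the closest existing model name, if any is similar enough.
            hint = difflib.get_close_matches(name, sorted(sql_models), n=1)
            suffix = f" (did you mean '{hint[0]}'?)" if hint else ""
            problems.append(f"YAML declares '{name}' but no {name}.sql exists{suffix}")
    for name in sorted(sql_models - set(declared)):
        problems.append(f"{name}.sql has no entry in YAML")
    return problems


# Usage with a hypothetical project: one typo and two undocumented models.
sql_files = ["models/stg_orders.sql", "models/stg_customers.sql", "models/fct_revenue.sql"]
parsed_yaml = {"models": [{"name": "stg_orders"}, {"name": "stg_custmers"}]}
for problem in check_yaml_models(sql_files, parsed_yaml):
    print(problem)
```

The typo `stg_custmers` gets flagged twice in spirit: once as a YAML entry with no matching file (with `stg_customers` suggested) and once because `stg_customers.sql` ends up undocumented.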

Thanks Kshitij for expanding the ecosystem of things that work with dbt!

And finally, Continual.ai is GA and raises a Series A! Congrats friends!

Until next time! 👋