From Rows to People

What does it take to make sure that a single row in a single warehouse table corresponds to exactly one human?

👋

Hope you’re having an excellent weekend. Make sure to subscribe to the podcast, as in the most recent episode Julia and I talk about developing junior analytics talent with Brittany Bennett of the Sunrise Movement. Brittany has thought more about growing the humans on her team than potentially any data leader I’ve met.

That’s it for now, enjoy the issue :)

- Tristan

Let’s talk about identity

Well, who are you? (Who are you? Who, who, who, who?)

I really want to know (Who are you? Who, who, who, who?)

Tell me who are you? (Who are you? Who, who, who, who?)

Because I really want to know (Who are you? Who, who, who, who?)

- The Who, Who Are You?

If you have never had to hand-code basic entity resolution in a dbt model, well…I cannot help but be envious. Entity resolution—one of the most dry subjects imaginable (you’re welcome)—is one of those problems that seems, on its surface, to not be that hard. And then you just keep digging and digging and never hit the bottom.

Let’s pause for just a second to define the term:

Entity Resolution is a technique to identify data records in a single data source or across multiple data sources that refer to the same real-world entity and to link the records together. In Entity Resolution, the strings that are nearly identical, but maybe not exactly the same, are matched without having a unique identifier.

The more specific version of entity resolution that deals specifically with humans is called identity resolution.

If you’ve spent any time looking at the customers table of an ecommerce store with guest checkout enabled, you’ll identify with this problem—buying something online without logging in is a nightmare for data analysts. Hopefully a customer uses the same email each time they buy from a store, but very frequently you’ll find that the exact same person uses multiple different emails over the course of their relationship with the site. Sometimes you can use other fields to recognize this (address, name) but people also move and even change their names. It’s imperfect and messy.

Without that login process establishing a clean user_id, figuring out which customer orders correspond to a single human is a surprisingly challenging problem. And the fairly simple version of the problem (unauthenticated ecommerce purchases) is the least of it…in large organizations with dozens-to-hundreds of systems touching customer data, mapping entities across systems is shockingly hard. In fact, in the modern data stack, there actually is no good answer today. Sure, you can do incredibly basic versions of this with SQL (and thus in dbt), but that’s a long way from what “good” looks like. I pointed this out in 2017 and, despite all of the activity in data tech since then, no one had really paid much attention to this problem in an MDS-native way…until just now.
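To make the "incredibly basic version" concrete, here is a minimal sketch of rule-based identity resolution. It is written in Python rather than SQL, and everything in it is an assumption for illustration: the order fields, the sample data, and the two matching rules (same email, or same name plus address). Orders that share any key are merged into one person using a union-find structure.

```python
# Hypothetical guest-checkout orders; field names and values are invented.
orders = [
    {"order_id": 1, "email": "sam@example.com", "name": "Sam Lee", "address": "1 Main St"},
    {"order_id": 2, "email": "slee@work.example", "name": "Sam Lee", "address": "1 Main St"},
    {"order_id": 3, "email": "slee@work.example", "name": "Samuel Lee", "address": "9 Oak Ave"},
    {"order_id": 4, "email": "pat@example.com", "name": "Pat Kim", "address": "5 Elm Rd"},
]


def resolve(orders):
    """Map each order_id to a person id (the smallest order_id in its group)."""
    parent = {o["order_id"]: o["order_id"] for o in orders}

    def find(x):
        # Walk up to the root, shortening the path as we go.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        ra, rb = find(a), find(b)
        if ra != rb:
            # Keep the smaller id as the root so person ids are stable.
            parent[max(ra, rb)] = min(ra, rb)

    first_seen = {}  # exact-match key -> first order_id that carried it
    for o in orders:
        keys = [
            ("email", o["email"].strip().lower()),
            ("name_addr", o["name"].strip().lower(), o["address"].strip().lower()),
        ]
        for key in keys:
            if key in first_seen:
                union(o["order_id"], first_seen[key])
            else:
                first_seen[key] = o["order_id"]

    return {o["order_id"]: find(o["order_id"]) for o in orders}


person_ids = resolve(orders)
print(person_ids)  # {1: 1, 2: 1, 3: 1, 4: 4}
```

Orders 1 and 2 link on name and address, and order 3 joins them through the shared email, even though it has a different name and address. The weakness is just as visible: every rule is an exact match, so a typo in an email or "Street" versus "St" silently splits one person in two.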

Open source Zingg is entity resolution for the modern data stack. I don’t know founder Sonal Goyal (yet!) but I do identify with her story—agreeing to do something for a client, realizing it was harder than you thought, figuring out a way to hack something together, immersing yourself in the problem space, getting obsessed, and iterating on the product until you find yourself (somehow?!) something of an expert.

Here’s my favorite bit:

How tough could it be? - the programmer in me thought. I took a sample of 25,000 records from the 2 datasets and wrote a small algorithm to match them, which crashed my computer! I had no basics in entity resolution at that time and did not understand how hard it could be to match everything with everything. I changed my approach and rewrote it. Let me throw a bit of hardware to this, I thought. So we built something on hadoop with some kind of fuzzy matching using open libraries, a refinement of my single machine algorithm..specifically tailored for the attributes we had and barely managed to get it running. We shifted to Spark, and it worked better. We were able to deliver something that saved us, but I wasn’t too proud.

The readme in the repo actually does a great job of explaining why the problem is hard and how Zingg attempts to solve it with two models: the blocking model and the similarity model.

1. Blocking Model

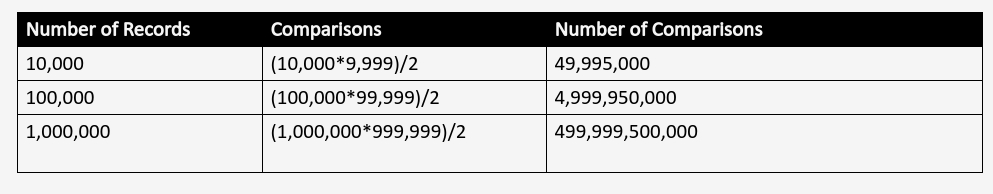

One fundamental problem with scaling data mastering is that the number of comparisons increase quadratically as the number of input record increases. Zingg learns a clustering/blocking model which indexes near similar records. This means that Zingg does not compare every record with every other record. Typical Zingg comparisons are 0.05-1% of the possible problem space.
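A rough sketch of why blocking helps. Note that Zingg learns its blocking model from training data; the hand-written key below (first letter of the name plus the first three digits of a zip code) is only an assumed stand-in, and the records are invented.

```python
from collections import defaultdict
from itertools import combinations


def all_pairs_count(n):
    """Number of unordered pairs among n records: n * (n - 1) / 2."""
    return n * (n - 1) // 2


def blocked_pairs(records, block_key):
    """Yield candidate pairs only for records that share a block key."""
    blocks = defaultdict(list)
    for rec in records:
        blocks[block_key(rec)].append(rec)
    for members in blocks.values():
        yield from combinations(members, 2)


# Hypothetical records; the fields are illustrative only.
records = [
    {"id": 1, "name": "Sam Lee", "zip": "10001"},
    {"id": 2, "name": "Samuel Lee", "zip": "10001"},
    {"id": 3, "name": "Pat Kim", "zip": "94110"},
    {"id": 4, "name": "Patricia Kim", "zip": "94110"},
    {"id": 5, "name": "Sam Lee", "zip": "60601"},
]


def simple_key(rec):
    # Assumed blocking key, not Zingg's learned model.
    return (rec["name"][0].lower(), rec["zip"][:3])


candidates = [(a["id"], b["id"]) for a, b in blocked_pairs(records, simple_key)]

print(all_pairs_count(len(records)))  # 10 comparisons without blocking
print(candidates)                     # [(1, 2), (3, 4)]
print(all_pairs_count(25_000))        # 312487500 pairs for a 25,000-record sample
```

The last line is the arithmetic behind the crashed laptop in the story above: 25,000 records means roughly 312 million pairwise comparisons. The sketch also shows the cost of a crude key: record 5 (a Sam Lee who moved) never gets compared with record 1, which is the kind of miss a learned blocking model is meant to reduce.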

2. Similarity Model

The similarity model helps Zingg to predict which record pairs match. Similarity is run only on records within the same block/cluster to scale the problem to larger datasets. The similarity model is a classifier which predicts similarity of records which are not exactly same, but could belong together.
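To give a feel for the pairwise step, here is a minimal sketch that scores two records that are not exactly the same. Zingg's similarity model is a trained classifier; this stand-in simply averages per-field string similarity from Python's standard-library `difflib` and applies an assumed threshold of 0.8. The field names and records are hypothetical.

```python
from difflib import SequenceMatcher


def field_similarity(a, b):
    """String similarity in [0, 1] after trimming and lowercasing."""
    return SequenceMatcher(None, a.strip().lower(), b.strip().lower()).ratio()


def record_similarity(r1, r2, fields):
    """Unweighted average of the per-field similarities."""
    return sum(field_similarity(r1[f], r2[f]) for f in fields) / len(fields)


def is_match(r1, r2, fields, threshold=0.8):
    # The threshold is an arbitrary assumption; a real model would learn
    # the decision boundary from labeled pairs.
    return record_similarity(r1, r2, fields) >= threshold


fields = ["name", "address"]
a = {"name": "Sam Lee", "address": "1 Main St"}
b = {"name": "Sam Leee", "address": "1 Main St"}  # typo in the name
c = {"name": "Pat Kim", "address": "5 Elm Rd"}

print(is_match(a, b, fields))  # True
print(is_match(a, c, fields))  # False
```

In practice this function would only be called on pairs that share a block, which is what keeps the number of calls manageable.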

To train these two models, Zingg ships with an interactive learner to rapidly build training sets.

The product requires Spark for data processing and can read from and write to most cloud datastores. That makes it architecturally more complex to run than much of the modern data stack, but it does presume that both the start and end points of the data live in the cloud. Fortunately, the data that needs to be moved is often people or companies, which is low cardinality compared to something like an events table (and thus not so bad to pipe from place to place).

Is this actually a problem?

It turns out it is. If this isn’t a problem for you, well, you probably work at a digital native company where users primarily log in to interact. Here’s how this plays out in the rest of the world:

(…) the US healthcare system could save $300 billion a year — $1,000 per American — through better integration and analysis of the data produced by everything from clinical trials to health insurance transactions to smart running shoes.

In financial services:

Connected customer data is also vital for establishing personal credit ratings and financial stability. These ratings could be used to make informed decisions on issuing loans, handing over credits, and detecting fraudulent activities. Fuzzy data matching is employed to connect the KYC data with customer investments. The KYC information along with the credit ratings can prove to be very helpful in detecting and avoiding debt frauds.

…and so on.

My own personal experience with this is in an unauthenticated ecommerce context, and the first time I ran into it I was pretty shocked at how quickly it ground the entire project to a halt. Everything about ecommerce analytics relies on a solid customer ID. Lifetime value? Repeat purchase rate? Marketing attribution? Without solving identity resolution you may as well just draw a revenue line for the business and then admit defeat, because that’s about all you can do.

Why is entity resolution so late to the MDS party?

There are other companies that do entity resolution—the one that gets brought up most frequently is Tamr. But, similar to the transition from traditional warehouse appliances to Redshift, these products generally require massive sales cycles and large contracts to use. One of the fundamental aspects of the modern data stack (IMO) is its ease of deployment and (relatively) permissionless adoption model.

So why did it take ~9 years from the launch of Redshift to get to an MDS-native solution here (albeit an extremely early-stage one)? I think it’s a hierarchy of needs thing. Just like you didn’t really need Fivetran until you had Redshift and Looker, and you didn’t really need dbt until you had all of the above, we just hadn’t gotten there yet as an industry. You have to experience the problem before you can solve it.

Plus, so many users of the modern data stack have, until recently, been digital native early-adopters. And that’s a group of companies for whom this problem tends to be less glaring. Just as large enterprises join the party with large numbers of poorly-keyed data sources, voila!, folks are now paying attention to the problem ;)

One of the open questions for me is how this gets slotted in, both logically and technically, to workloads that dbt runs for you. dbt isn’t well-suited to orchestrate non-dbt (and thus non-SQL) workloads today, but entity resolution ideally sits right in the middle of a typical dbt DAG: stage your tables, then run entity resolution, then proceed with the rest of your DAG. If you wanted to do this today you’d have to pursue what I would consider to be a suboptimal solution involving external orchestration and model selectors. Long-term I think there has to be a better answer here.
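For what it's worth, the external-orchestration workaround might look roughly like the sketch below. It assumes staging models carry a `staging` tag and that entity resolution is launched by some script; `./run_entity_resolution.sh` is a hypothetical placeholder, not a real Zingg command. The `--select` and `--exclude` flags are dbt's node selection flags (older dbt versions used `--models` instead of `--select`).

```python
import subprocess


def build_plan(staging_selector="tag:staging",
               er_command=("./run_entity_resolution.sh",)):
    """Return the three steps in order: stage, resolve entities, run the rest."""
    return [
        ["dbt", "run", "--select", staging_selector],   # 1. staging models only
        list(er_command),                               # 2. hypothetical ER job
        ["dbt", "run", "--exclude", staging_selector],  # 3. everything else
    ]


def run_plan(plan, runner=subprocess.run):
    """Run each step in order; check=True stops at the first failing step."""
    for cmd in plan:
        runner(cmd, check=True)


if __name__ == "__main__":
    # Print the plan rather than executing it, so the sketch runs anywhere.
    for step in build_plan():
        print(" ".join(step))
```

It works, but it also shows why this feels suboptimal: the dependency between the entity resolution output and the downstream models lives in the script rather than in the dbt DAG itself.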

I’m excited to see another capability get pulled into the MDS, if only to make sure I never have to write the bad version of this in SQL again. How long until there are three well-funded competitors all going after it? 😛

Elsewhere on the internet…

🧠 JP Monteiro outlines what I believe will be the future of BI in his first Substack post (welcome to the party!). I’ve never met JP and have never written about this publicly, but he’s clearly read my mind. The core ideas:

There will be many good and useful data analysis and consumption experiences, whether for different use cases or different personas or different verticals…whatever.

These will inevitably be built by a wide range of consumers.

They will all be “thin” solutions, insofar as they rely on all of the infrastructure from lower layers of the MDS and exclusively innovate on user experience.

They need to be “standardized” in some way so as to integrate nicely together into a single experience. JP presents this as a “portal”…I think of it as a single “feed”.

I truly believe this is what we will see come into existence over the next ~5 years. There’s a lot more to say about it and I would love to do a brain dump, hopefully soon now that it’s an idea out in the world (thanks JP!). I’m excited about dbt being an increasingly-mature part of the underlying infrastructure because I’m so excited about all of the downstream innovation this will unlock.

💡 Bobby Pinero writes that “curiosity is a practice.”

It’s not a skill. It’s something that’s ongoing and only as good as the practice itself and its surrounding feedback loops. It can be lost. It can be ignited. It can ebb and flow. But the more it’s practiced the more it compounds. Curiosity has momentum.

I desperately love this—it resonates deeply with me.

✊🏾 Benn’s most recent post is on the painful lack of diversity in the analytics community. Important.

👀 Fivetran raised a lot of money. And they bought HVR (also for a lot of money). I still remember the original HN job posting we saw while I was working at Stitch where we realized this new startup “Fivetran” was pivoting to compete with us. We’re all growing up! 😢

Fivetran could go public in two years “as long as we keep executing,” Fraser said.

💵 More funding news, this time from Bigeye. I’m a…bigfan.

🥞 Peter Bailis, CEO of Sisu Data, takes on consolidation. i.e.: aren’t there too many layers in the modern data stack right now? I think there’s a lot that the post gets right, although IMO Peter creates a delineation between data engineering and data analysis in his recommended approach that doesn’t actually exist and is harmful. Integrating these two workflows and teams is the most important thing that dbt does.

I had the unique pleasure of spending the first 10 months in a new Data Analyst role working on inter-temporal entity resolution!

The company was Ritual: a Toronto-based order-ahead-and-pickup-lunch app. When I joined the company, every new data hire was asked whether they had any good solutions for maintaining an accurate and current data set on all the restaurants that exist in every major city in the world. *ahem*

It was a proverbial "Sword in the Stone" problem: the company was like a village waiting for a hero to swoop in and pull the sword out. I certainly was NOT that lone hero, but I had some ideas and was put on a pod with a couple very talented people to try and collectively haul out the sword.

Our solution was built on a Python library called Dedupeio: https://github.com/dedupeio/dedupe, which I got turned onto by investigating ways to constrain the explosive complexity of pairwise comparisons.

But guess what? Even with a serious amount of model training from us and folks at Mechanical Turk, it still didn’t work that well. We still had duplicate restaurants and closed restaurants in our sales pipelines and our city launch priority estimates.

The best solution we got to was to pay for the most comprehensive data source, rather than try and blend 5 different, less-complete sources.

Reflecting now, it was an amazing problem to work on, but most of all because it taught me that sometimes there’s a limit to what an engineered solution can do: sometimes you just have to go about it another way.

Final thought: I’ll never forget talking about the problem with a good friend, on a sunny afternoon in a park, about a month into working on it.

I explained the intricacies of the problem: the incomplete and missing fields, the O(n^2) complexity, etc.

Finally, he looked at me and said: "Have you tried fuzzy matching on name: maybe that would work?"

I had to leave the park early.