Google TAPAS. The Data Science Job Market. Tecton. Data Science @ Lyft. Pinot Supports SQL. [DSR #225]

Another two weeks! I’ve become rather convinced that, while there will be attempts to start “re-opening”, my life is going to look roughly like it does currently for kind of a long time. I suspect that many of yours will too. It’s fortunate that many of us on this newsletter have that option, but it’s also a pretty intense thing to come to terms with.

Hoping that you and yours are well. Let’s talk about data!

- Tristan


This week's best data science articles

Google AI Blog: Using Neural Networks to Find Answers in Tables

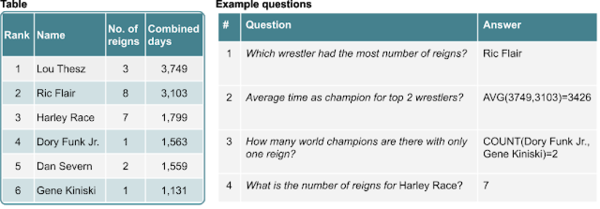

Querying relational data structures using natural languages has long been a dream of technologists in the space. With the recent advancements in deep learning and natural language understanding (NLU), we have seen attempts by mainstream software packages such as Tableau or Salesforce.com to incorporate natural language to interact with their datasets. However, those options remain extremely limited, constrained to specific data structures, and hardly resemble a natural language interaction. At the same time, we continue hitting milestones in question-answering models such as Google’s BERT or Microsoft’s Turing-NLG. Could we leverage those advancements to interact with tabular data? Recently, Google Research unveiled TAPAS (Table parser), a model based on the BERT architecture that processes questions and answers against tabular datasets.

This could potentially be a big deal. Take a look at the image above for examples of what TAPAS can do.

One of the biggest problems in data is the “last mile” problem: getting answers to front-line employees who don’t have a ton of data skills. This is the problem that sank a thousand ships…er…BI companies. But BERT + Google has about the best chance I could imagine.

While this may not be something you’d personally use, just imagine how it would change your life! Better last-mile tooling frees up data professionals to focus on more interesting work.
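To make the idea a bit more concrete: as Google describes it, TAPAS answers a question by selecting a subset of table cells and, optionally, an aggregation operator to apply to them. The sketch below only illustrates that output shape. It does not call TAPAS; the table, the question, the "selected" cells, and the `answer` helper are all hypothetical and hard-coded.

```python
from statistics import mean

# Hypothetical table; column names and values are made up for illustration.
table = [
    {"region": "West", "team": "Alpha", "revenue": 30},
    {"region": "East", "team": "Beta", "revenue": 45},
    {"region": "West", "team": "Gamma", "revenue": 50},
]

def answer(table, selected, aggregation):
    """Combine selected cells the way a cell-selection model's output could be read.

    selected: list of (row_index, column_name) pairs (hard-coded here, not predicted).
    aggregation: one of "NONE", "COUNT", "SUM", "AVERAGE".
    """
    cells = [table[row][col] for row, col in selected]
    if aggregation == "NONE":
        return cells
    if aggregation == "COUNT":
        return len(cells)
    if aggregation == "SUM":
        return sum(cells)
    if aggregation == "AVERAGE":
        return mean(cells)
    raise ValueError(f"unknown aggregation: {aggregation}")

# Question (hypothetical): "What is the total revenue of teams in the West region?"
# A model would predict these cells and this operator; here they are assumed.
selected = [(0, "revenue"), (2, "revenue")]
print(answer(table, selected, "SUM"))
```

The point of the sketch is that the front-line user never writes the filter or the aggregation; the model's job is to pick both from the question.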

What’s happened to the data science job market in the past month

I work at a company that mentors data scientists for free until they’re hired. Because we only make money when our data scientists get hired, we get a very detailed view of the North American data science job market and how it’s evolving in real time. We know who is getting hired where, how much they’re being offered, the details of the offer negotiation process, and lots of other data.

In this post I’ll break down what we’ve been seeing in the data science job market over the past month. I originally released some of this information in a tweetstorm last week, but I’ll be going into greater detail in this post.

H/T to Josh Laurito for this one; I hadn’t seen it until his newsletter. Good post. Its conclusions will likely only be relevant for the very near future as things are changing so quickly, but if you’re in the market right now this is a must-read. Also: if you’re currently looking, Fishtown Analytics is hiring (fully remote).

towardsdatascience.com

Tecton: Why We Need DevOps for ML Data

We need to apply MLOps practices to the ML data lifecycle to get features to production quickly and reliably.

This is Tecton’s launch / fundraise post (they raised $25m from a16z, congrats!). It spends most of its word count on the problems of ML workflow, with the core viewpoint being that DevOps best practices need to be applied to the machine learning lifecycle, especially for the parts of the lifecycle that are outside the model.

Readers of this newsletter will know that I wholeheartedly agree with this viewpoint, so I’m excited to see a well-funded team working hard on this problem. And I’ve been excited about Michelangelo, Uber’s platform from which Tecton grew, since it was first announced.

All of those kind words are, of course, setting up a “but…”. My worry about the approach here is that Tecton seems to be trying to solve too much. Take a look at the end of the post; it’s really a massive scope for a single product. DevOps tooling didn’t grow out of one company that had a single platform to manage the entire workflow; it grew out of a set of interlocking products that all shared a complementary mentality: containers, source control, CI, infrastructure-as-code, etc. I’ve been involved with companies in the past that tried to “do too much”, and I worry that that’s what’s happening here. The analytics world is very much adopting a best-of-breed approach to building products, while it seems that ML is going more integrated.

That said, some problems need to be tackled in a full-stack way, and maybe this is one of them. The founders know this problem space way better than I do, and I’d certainly love to live in the world that they lay out.

Lyft has assembled a team of 200+ Data Scientists with a variety of backgrounds, interests, and expertise in order to make the best possible decisions, and thus build the best possible product.

This post discusses the revamped structure of the data science team @ Lyft. I previously linked to their earlier post describing how they thought of the “data scientist” title (essentially, Lyft calls both analysts and scientists “data scientists”), and I wanted to revisit the topic here because I think the company does think deeply about this stuff. There are really two main interesting points:

They separate “Decisions” and “Algorithms” into separate titles. This might initially seem like they’re recreating the analyst / scientist divide, but I actually think that it’s a more useful distinction to focus on the outcome as opposed to the tooling. Often, data scientist means “I use Python” whereas analyst means “I use SQL”, which is silly. Creating roles based on the desired output is a much better idea.

They’re fully embedded. There is no central ticket-queue; all data scientists are attached to a particular area of the business, allowing them to develop deep context around the particular business problems. This is, IMO, now best-practice, but it’s still not a widely deployed model. In part, it’s just hard to staff a team that’s big enough to do it well, but at 200 data scientists, Lyft can do this.

Introducing Apache Pinot 0.3.0

I linked some time ago to a post that talked about the next generation of exciting OLAP data stores (ClickHouse, Druid, and Pinot), and I continue to follow this space with interest. This release from the Pinot team @ LinkedIn caught my eye for this specific improvement:

We moved from custom PQL to Calcite SQL. Apache Calcite is a popular open source framework for building databases and data management systems. It includes a SQL parser, an API for building expressions in relational algebra, and a query-planning engine. We have leveraged the Calcite SQL parser to parse queries in SQL format. However, Pinot continues to support only a subset of SQL; for instance, joins and nested queries are not supported. This is a design choice in Pinot to focus on providing fast analytics on a single table.

This is a big deal! SQL support allows Pinot to enter the analytics ecosystem in a first-class way.
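For a sense of what “a subset of SQL” focused on a single table looks like in practice, here is a minimal sketch of that query shape: filter, group, aggregate, with no joins and no nested queries. It runs against Python’s built-in sqlite3 as a stand-in, not against Pinot; the `page_views` table and its columns are hypothetical, and the release notes quoted above don’t confirm which individual clauses Pinot accepts.

```python
import sqlite3

# In-memory SQLite database used purely as a stand-in for an OLAP store.
conn = sqlite3.connect(":memory:")

# Hypothetical table and data, for illustration only.
conn.execute("CREATE TABLE page_views (country TEXT, device TEXT, views INTEGER)")
conn.executemany(
    "INSERT INTO page_views VALUES (?, ?, ?)",
    [
        ("US", "mobile", 120),
        ("US", "desktop", 80),
        ("DE", "mobile", 40),
        ("DE", "desktop", 60),
        ("FR", "mobile", 30),
    ],
)

# Single-table analytics query: filter + group-by + aggregate.
# No JOIN and no subquery, matching the restriction described in the quote.
query = """
SELECT country, SUM(views) AS total_views
FROM page_views
WHERE device = 'mobile'
GROUP BY country
ORDER BY total_views DESC
"""
rows = conn.execute(query).fetchall()
print(rows)
```

Because this is plain SQL rather than a custom dialect, the same statement is something a BI tool or a SQL-literate analyst could produce without learning anything store-specific, which is why the switch matters.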

engineering.linkedin.com

Capturing a sense of the impact of the global pandemic and social distancing measures on society, the economy, and the environment.

Well this may be the first time I’ve linked to a Google Slides presentation! It’s a great resource; you’ve likely seen several of these already (I’ve linked to a few) but it’s the most complete compilation of timeseries data I’ve seen yet on the world’s evolution since the beginning of the pandemic.

Line charts are pretty damn effective.

Speaking of COVID graphs…this was a new one to me. My conclusions after looking at several and playing with the tool that this author publishes are very much in line with what they said:

But for workaday data analysis, or straightforward data communication, I think it’s easier to read an old-fashioned x-y graph.

Certainly an interesting foray into visual experimentation though.

Thanks to our sponsors!

dbt: Your Entire Analytics Engineering Workflow

Analytics engineering is the data transformation work that happens between loading data into your warehouse and analyzing it. dbt allows anyone comfortable with SQL to own that workflow.

Stitch: Simple, Powerful ETL Built for Developers

Developers shouldn’t have to write ETL scripts. Consolidate your data in minutes. No API maintenance, scripting, cron jobs, or JSON wrangling required.

The internet's most useful data science articles. Curated with ❤️ by Tristan Handy.

915 Spring Garden St., Suite 500, Philadelphia, PA 19123