Is OSS the future of AI?

Do shocking performance gains of smaller open models in the last few months upset the Big Tech AI oligopoly?

I recently spoke on a panel focused on AI. The content bothered me, honestly. It was high-level and of a “neither right nor wrong” character…things that sound smart but were generic enough to be hard to disagree with.

On some level that’s fine—we need to be having conversations like “will AI take all the jobs” in rooms full of humans that aren’t steeped in the details of the innovation that’s happening. This is, I suppose, a part of the process.

But there are real, important, substantive questions that impact how the future is shaped and that we are capable of having informed conversations about today. My experience on that panel made me yearn for that more substantial conversation, and this particular newsletter was just what I needed. Not because it was necessarily correct, but because it took substantive positions on the right questions.

Which made me think: what are the most important questions of the moment? Below are what I believe to be the questions with the right level of specificity to actually be engaged with today; they’ll be the most impactful when deciding the next-3-years future. If we want to argue about something, let’s argue about these.

Will large models (in the hundreds of billions of parameters) necessarily dominate? Or is their size an impediment because it slows / limits iteration / experimentation? Can we get near-equivalent performance from smaller models?

What is the relative importance of fine tuning vs. the size / quality of the underlying foundation model?

Will open or closed win? This is largely an output of the above two questions: if smaller models, fast iterations, and lots of user-generated fine-tunings perform as well or better than massive closed models, then OSS has more of a shot than I previously thought.

What proprietary datasets will be important to train models for what specific use cases? Will it still be Google / Facebook with the world’s most monetizable datasets or will it turn out that high-value AI use cases are powered by different datasets?

Is it possible—literally, is it possible—to regulate open source AI? If cutting edge AI looks like trading small customized layers via open communities, that becomes quite challenging to figure out how to regulate, even if we decide that regulation is desirable.

Is it possible to regulate proprietary models in a way that doesn’t unacceptably damage international competitiveness of those countries that decide to impose such regulations?

What undesirable societal effects can we predict with confidence in the near term and are there realistic mechanisms to mitigate any of these effects?

My belief is that these questions will determine the trajectory of the next 2-3 years and that we can have intelligent conversations about them today.

Here’s a poor question: “Will AI put humans out of work?” Maybe! On some timeframe it is hard to rule that out. But it’s just not answerable today as a binary. Someone will point back at the history of technology over the past several hundred years and someone else will say “this time is different.”

Let’s move towards better conversations.

The article I linked above does just that. It has a take on the first three questions I mention above. It’s titled Google: "We Have No Moat, And Neither Does OpenAI." It’s a publication of a leaked document from inside of Google; the authors of the newsletter start with this:

The text below is a very recent leaked document, which was shared by an anonymous individual on a public Discord server who has granted permission for its republication. It originates from a researcher within Google. We have verified its authenticity. The only modifications are formatting and removing links to internal web pages. The document is only the opinion of a Google employee, not the entire firm. We do not agree with what is written below, nor do other researchers we asked, but we will publish our opinions on this in a separate piece for subscribers. We simply are a vessel to share this document which raises some very interesting points.

So: this isn’t gospel, but it’s an actual perspective from a real human at Google. And my guess is that if this got leaked, it’s something that at least a vocal minority of Google employees find interesting.

Let’s leave aside whose perspective this is for the moment and any credibility or lack thereof. Instead, let’s just evaluate the underlying claim.

The author is posing answers to my questions #1, 2, and 3 above. Its answers, in order:

Large models are not meaningfully better; today’s smaller models can achieve near-equivalent performance while having other superior properties (cost / iteration speed / accessibility).

Fine tuning is ultimately more important than foundation model quality.

Given the above, open source will dominate.

This is a cohesive perspective, and at a surface level it is certainly plausible. My initial emotional reaction to it is that I want this to be true, which is exciting but triggers my internal skeptic. The paper does seem to be quite well-cited, and I’ve now spent some time with many of the sources.

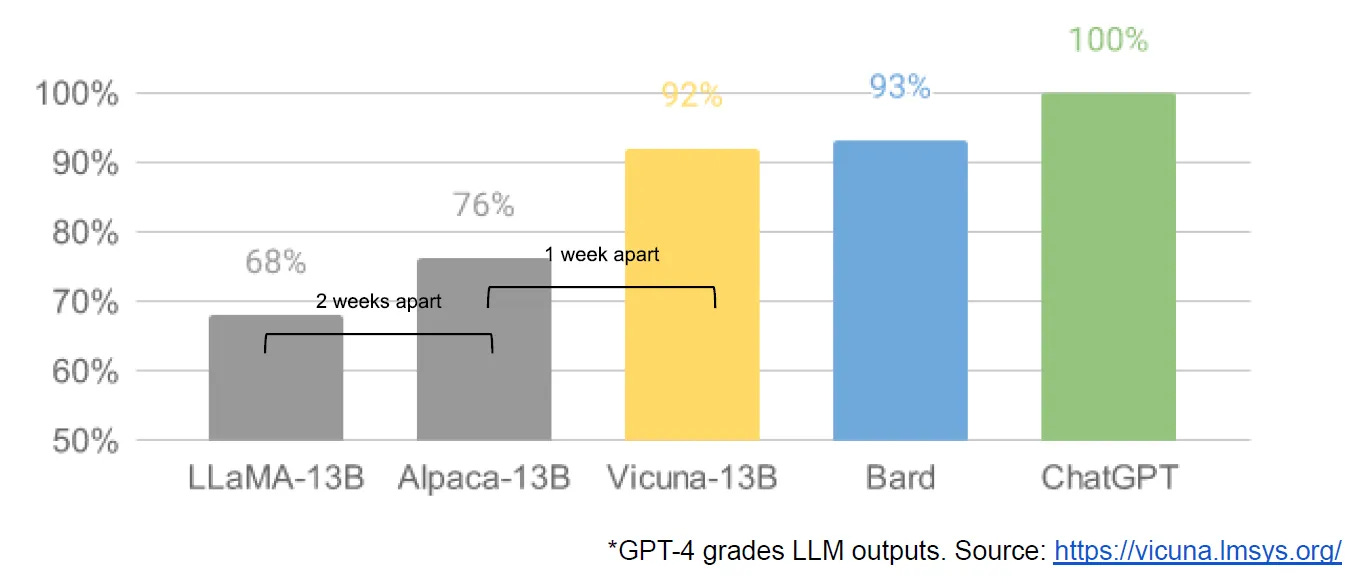

The headline image is here:

That’s a stunning improvement in only three weeks. What I found truly fascinating about this image is exactly how these scores were achieved. In short, the authors of the Vicuna model asked questions of all five of the above models, fed those responses into GPT-4, and asked it to rate them:

Our initial finding indicates that GPT-4 can produce highly consistent ranks and detailed assessment when comparing chatbots’ answers (see above example of GPT-4 judgment).

I replicated this experiment for myself. Here’s my prompt:

I am trying to evaluate two different AI assistants.

Let's call them Assistant 1 and Assistant 2. I gave both of them the following prompt:

Question: Compose an engaging travel blog post about a recent trip to Hawaii, highlighting cultural experiences and must-see attractions.

Below I will share their responses:

[paste in responses shared in Vicuna article]

Please rate each of these responses on a scale of 1-10 and provide the reasoning for your rating.

Here’s the response I got:

Assistant 1: Rating - 6/10

Reasoning: Assistant 1 provides an overview of the blog post's content, but the response lacks the details, enthusiasm, and engaging language that is expected in a travel blog. The text reads more like a summary than an actual blog post, which may not be as interesting or engaging for readers.

Assistant 2: Rating - 9/10

Reasoning: Assistant 2 delivers a much more engaging and detailed response, capturing the excitement and essence of a travel blog post. The response uses vivid descriptions, personal anecdotes, and specific recommendations, giving the reader a better sense of what a trip to Hawaii would entail. The post also incorporates some Hawaiian words and phrases, which adds authenticity to the post. The only point deducted is due to the potential for even more detailed descriptions or additional recommendations for experiences and attractions.

The idea that you can use the industry-leading model to evaluate performance of all other models is a 🤯 idea and certainly worthy of additional research. It feels problematic as a place from which to assert truth, but it’s certainly interesting.

Leaving that aside, the performance increase in the span of three weeks and the performance of smaller models relative to larger models are very impressive.

The original paper is honestly not that long and it’s quite accessible. The timeline at the end is particularly informative if you just want to get up to speed on recent developments. Rather than attempt to share my very neophyte thoughts here you’re better off reading the piece yourself. Whether or not this perspective is correct will be determinative for how the next 1-2-3 years plays out, and potentially far more. As my co-host on the Analytics Engineering Podcast, Julia Schottenstein, said in Slack when discussing this paper:

Open source is a powerful, unavoidable force in the tech industry. Whether you sell/create open source software yourself or your competitor does, you have to "deal" with it. There's no stopping the open source offering from being created, so take it as a given, and the strategy comes with how you embrace and respond to the open source force.

Yep. In some software categories, OSS dominates. In others it does not. The question is: which camp will AI be in?

From elsewhere on the internet…

Closer to our neck of the woods, AI is starting to do a passable job of analyzing data. Not the wrote task of writing analytical code given a specific prompt, but the creative task of being given a dataset and told “tell me what you find”:

I have similarly uploaded a 60MB US Census dataset and asked the AI to explore the data, generate its own hypotheses based on the data, conduct hypotheses tests, and write a paper based on its results. It tested three different hypotheses with regression analysis, found one that was supported, and proceeded to check it by conducting quantile and polynomial regressions, and followed up by running diagnostics like Q-Q plots of the residuals. Then it wrote an academic paper about it. (…)

It is not a stunning paper (though the dataset I gave it did not have many interesting possible sources of variation, and I gave it no guidance), but it took just a few seconds, and it was completely solid.

The article contains the full abstract, which is about what you would expect from an academic paper on census data.

One more pull quote from this article:

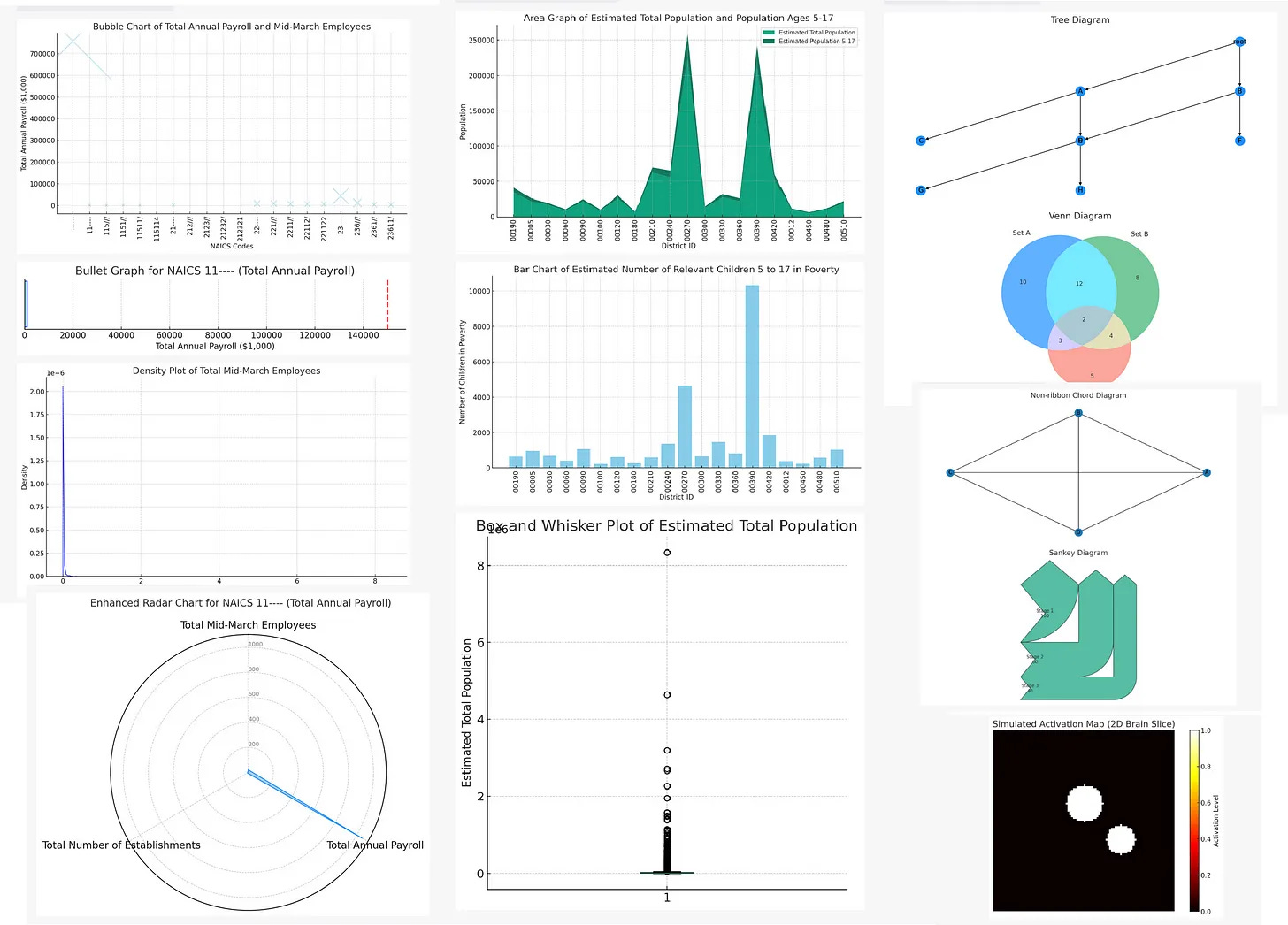

ChatGPT is going to change how data is analyzed and understood. It can do work autonomously and with some real logic and skill (though mistakes creep in they are rarer than you expect). For example, it does every data visualization I can think of. Below, you can see a few - I actually asked it to generate fake data for these graphs to show them off, and it was happy to do so.

Here’s the image:

All of this is based on two new ChatGPT capabilities: Code Interpreter (lets the model execute Python) and the ability to both upload and download files.

I have no doubt that the “query data in a database” plugin is not far behind…it’s fundamentally not that different than interacting with a 100mb csv. How will that change our profession?

—

No offense, obviously, because I appreciate that this post is a thoughtful accounting of the current situation with AI/LLM but man the mumbo jumbo levels here are at near crypto currency levels. This stuff is so far afield from anything that could be useful in the real world.

"7. What undesirable societal effects can we predict with confidence in the near term and are there realistic mechanisms to mitigate any of these effects?"

This one certainly suffers from a deficit of insights with actionable value. I took a crack at the first half of the point in a post on the economics of efficiency gains. Most of the article is about framing the problem, then describing the context, and then delivering specific predictions about the job market. Didn't bother going into solutions, but perhaps someone working towards some will find the analysis useful.

https://tarro.work/blog/trading-suits-for-sofas