Metaflow. "Why I'm Leaving Data." NYT Data Viz. SQL. Writing Clean Python. [DSR #209]

This week's best data science articles

Netflix: Open-Sourcing Metaflow, a Human-Centric Framework for Data Science

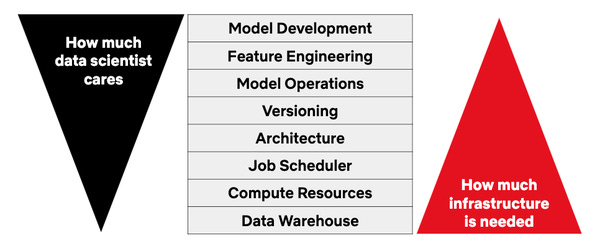

Is this a big new open source release from Netflix (🤩) or just Yet Another Data Pipeline Framework (😞)? Metaflow has certainly become widely used within Netflix, but only time will tell if it receives meaningful community attention. Here’s the most useful section of the post:

There are many existing frameworks, such as Apache Airflow or Luigi, which allow execution of DAGs consisting of arbitrary Python code. The devil is in the many carefully designed details of Metaflow: for instance, note how in the above example data and models are stored as normal Python instance variables. They work even if the code is executed on a distributed compute platform, which Metaflow supports by default, thanks to Metaflow’s built-in content-addressed artifact store. In many other frameworks, loading and storing of artifacts is left as an exercise for the user, which forces them to decide what should and should not be persisted. Metaflow removes this cognitive overhead.
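
To make the "content-addressed artifact store" idea concrete, here is a minimal sketch in plain Python. It is not Metaflow's implementation or API: `ContentAddressedStore`, `persist_step_state`, and `TrainStep` are hypothetical names invented for illustration, and the store lives in memory. It only shows the general pattern of hashing each instance variable's serialized bytes and using that hash as the storage key.

```python
import hashlib
import pickle


class ContentAddressedStore:
    """Toy in-memory store (hypothetical): artifacts are keyed by a hash of their bytes."""

    def __init__(self):
        self._blobs = {}

    def put(self, obj):
        # Serialize the object, then derive the key from the content itself.
        data = pickle.dumps(obj)
        key = hashlib.sha256(data).hexdigest()
        # Identical content maps to the same key, so it is stored only once.
        self._blobs.setdefault(key, data)
        return key

    def get(self, key):
        return pickle.loads(self._blobs[key])

    def __len__(self):
        return len(self._blobs)


def persist_step_state(store, step_obj):
    """Store every instance variable of a step and return a name -> key mapping."""
    return {name: store.put(value) for name, value in vars(step_obj).items()}


class TrainStep:
    """Hypothetical pipeline step that keeps data and model as plain attributes."""

    def run(self):
        self.data = [1, 2, 3]
        self.model = {"weights": [0.5, 0.25]}


store = ContentAddressedStore()
step = TrainStep()
step.run()
refs = persist_step_state(store, step)
```

The step author never decides what to persist; everything assigned to `self` is captured, and a later step could rebuild its inputs from `refs` alone.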

There is a lot of movement in this space at the moment; the article didn’t list newcomers Dagster and Prefect, both of which are doing interesting things.

“Why I’m Leaving Data”

This is an extremely insightful post. My guess is that the author is still struggling with her own thinking on the topic, and as a result the post is a bit of a brain dump. But there’s real gold in there, and it’s about topics that we don’t talk about much as an industry. Here are a few selected quotes:

you can deliver your best piece of analysis but there will be no guarantee that it will be commensurate with the impact you want it to have

you have no skin in the game

you don’t really work for anybody in the same sense as engineers report to an engineering lead

While being critical, the author is absolutely not negative on the field and points out a lot of the wonderful aspects of the job as well. The negative things she’s pointing to, though, are meaningful structural challenges to building a career in data today that I see manifested in many organizations. We really have not figured out how to build data teams / careers yet.

towardsdatascience.com

NYT: The New York City Subway Map as You’ve Never Seen It Before

Wow, this is impressive. You don’t necessarily need to click through the whole thing unless you’re a subway enthusiast, but I highly recommend spending at least 30 seconds exploring it. The visualization and overall user experience are bespoke and tightly interwoven with the intent of the piece itself, to an extent I’ve rarely seen. Very impressive work.

Modern Data Practice and the SQL Tradition

In this essay, I collected some of my thoughts on the topic of SQL and how it fits into modern data practice. It was motivated after a wave of disbelief I faced when at a presentation last week I claimed that modern data projects could benefit immensely by adopting SQL early on and sticking with it: a “start with SQLite, scale with Postgres” philosophy.

I had not read Florents Tselai before but am now an immediate fan for life. This post is a perspective from a data engineer / scientist who wants to see people use SQL more heavily in their data pipelines. My only difference in perspective is that I think it typically makes sense to skip Postgres and go straight to Redshift, BigQuery, or Snowflake. Same advantages, much greater scalability.
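
As a rough illustration of the "start with SQLite" half of that philosophy, here is a small sketch that uses Python's built-in `sqlite3` module to push an aggregation into SQL instead of application code. The `orders` table, its columns, and the `daily_revenue` helper are made up for this example and do not come from the essay.

```python
import sqlite3


def daily_revenue(rows):
    """Load (order_date, amount) rows into an in-memory SQLite table and total them per day."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE orders (order_date TEXT, amount REAL)")
        conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
        # The transformation itself is plain SQL, so it can later be pointed
        # at a larger database with little or no change to the query.
        query = (
            "SELECT order_date, SUM(amount) "
            "FROM orders GROUP BY order_date ORDER BY order_date"
        )
        return conn.execute(query).fetchall()
    finally:
        conn.close()


sample = [("2019-12-01", 10.0), ("2019-12-01", 5.0), ("2019-12-02", 7.5)]
totals = daily_revenue(sample)
```

Because the logic is expressed in standard SQL, the same `GROUP BY` query is the piece that carries over when the data outgrows a single file.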

Down with technical debt! Clean Python for data scientists.

I often talk about how critical (and overlooked) code quality is in data science, but I’ve never before seen a piece that provides such clear tactical guidance on the topic. If you want to immediately level up the quality of your code, focus on your process and tooling: linters, auto-documentation, type checking, etc.

As you incorporate these tools into your production process you’ll find your entire team constantly nudged in the direction of writing good code. Improving code quality isn’t achieved by a heroic refactoring effort, rather by consistent gradual improvement.

towardsdatascience.com

The single question that has a bigger impact than any other in marketing is “How did you hear about us?” This excellent post by a data scientist at Squarespace walks through the entire process they used to redesign their survey, which is responsible for routing many millions of dollars in annual ad spend.

engineering.squarespace.com

The internet's most useful data science articles. Curated with ❤️ by Tristan Handy.
