Quasi-mystical arts of data & the modern data experience.

What's this, another Roundup already? Why yes, yes it is.

The Roundup is going back to landing in your inbox weekly, with a twist. I'm Anna, your new co-pilot and I'll be tag-teaming these updates with Tristan every other week or so. So buckle up, put your chair backs in their upright positions, and turn on your reading lights — this issue is going to be a trip.

-Anna

The Modern Data (Developer?) Experience

Let's start at the end, with the Modern Data Experience:

To most people [...] the modern data stack isn’t an architecture diagram or a gratuitous think piece on Substack or a fight on Twitter. It’s an experience—and often, it’s not a great one. It’s trying to figure out why growth is slowing before tomorrow’s board meeting; it’s getting everyone to agree to the quarterly revenue numbers when different tools and dashboards say different things; it’s sharing product usage data with a customer and them telling you their active user list somehow includes people who left the company six months ago; it’s an angry Slack message from the CEO saying their daily progress report is broken again.

Benn paints a vivid picture above of a place every data professional has likely been before. The experience of working with and using data is broken — both for the humans producing data insights, and the humans who consume them.

The modern data experience is one of the most important pieces of writing over the past few months. This is not because it contains a 🪄 recipe to the perfect data experience — it doesn't, although it has some decent principles to start from. It is one of the most important pieces of writing because it takes a trend we have been seeing (the proliferation of data tooling that makes data management more complex rather than less so) and reframes it in the context of a familiar paradigm: the experience of using a product.

I want to take this one step further. If we should think of data as a product, then we should think of data as a software product. This in itself is not novel. Much has been written in this very Roundup about applying principles of software development to data workflows. We simply need to do much, much more of this.

Benn is asking a very important question: what does a first class data experience look like for an end user of a data product? The answer, it turns out, is not that controversial; but when the answer seems obvious, that's how you know the question is the right one. And it is only the first of several other important questions:

What exactly is a data product? We talk about running our data teams like product teams a lot — but do we all agree on exactly what a data product looks like? Are we the producers of a data product or its users?

When should data products be developed like micro-services? (No, we're not done talking about data mesh yet 😉)

How do existing roles on a data team map to those of a healthy unit responsible for a software product? When does this comparison make sense? Where does it fall down, and why?

What is a first class data developer experience? How is it different from the experience of a data end user? What are the right tools for each of these problem spaces?

As we answer these questions together, it is helpful to articulate the assumptions we are bringing to the conversation and ground them in familiar patterns from other industries — in my case, this will be software development.

1. What exactly is a data product?

I won’t attempt to answer this question definitively in one newsletter. But I do invite you to join me in thinking about the space of possible answers so that we can start developing a common language for talking about them. So, then, what is a data product?

Is it like a classic, intangible service? A common model of organizing data teams does involve a service-oriented analytics team that burns down tickets like a help desk and/or furthers the goals of an entirely different business unit via embedded experts. Let’s call this the Analytics Consultant model.

Is it like software as a service? We can imagine a different model here, in which an analytics engineering workflow produces data applications as a service. Here the “data product” is a generalized data application that is built for a broad organizational audience. Familiar examples of this will look a lot like interactive dashboards designed to be filtered, drilled down, and explored non-linearly (Customer 360s, anyone?). The problem (or maybe opportunity?) with this model is that cloud data service providers are readily taking up these generalized data apps as part of their product offerings (e.g. see Hightouch.io's latest Audiences feature as a formalization of something data professionals may have built in-house in the past). In which case, what is our product?

Finally, is it... insight? The argument for this model is that it is the most impact-oriented, but it is also the most squishy of the lot. If our goal is to help others build a deep understanding of an issue in a way that drives decision making, how do we measure success? And do we have everything we need within the ranks of a data team to influence this goal? In software production, the person responsible for driving alignment towards these types of objectives is a Product Manager. What does it mean to be a Product Manager of... insights? This orientation of a data product probably necessitates the collapse of BI and Data Science in the ways that Benn suggests in his article, but it also creates new fun challenges, like breaking down what Jason Ganz has called the quasi-mystical arts of data science 👏 into trustworthy and transparent systems.

2. When should data products be developed like micro-services?

Answering the first question (what is a data product?) should produce an obvious answer to this second question. Until we have that answer, the best we can say here is "it depends". If your answer to "what is a data product" is the Analytics Consultant model, your version of mesh is going to look a lot like data marts connected by a common data platform. But if your answer is "data as a service", your version of mesh may look more like micro-services architecture does in software engineering (that is, loosely coupled, fine-grained services of limited scope connected by lightweight protocols). And if your answer to “what is a data product” is “insight”, you probably don’t care much about the data mesh at all.

3. How do existing roles on a data team map to those of a healthy unit responsible for a software product?

I joked recently that an Analyst (or for the purist, she who produces data insights) is, in many ways, also a UX designer, especially when watching someone use something she’s built for the first time 😛. The joke was born of a real observation, namely that the type of Analyst we frequently hold up as the (very high) bar of success is often playing multiple shadow roles:

a UX designer ensuring the right information is obtained from their work product, and in the most direct, accessible and attractive way;

a systems engineer traversing the entire metric data lineage from source application to dashboard to understand where something goes wrong;

a persuasive writer translating findings into ideas for action and then selling those to a decision maker;

and increasingly, a product manager who spends their time articulating the ROI of investment in data products that aren't driven by questions of today, but will improve the experience of decision makers with questions of tomorrow.

It's little wonder we spend so much time tossing things over the wall at each other, and get such inconsistent results from our data investments. Instead of building cross-functional teams of experts in different areas, we ask our data professionals to be experts in all of them (and when this inevitably fails, we ask them to say no, a lot). Instead of clear contracts between tools that enable decentralized ownership and reduce the amount of context a team needs to understand to be successful, we ask our data professionals to be human interfaces, constantly negotiating a matrix of complex organizational dependencies.
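To make "clear contracts between tools" a little more concrete, here is a minimal sketch in Python of what such a contract might look like. Everything in it is an assumption made for illustration: the `orders` table, its columns, and the `contract_violations` helper are hypothetical and do not come from any particular tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Column:
    """One column promised by the producing team (hypothetical structure)."""
    name: str
    dtype: type
    nullable: bool = False


# Hypothetical contract published by the team that owns an "orders" table.
ORDERS_CONTRACT = [
    Column("order_id", int),
    Column("customer_email", str),
    Column("discount_code", str, nullable=True),
]


def contract_violations(rows, contract):
    """Return human-readable violations; an empty list means the rows conform."""
    problems = []
    for i, row in enumerate(rows):
        for col in contract:
            if col.name not in row:
                problems.append(f"row {i}: missing column {col.name}")
                continue
            value = row[col.name]
            if value is None:
                if not col.nullable:
                    problems.append(f"row {i}: {col.name} is null")
            elif not isinstance(value, col.dtype):
                problems.append(f"row {i}: {col.name} is not {col.dtype.__name__}")
    return problems
```

The point of a check like this is that a downstream team only needs to understand the contract, not the whole pipeline behind it.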

When the boundaries of your role and responsibilities constantly need to be negotiated, it can start looking like what you do is a lot of everything. “Wait, aren't you Ms. data generalist?” you may ask. Yes, yes I am. This isn't a call for more role specialization, but rather an acknowledgement that the way we distribute work on data teams is misaligned with the social contracts (i.e. the human interfaces) that underpin traditional software production. This should be giving us pause. I also don't think the answer here lies with better tools, or better tools alone, as Erik suggests in his specialization piece from a few weeks ago. We need to be careful not to reinvent the wheel where well-established fields of practice already exist (like UX).

Instead, we should be thinking more about what the right social contracts (human interfaces) are between the people who participate in the creation of a data product, and how best to facilitate them.

4. What is a first class data developer experience?

Another related and important assumption that we should bring to the surface: when we speak of concrete improvements to the modern data experience, are we describing improvements to the data product end user experience (whatever product here means to us 🙃)? Or are these improvements to the experience of the humans working on developing the data product? Let's call this second thing the Data Developer Experience.

This is an important distinction that Benn's current writeup doesn't make, and I would love to see our data community expand on it. It's important because it will help us situate some of our disjointed tooling very squarely in its right place in the workflow. Some examples:

Is data documentation a data end user experience or data developer experience? If you say, "why can't it be both?" perhaps it can, but the solutions for each audience will probably look different because they represent different goals. To borrow another analogy from the world of software development: technical documentation provides developers with references for function definitions, overall architecture, and contracts for technical interfaces with other parts of the application or external dependencies like modules/API calls. End user documentation, on the other hand, provides the customers of a product with manuals for getting started, common troubleshooting tips, and means of getting support. Right now, we imagine data documentation tools as doing all of these things, but do they need to?

What does 'trustworthy' data mean to a data product end user versus a data developer? Is it very different from what ‘trustworthy’ means to the end user of a software product versus a software developer? We often talk about trustworthy data as fresh data, but how many software application end users think about whether the information they are looking at in an application is stale? Usually, if this comes up, something somewhere else is very wrong. For a software developer, on the other hand, a trustworthy system is one that generates consistent and expected outputs for given inputs. The majority of the work that goes into software development is building trust between components through peer review, unit and integration tests, continuous integration, and yes, version control that leaves a digital trail. Test-driven development is an extreme example of putting trust at the center of the development process.
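As a small illustration of "consistent and expected outputs for given inputs", here is a hedged sketch of a unit test around a data transformation, written in Python. The `active_users` function and its record shape are hypothetical, invented for this example; the scenario borrows from Benn's active user list that somehow includes people who left the company.

```python
from datetime import date


def active_users(users, as_of):
    """Return the sorted emails of users who have not left by `as_of`.

    Hypothetical transformation: each user is a dict with an "email"
    and a "left_on" date (None if they are still around).
    """
    return sorted(
        u["email"]
        for u in users
        if u["left_on"] is None or u["left_on"] > as_of
    )


def test_active_users_excludes_people_who_left():
    users = [
        {"email": "ana@example.com", "left_on": None},
        {"email": "bo@example.com", "left_on": date(2021, 3, 1)},
    ]
    # Bo left six months before the report date, so only Ana should appear.
    assert active_users(users, as_of=date(2021, 9, 1)) == ["ana@example.com"]


test_active_users_excludes_people_who_left()
```

Written before the transformation itself, a test like this is the test-driven version of the same idea: the expected output is agreed on first, and the code has to earn it.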

I'm intentionally leaving you with more questions than answers. I want to hear from you! What assumptions do you hold when you speak of data products? What are important elements of the developer experience to you and how do they differ from the experiences of a data end user? Reply to this e-mail, hit me up on Twitter, or drop into the dbt Community Slack and let's talk about this some more.

From the rest of the Internet…

💸 Congratulations Databricks on your Series H!

📈 Metriql — a new metrics layer kid on the block! I'm excited to see more tools (❤️ Lightdash) riffing on dbt's YAML configurations for defining metrics, although metriql's choice of a Business Source license is an interesting one.

📊 This story of how one person made the choice between Data Science and Analytics Engineering, and what this means for what we value as an industry. An excellent read from Jason Ganz.

🧑‍💻 The role of git in an Analytics workflow. I'm not convinced that "Should we use git for exploratory Analytics work?" is the right question to answer to help scale analytics performance. Version control shouldn't be scary or an impediment to moving fast; it should be a background process one does not think about (see Data Developer Experience above 😏). But I do think this is another aspect of our workflow where we bring different assumptions to the conversation, and we should talk about those assumptions more explicitly. I think the right questions to get at those assumptions are: "When should we request peer review in the process of producing insights?" and "What is the atomic commit analog in the data world?" I’m excited to hear your takes!

🖥️ In The data OS, Benn responds to Tristan's writing from last week on Contracts. I'll save my opinions here for the blue bird site, and let Tristan dive into the meat of this next time 😉

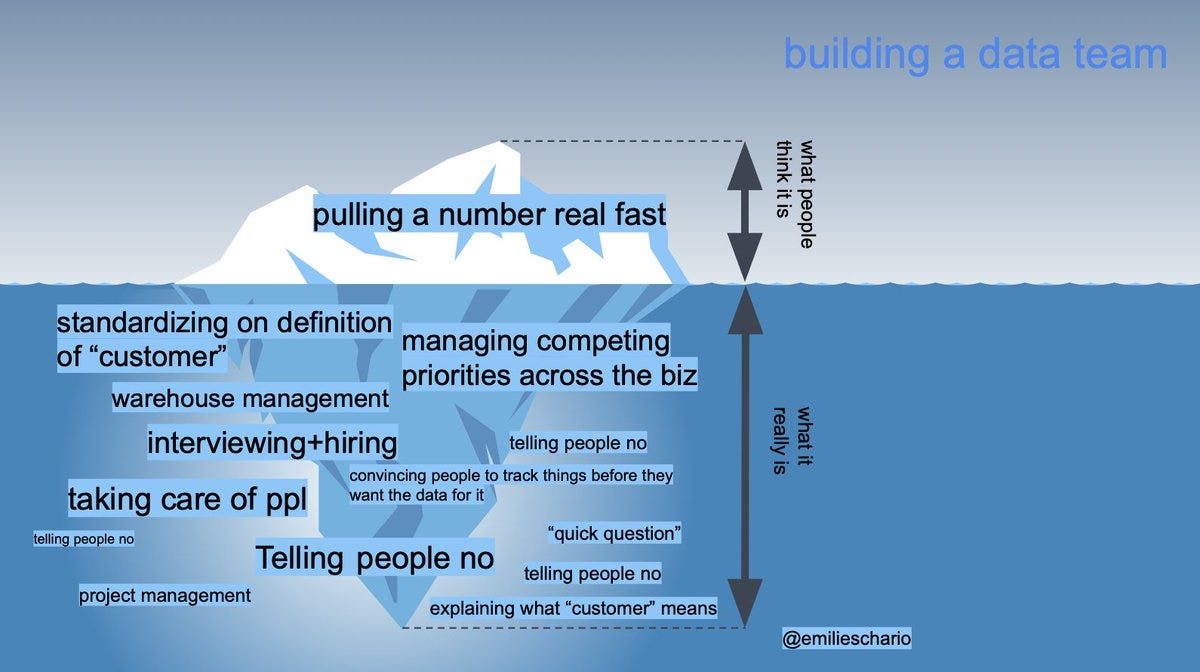

🖼️ Finally, every once in a while, someone makes a meme that really hits home, and recently that someone is Emilie Schario. For your weekend enjoyment: