The Data Diaspora.

The Evolution of Data Engineering. Two posts on AI and LLMs (wild stuff). My thoughts on notebooks.

Episode 34 of the Analytics Engineering Podcast just dropped! In it, Julia and I talk to Prukalpa Sankar and Chad Sanderson. Copying from the episode text:

WARNING: This episode contains in-depth discussion of data contracts.

😬 It is perhaps the best conversation on this topic that I’ve participated in, so I hope you enjoy it!

In other news, we’re wrapping our annual dbt Community survey. This helps us with planning as we figure out what investments to make over the coming year. If you haven’t had 5 minutes to participate yet, this is your last opportunity! Thanks to everyone who has already contributed :D

Enjoy the issue!

- Tristan

This is not really about data and it’s explicitly anti-useful, but it is Benn at his finest.

This is madness, and I’m being devoured by it. I wake up, I read the news, and I retrieve my jaw from the floor. Surely, this pace cannot sustain—I said in January of 2021, when the internet frothed up a mob to storm the capitol; I said in February of 2021, when the internet frothed up a mob to break the stock market; I said in April of 2021, when the internet frothed up a mob to put all of their savings in dogecoin; I said in April of 2022, when the internet frothed up the richest man in the world to buy Twitter.

The whole post is short, and is maybe the most spot-on summary of my experiences living through the past couple of years in our online ecosystem. It’s impressionism…built up dot after dot after dot; you step back and realize “holy shit that is wild.”

I don’t know about you, but I’m grateful that my plans for the day involve going on a walk in the woods. Also, go join a friendly Mastodon server. It actually is better.

Ok…I promise the rest of this issue will actually be focused on data.

—

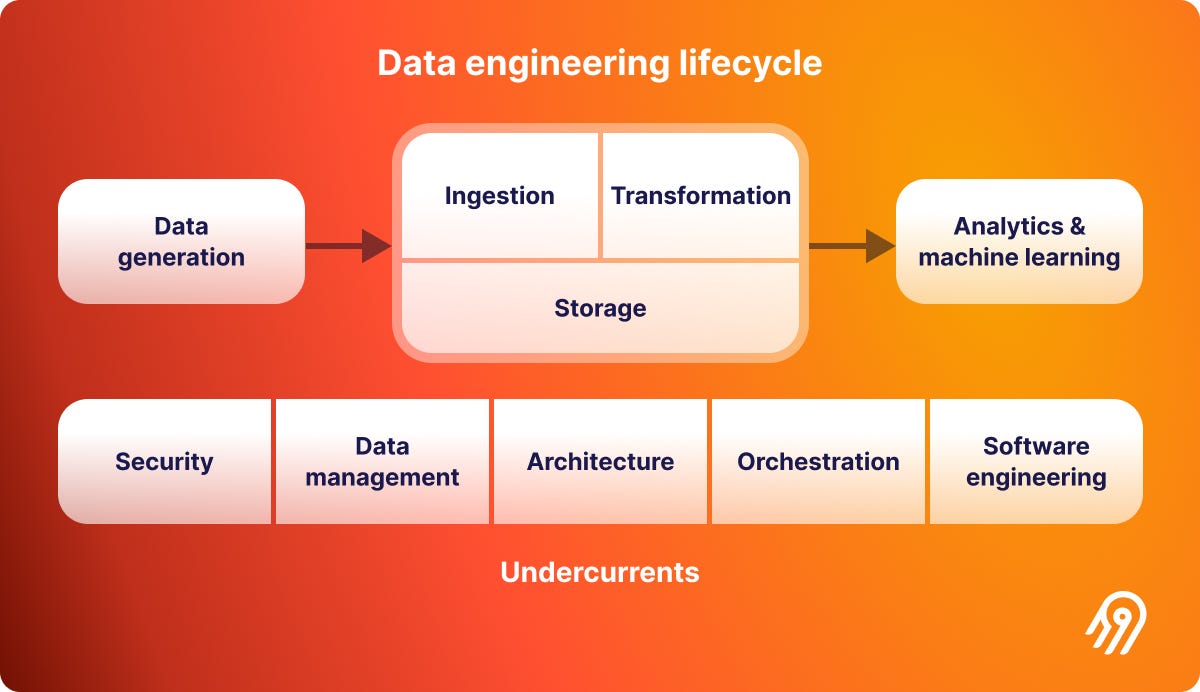

Thalia Barrera writes perhaps the best overview of the state of data engineering since Max B’s canonical post. If you read this newsletter regularly, you’ll likely find that a lot of the content here is well-known: increasing abstraction, focus on software engineering best practices, bringing together stakeholders… But this is such an important story, and as I find myself having conversations with folks further and further away from the “early adopter” part of the curve, it’s just so critical to have the whole story together in one place.

I cannot tell you the number of times I run into resistance from practitioners who feel that higher-leverage tooling is threatening (it shouldn’t be!). Or from leaders who aren’t familiar with the grand arc of history that is playing out here over the course of multiple decades. This future is already here, it’s just not evenly distributed. And it’s storytelling like this that brings everyone along.

—

This post by Mikkel Dengsøe really hits hard for me. I’m so interested right now in the question “who is really doing data work?” and this is one of the more interesting things I’ve seen on the topic. While Mikkel comes at it from the perspective of recruiting others in the org to join the data team, I don’t think that this is the most interesting reason to identify these folks.

I have this sense that the “data team” as currently construed is not something that will be commonplace in the future (let’s say a decade from now). I’m not ready to make this argument for real, but I’ve had a gut feeling about it for multiple years at this point.

The short version of the argument: not every data practitioner should sit on the data team. That wouldn’t make any sense. That’s like saying “the only people who should program should sit in software engineering.” This is not true. Programming is the practice of telling computers what to do. Practically everyone at the company should, at some point, know how to program at some level.

Programming is like numeracy…a skill that most humans didn’t have and then society realized that should change. My oldest will apparently learn how to program when she gets to third grade according to her school’s curriculum.

Using data is a core part of the skillset of every single person who makes decisions. I certainly agree that we do need specialists to sit at the very middle and do the most complex tasks, but I also think we should pay a lot more attention to the data diaspora.

—

I like to link to the State of AI report every year. Especially as I get more and more ensconced in the world of analytics engineering and further from the advances taking place in AI, I find that this report is a tremendous way to catch up on the things I may have missed from the past year. Here’s the exec summary:

—

This post made me 👀. Titled The Near Future of AI is Action-Driven, the post outlines how current tech, including Large Language Models (LLMs, discussed in the report above), makes some pretty wild stuff possible today. This is one of the most impressive demos of LLM capabilities I have seen (and there are many):

Pop out the screenshot and see what it’s doing…impressive.

I don’t include this here because I think you’re likely working directly with LLMs (although maybe you are!). Rather, it’s because I think that this type of workflow marries incredibly well with analytics engineering and I’ve spoken to several startups working at this intersection.

This type of question-answer flow works incredibly well on top of clean, validated datasets, but even more so on top of well-defined metrics. It’s nearly impossible to ask a question on top of a giant pile of data piped in from who-knows-where, all in raw form. This isn’t a technical limitation, it’s a limitation of language—we just can’t specify enough detail in a concise enough way to ask a single question and get a reasonable answer back if a large corpus of definitions hasn’t yet been built.

Definitions, in language, allow us to work at higher levels of abstraction. And what are analytics engineers doing but building a large catalog of definitions? We are fundamentally librarians, defining things, creating order from chaos. The more powerful the interactive question-answer loop is that sits on top of our work, the higher leverage that work is.

Related: what can large language models do that “smaller” models can’t? One author finds 137 distinct abilities.

—

This won’t be the first time you read a history of notebook programming and it likely won’t be the last. But I think it’s a really useful time to check in on the state of notebooks and prognosticate about the future. I’ll assume you’ve read (or at least scanned!) the above post before reading on, as it’s a good overview.

Here’s my belief: notebooks are just a mechanism to author a series of ordered computations. The notebook medium is not interested in what the computations are; it is instead a UX paradigm to develop / order / execute / present them. We tend to associate notebooks with Julia, Python, and R, the languages that gave Jupyter its name, but if you’ve used other, more recently developed notebook products you realize that this is no longer true.

Take Hex, the notebook that I am personally the most familiar with today. Cells are totally arbitrary computation. Cell A can be Python, cell B can be SQL, and cell C can be dbt-SQL (or dbt-Python!) run through the dbt Semantic Layer. One could as easily imagine a “spreadsheet cell,” where a dataset is loaded up into a spreadsheet interface and arbitrary operations are performed on top of it, only to be read back into downstream cells. Talk about a multi-persona data product!

The only rules in this world:

All computations must happen on top of a data frame interface

Each cell must be able to read in data from any data frame produced earlier in the graph

Each cell may output 0 to 1 data frames.
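To make those three rules concrete, here is a minimal sketch in Python. It is not how Hex or any real notebook product works. It assumes a "data frame" is just a list of dict rows, and the names `sql_cell` and `run_notebook` are hypothetical. An in-memory SQLite database stands in for the glue that lets a SQL cell read frames produced by Python cells.

```python
import sqlite3
from typing import Callable, Optional

# Assumption: a "data frame" is simply a list of dict rows.
Frame = list[dict]
# A cell receives every frame produced earlier and returns 0 or 1 frames.
Cell = Callable[[dict[str, Frame]], Optional[Frame]]


def sql_cell(query: str) -> Cell:
    """Hypothetical SQL cell: copy upstream frames into SQLite, then query them."""
    def run(frames: dict[str, Frame]) -> Frame:
        con = sqlite3.connect(":memory:")
        con.row_factory = sqlite3.Row
        for name, rows in frames.items():
            if not rows:
                continue  # nothing to load for an empty frame
            cols = list(rows[0].keys())
            col_list = ", ".join(f'"{c}"' for c in cols)
            marks = ", ".join("?" for _ in cols)
            con.execute(f'CREATE TABLE "{name}" ({col_list})')
            con.executemany(
                f'INSERT INTO "{name}" VALUES ({marks})',
                [tuple(r[c] for c in cols) for r in rows],
            )
        result = [dict(r) for r in con.execute(query)]
        con.close()
        return result
    return run


def run_notebook(cells: list[tuple[str, Cell]]) -> dict[str, Frame]:
    """Run cells in order; each sees only the frames produced before it."""
    frames: dict[str, Frame] = {}
    for name, cell in cells:
        out = cell(dict(frames))  # pass a copy so cells cannot mutate the graph
        if out is not None:       # a cell may output zero frames
            frames[name] = out
    return frames


notebook = [
    # Python cell: produces a frame from nothing.
    ("orders", lambda _: [
        {"customer": "a", "amount": 10},
        {"customer": "b", "amount": 5},
        {"customer": "a", "amount": 7},
    ]),
    # SQL cell: reads the frame the Python cell produced.
    ("totals", sql_cell(
        "SELECT customer, SUM(amount) AS total "
        "FROM orders GROUP BY customer ORDER BY customer"
    )),
    # A cell that outputs no frame (think: a chart or a text note).
    ("note", lambda frames: None),
    # Python cell again: reads the frame the SQL cell produced.
    ("big", lambda frames: [r for r in frames["totals"] if r["total"] > 10]),
]

frames = run_notebook(notebook)
```

The cell language is an implementation detail here; the only contract is the frame going in and the frame (or nothing) coming out.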

If you’ll note, this feels a lot like the rules of dbt:

All computations must happen inside of a single data platform

Each model must be able to read in data from any model earlier in the graph

Each model may output 0 to 1 tables
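The same shape can be sketched for the dbt-style rules, again as an illustration rather than dbt's actual implementation. The assumptions: SQLite plays the "single data platform", the model names are made up, and dependencies are listed by hand (dbt itself infers them from `ref()` calls). Python's standard-library `graphlib` supplies the ordering.

```python
import sqlite3
from graphlib import TopologicalSorter

# Hypothetical models: name -> (upstream model names, SELECT statement).
models = {
    "stg_orders": (
        set(),
        "SELECT 'a' AS customer, 10 AS amount "
        "UNION ALL SELECT 'b', 5 "
        "UNION ALL SELECT 'a', 7",
    ),
    "customer_totals": (
        {"stg_orders"},
        "SELECT customer, SUM(amount) AS total FROM stg_orders GROUP BY customer",
    ),
    "top_customers": (
        {"customer_totals"},
        "SELECT customer FROM customer_totals WHERE total > 10",
    ),
}


def build(con: sqlite3.Connection, models: dict) -> list[str]:
    """Materialize each model as one table, upstream models first."""
    graph = {name: deps for name, (deps, _) in models.items()}
    order = list(TopologicalSorter(graph).static_order())
    for name in order:
        _, select_sql = models[name]
        # Every computation runs inside the same database.
        con.execute(f'CREATE TABLE "{name}" AS {select_sql}')
    return order


con = sqlite3.connect(":memory:")
build_order = build(con, models)
top = con.execute("SELECT customer FROM top_customers").fetchall()
```

Because every model lives in the same database, there is no data to move between steps, which is the constraint the notebook sketch does not have.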

The hard part is making the interop work. dbt sidesteps this problem by using the cloud data platform as its processing layer, but most notebook products are unwilling to accept this constraint. Much of Hex’s tech goes into making the interop between different cells feel totally invisible…it just kinda works.

And that, to me, is the other part of the notebook transition: it has to be in the cloud. The type of infra required to deliver on a magical polyglot experience is truly non-trivial. Whether you’re using Arrow as the glue or DuckDB or Substrait, it’s real work to make all of these different compute paradigms play nicely together.

So, here’s my take on the future of notebooks:

Notebooks are a UX for authoring data flows, not a particular type of flow. This UX prioritizes interactivity and exploratory analytics.

Notebooks subsume all other types of computation as different cell types, and these cells can all exchange data with one another.

Delivering the ideal notebook experience requires a cloud-based product.