The Data Quality Issue. Shopify's dbt Extensions. A Data Engineer's Roadmap. [DSR #239]

I was on the a16z podcast recently with George Fraser and Martin Casado! It was a fun time :) Listen here. And a fun Twitter thread following up re: Data Mesh here.

This week’s issue, very much unintentionally on my part, focuses heavily on data quality. The topic certainly seems to be top-of-mind for a lot of folks throughout the industry. Hope you enjoy!

- Tristan

—–

❤️ Want to support this project? Forward this email to three friends!

🚀 Forwarded this from a friend? Sign up to the Data Science Roundup here.

This week's best data science articles

Shopify: How to Build a Production Grade Workflow with SQL Modelling

Shopify just wrote about their work with dbt!

I’ll show you how we moved to a SQL modelling workflow by leveraging dbt (data build tool) and created tooling for testing and documentation on top of it.

It’s been a real privilege to work with the team @ Shopify over the past months as they’ve really pushed dbt to its limits. Read how the org is rolling it out to transition some data processing out of PySpark and into BigQuery, and how they’ve extended its functionality when it comes to unit testing and CI. We’re highly aligned with their thinking on this stuff and really appreciate their willingness to move the ball forwards independently. Hope to see some of this stuff live for the entire community at some point!

Michelle and Chris, if you’re reading: I’d love to hear more about the incremental functionality you reference here:

dbt’s current incremental support doesn’t provide safe and consistent methods to handle late arriving data, key resolution, and rebuilds. For this reason, a handful of models (Type 2 dimensions or models in the 1.5B+ event territory) that required incremental semantics weren’t doable—for now. We’ve got big plans though!

This is an area that I have a long-standing interest in and I’d love to hear what you have cooking!

Programming: 10% writing code. 90% figuring out why it doesn’t work

Analyzing data and ML: 1% writing code. 9% figuring out why code doesn’t work. 90% figuring out what’s wrong with the data

Data Quality for Everyday Analysis

A while ago, a friend of mine presented a compelling analysis that convinced the managers in a mid-size company to make a series of decisions based on the recommendations of the newly-established data science team. However, not long after, a million dollar loss revealed that the insights were wrong. Further investigations showed that while the analysis was sound, the data that was used was corrupt.

You’re likely on board with the idea that pipelines need to be tested, so this post won’t be totally new ground. But: it’s a very practical post that contains a list of 6 dimensions of data quality and suggestions for how to test each of them. Probably the best overview I’ve read.

If you haven’t spent much time operationalizing data quality, this is a good intro. And also…if the above applies to you, you should get a full sprint on your team’s calendar between now and the end of the year to fix that.

towardsdatascience.com

Data Quality at Airbnb. Part 1 — Rebuilding at Scale

As a company matures, the requirements for its data warehouse change significantly. To meet these changing needs at Airbnb, we successfully reconstructed the data warehouse and revitalized the data engineering community. This was done as part of a company wide Data Quality initiative.

I had heard from multiple sources that data @ Airbnb, from its august early days, had run into some challenges of late. This post made me really happy: the company has invested heavily in updating some of its practices and technology. It’s not a super-detailed post, but I think it’s instructive just in talking about the challenges they’ve faced as they’ve grown. I can only imagine how much change and scale the org has gone through over the past ~6 years since the early days of Superset / Airflow.

Doing these types of overhauls is hard. Kudos, folks.

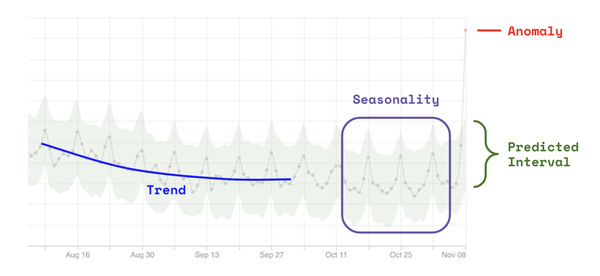

Dynamic Data Testing: Tests that Learn with Data

I love love love this post.

Dynamic testing strategies such as predicted ranges or unsupervised detection have some significant advantages. They are easier to set up and easier to maintain over time. They can also be used to test any data for any condition, regardless of the current quality of the data.

Most people don’t even have static assertions in their data pipelines. Those who do often find that they are brittle (✋), which makes it hard to maintain a commitment to them over time. This post walks through an example of a dynamic data test that can learn from data to minimize maintenance burden and false positives while still providing great test coverage.
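
To make the "predicted range" idea concrete, here is a minimal sketch. It is not the method from the post: the function names, the sample row counts, and the three-standard-deviation threshold are all assumptions chosen for illustration. Instead of hard-coding "row count must be between X and Y", the test learns its bounds from recent history.

```python
from statistics import mean, stdev


def learned_range(history, k=3.0):
    """Learn (low, high) bounds from past values: mean +/- k sample standard deviations."""
    if len(history) < 2:
        raise ValueError("need at least two historical values to learn a range")
    center = mean(history)
    spread = stdev(history)
    return center - k * spread, center + k * spread


def check_value(history, new_value, k=3.0):
    """Return True if new_value falls inside the range learned from history."""
    low, high = learned_range(history, k)
    return low <= new_value <= high


# Hypothetical daily row counts for one table (illustrative data only).
daily_row_counts = [1000, 1020, 980, 1010, 990, 1005, 995]

print(check_value(daily_row_counts, 1015))  # True: inside the learned range
print(check_value(daily_row_counts, 400))   # False: far below the learned range
```

Because the bounds are recomputed from whatever history you pass in, the test follows the data as volumes grow, which is the maintenance advantage the post describes. A real implementation would likely also need to handle seasonality and trend.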

Roadmap to becoming a data engineer in 2020

Huge image / infographic. IMO it’s both the best actual checklist and a really useful graphical presentation of it. Share this with junior practitioners.

Data Organization in Spreadsheets

HAH! This really tickled me. It’s a full-on academic paper on how to use spreadsheets not terribly. Read the below summary and tell me if you’ve found yourself giving exactly this same advice to coworkers, spouses, friends, family:

The basic principles are: be consistent, write dates like YYYY-MM-DD, do not leave any cells empty, put just one thing in a cell, organize the data as a single rectangle (with subjects as rows and variables as columns, and with a single header row), create a data dictionary, do not include calculations in the raw data files, do not use font color or highlighting as data, choose good names for things, make backups, use data validation to avoid data entry errors, and save the data in plain text files.

Thanks, I never need to have that conversation again. And obviously, given the recent UK / Excel / Covid issues, this isn’t widely followed advice today.
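
A few of the paper’s principles are mechanical enough to check automatically. Here is a rough sketch covering three of them (a single rectangle, no empty cells, dates as YYYY-MM-DD) for a spreadsheet exported to CSV. The function name, column names, and sample data are hypothetical; none of this comes from the paper itself.

```python
import csv
import io
import re
from datetime import datetime

ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")


def find_problems(csv_text, date_columns):
    """Return a list of (line_number, message) for rows that break the rules."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        return []
    header, body = rows[0], rows[1:]
    problems = []
    for line_no, row in enumerate(body, start=2):  # line 1 is the header row
        # Single rectangle: every row must be as wide as the header.
        if len(row) != len(header):
            problems.append((line_no, "row width differs from header"))
            continue
        for name, cell in zip(header, row):
            if cell.strip() == "":
                problems.append((line_no, f"empty cell in {name}"))
            elif name in date_columns:
                # Require the exact YYYY-MM-DD shape and a real calendar date.
                try:
                    if not ISO_DATE.fullmatch(cell):
                        raise ValueError(cell)
                    datetime.strptime(cell, "%Y-%m-%d")
                except ValueError:
                    problems.append((line_no, f"{name} is not YYYY-MM-DD"))
    return problems


# Hypothetical export with one bad date, one empty cell, and one short row.
sample = (
    "subject,visit_date,weight_kg\n"
    "A1,2020-10-05,71.2\n"
    "A2,10/05/2020,\n"
    "A3,2020-10-07\n"
)

for line_no, message in find_problems(sample, date_columns={"visit_date"}):
    print(line_no, message)
```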

Thanks to our sponsors!

dbt: Your Entire Analytics Engineering Workflow

Analytics engineering is the data transformation work that happens between loading data into your warehouse and analyzing it. dbt allows anyone comfortable with SQL to own that workflow.

The internet's most useful data science articles. Curated with ❤️ by Tristan Handy.

Powered by Revue

915 Spring Garden St., Suite 500, Philadelphia, PA 19123