Would you like your data stack bundled or unbundled?

Also in this issue: data team branding, snapshotting your metrics, building a culture of transparency, notebooks in production and even a little data mesh.

I nearly threw in the towel this week. I mean, what else is there to say after this tweet?

We had a good run, friends, but this is basically all of data Twitter that isn’t shitposting or cats.

And then Teej goes and puts the final nail in the coffin of the thread with:

This is, of course, a reference to the very viral post on the Unbundling of Airflow from the fine folks over at fal.ai, and to Dagster.io’s feature announcement, which they aptly called Rebundling the data platform.

A quick refresher:

Gorkem Yurtseven wrote earlier this week that

“a diverse set of tools is unbundling Airflow and this diversity is causing substantial fragmentation in [the] modern data stack.”

He compared the trend to the classic example of the unbundling of Craigslist by the likes of Airbnb, Thumbtack, Zillow and others. Unbundling means taking a platform that aggregates many different verticals, such as Craigslist, and splitting off one or more services into stand-alone businesses that are more focused on the experience of that niche customer base. Sometimes, as was the case with Airbnb, that experience is so much better that it eclipses the market cap of the original business.

Gorkem argues that the same unbundling we saw with Craigslist is now happening with Airflow — different companies in the modern data stack are picking off pieces and focusing on making them better as standalone applications.

I like this comparison because it makes it obvious to me how the repercussions of unbundling differ for Craigslist and Airflow:

With Craigslist, the customer doesn’t actually need to use all the available verticals at once — you are unlikely to need to rent temporary housing, look for a job and be in the market to buy a house at the same time. You just want whatever is best at solving your problem in the moment. This is why even Airbnb is being unbundled by still more niche services: the customer has no strong reason to be locked into the platform. If a better one comes along, the cost of switching is relatively trivial. Your previous usage history doesn’t need to move with you to the new platform because it is unlikely to be predictive of future behavior — if you’re looking for a job or a date, the options you viewed one or two years ago are unlikely to still be available or relevant to you. Nor do you have to maintain integrations with an ecosystem of other Craigslist ‘unbundlers’, as you might with a tool like Airflow.

With Airflow, the ‘verticals’ (Extract, Load, Transform, Metrics, BI, Reverse ETL) are tightly interdependent. They must all exist in some form in an organization in order to manage data produced by the business and turn it into knowledge about the organization. Furthermore, it’s important that the ingestion layer talks to the transformation layer, and that the transformation layer talks to the metrics layer and so on. Unbundling Airflow is a little more like unbundling Photoshop into separate image cropping, raw photo processing and color correcting applications — not the best user experience if you’re a photographer and need to do all three things.

In fact, Nick Schrock of Dagster.io argues just that: the unbundling of Airflow leads to a poor user experience because it forces your data team to split their attention and maintain a growing cornucopia of tools:

“It results in an operationally fragile data platform that leaves everyone in a constant state of confusion about what ran, what's supposed to run, and whether things ran in the right order.”

In response, Dagster has built very cool new functionality that moves back towards “bundling”: the ability to consolidate EL and T steps in the pipeline and observe them alongside Dagster operations and workflows.

The question I find myself asking, however, is this: how much of the modern data stack does one human, or one team, actually need to observe at once? My gut says that while all of the Airflow verticals are needed in one organization, they aren’t all used by one team or even one set of customers.

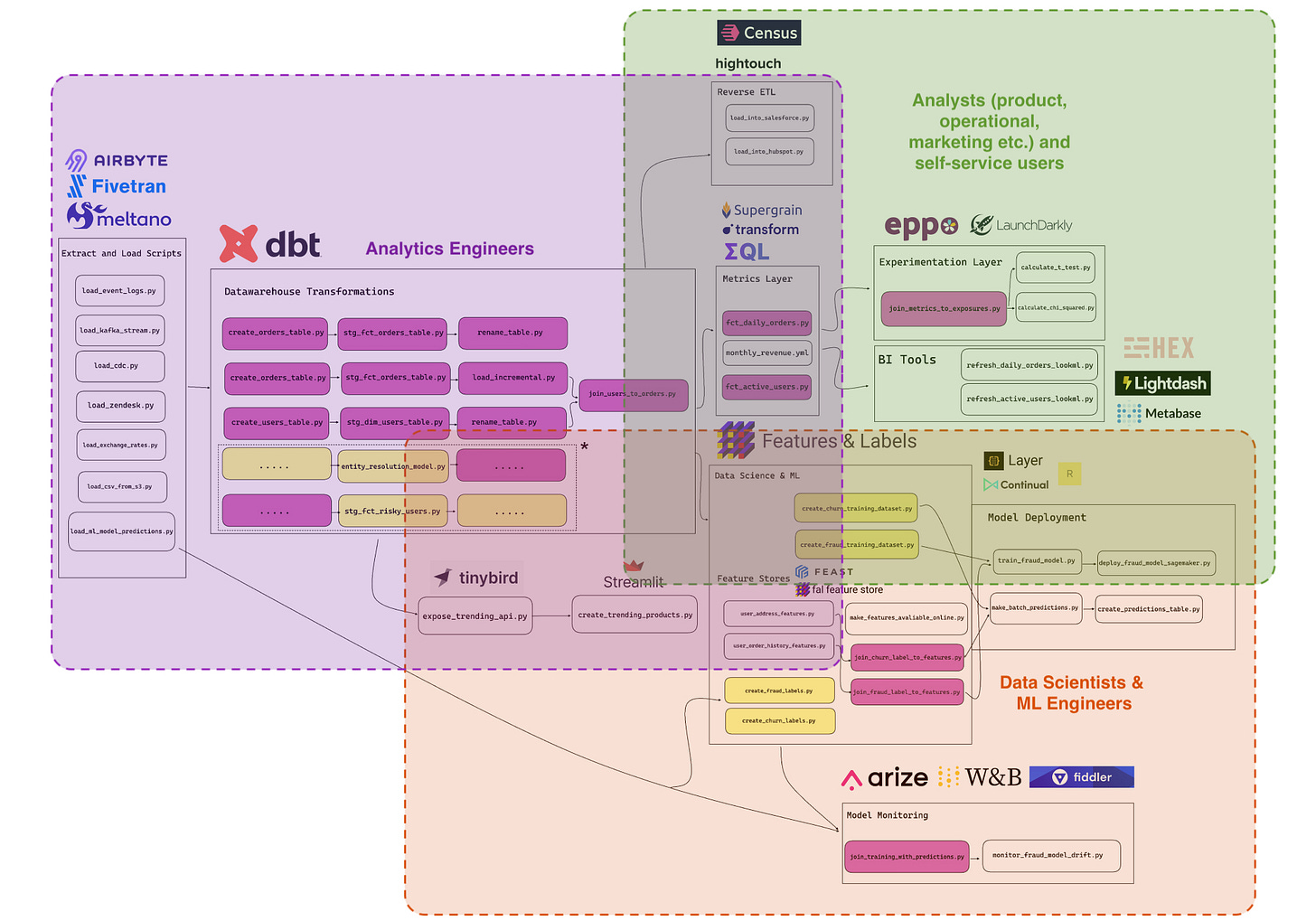

I made my own (very basic) version of Gorkem’s diagram to illustrate the point:

Analytics Engineers (our purple folx) spend the majority of their time organizing knowledge in two worlds — EL and T. To gain empathy for how their customers will use their data models, it helps for Analytics Engineers to understand how Data Scientists and Analysts work, what data is important to them and how it is shaped (let’s call this empathy organizational APIs). However, they’re unlikely to want/need to own ML infrastructure or be prescriptive about the specific workflows Analysts use. Their desire for observability will be limited to understanding who is affected by failures in different parts of the transformation layer — something the transformation layer already facilitates.

Analysts (regardless of their focus area) will spend the bulk of their time helping the business use data to make decisions. Their work will move a lot faster if they are able to make small changes to the transformation layer, or debug a source of data inconsistency and apply a quick fix. They may even venture into the Data Science space if they can rely on a nicely modeled transformation layer to save on data munging time. But Analysts are unlikely to need to know about every dbt run, just the health of the data in the area they are currently concerned with. The same is true of self-service analytics users who explore data without support from a dedicated analyst: these folks are concerned with the data relevant to their specific business area, along with its quality and timeliness, and they are likely to want to reverse ETL some subset of data into the tools they use day to day (either independently or with help from an operations team).

Data Scientists will want to access a tidy, organized data model layer to power their feature stores and reduce the amount of time they need to spend munging data. They may want to make specific changes to EL or T layers to support their work, but their observability will be limited to the data they are using for their models. Your Data Science team is unlikely to spend a lot of time wondering if the core business metrics are up to date, because that’s not what they do :) They may occasionally work with the BI layer but in a very limited capacity compared to an Analytics team.

In short, there’s some overlap in the types of things these different humans might want to observe, but it’s far from 100% and usually sits nicely in the intersection of their workflows and interests.

And so when you look at the modern data stack from the lens of the jobs to be done with data in an organization, unbundling doesn’t look at all like “lunacy”. Not to me at least. To me it looks like it is actively empowering distributed data competency.

I already said this at the beginning of this post: Sarah will henceforth always have the last word, because everything can truly be reduced down to a debate about centralization vs decentralization:

If usage of data in your organization is centralized in the data team, and your knowledge graph is maintained by only one team, the unbundling we’re seeing in the modern data stack may feel a little like “lunacy”.

But if you are looking to decentralize the use of data to make decisions in your organization, to put data in the rooms where decisions are being made, then this unbundling is nothing short of empowering because it’s helping to meet people where they are and make the data available in the tools they use to make decisions.

I’ll wrap this up with an excellent quote from Andrew Escay (Analytics Engineering extraordinaire at dbt Labs), who hit the nail on the head this week as we mused about Airflow vs. the modern data stack:

“I think people are interpreting the “unbundling of Airflow” as “to hell with airflow”. I think Airflow offers a great amount of flexibility and, especially for teams on a tight budget, allows you to get away with a lot more without having to pay for multiple tools to get similar value. The trade off is that the different “unbundled” tools are definitely better for the job in their respective fields, but I don’t think it’s […] “the path to getting rid of airflow”. […] Optionality I think is key here, and the real value I see is that these new tools that “unbundle airflow” are lowering the barrier to entry for a lot more teams that don’t have as much python engineering experience and help empower more people to build robust data stacks without investing a lot of time learning programming languages from scratch.” (emphasis mine)

What do you think? Do you agree with Andrew and me, or with Nick? :) Hit me up on Twitter or reply to this e-mail — I want to hear from you!

Elsewhere on the internet…

Data Team Branding

By Emily Thompson

Emily is a staple in my weekly reading list by now (check out her principles for self-serve analytics that I covered in detail two weeks ago).

This week she brings us some real talk on data team branding, that is, how to solve this particular problem:

While they were raving about the data person they worked most closely with, it surprised me how often stakeholders would simultaneously express feelings that the rest of the data team must be dropping the ball, given that more of their stuff wasn’t getting prioritized. It was almost as though they didn’t understand or could really explain what the data team was responsible for, even though every other data person had a respective stakeholder who felt just as enthusiastic about them. (emphasis mine)

Much like her other writing, Emily offers some very concrete examples of what to do if you find yourself here, like:

a biweekly Data Gazette to showcase your team’s work and stimulate data insight discovery (and how she made it happen with relatively little extra overhead!); and

notes on approachability that should be called “Let them eat carrot cake”:

Marissa Gorlick, a former Data Scientist on my team who was exceptionally skilled at creating outstanding relationships with stakeholders, once said “We just need to give them some carrot cake.” She meant that sometimes we have to give stakeholders what they want in order to build that trust, but I especially liked the metaphor of getting them to eat their veggies (statistical rigor) by wrapping it in a delicious confection (who doesn’t want cake?). This is less of a tactical suggestion, but as data leaders, encouraging the team to serve up carrot cake is a healthy cultural shift to improve approachability.

Read the rest, it’s excellent as usual!

Snapshot Your Metrics Models

By Erika Pullum

I’m so so so excited to see a post from Erika Pullum. She always delivers insightful writing, and this week it’s a return to a deeply technical space that she manages to make entirely approachable: taking some old advice for how to snapshot important company metrics over time (and why this is important!) and updating it for new dbt features.
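Erika’s post builds on dbt features; what follows is not her implementation but a minimal, stdlib-only Python sketch of the underlying idea, assuming a metric value can be restated after it was first reported (for example, because of late-arriving data). Each snapshot is appended together with the date it was taken instead of overwriting the previous value, so what was reported at any point in time stays recoverable. The names `MetricSnapshot`, `take_snapshot` and `value_as_of`, the metric name and the revenue figures are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class MetricSnapshot:
    """One recorded value of a metric (hypothetical structure for illustration)."""
    snapshot_date: date  # the day this value was captured
    metric: str          # e.g. "monthly_revenue" (hypothetical name)
    period: str          # the reporting period the value describes
    value: float


def take_snapshot(
    history: List[MetricSnapshot],
    current_values: Dict[Tuple[str, str], float],
    snapshot_date: date,
) -> List[MetricSnapshot]:
    """Append the currently computed values; earlier snapshots are never modified."""
    for (metric, period), value in current_values.items():
        history.append(MetricSnapshot(snapshot_date, metric, period, value))
    return history


def value_as_of(
    history: List[MetricSnapshot], metric: str, period: str, as_of: date
) -> Optional[float]:
    """Return the latest value recorded on or before `as_of`, or None if none exists."""
    candidates = [
        s for s in history
        if s.metric == metric and s.period == period and s.snapshot_date <= as_of
    ]
    if not candidates:
        return None
    return max(candidates, key=lambda s: s.snapshot_date).value


if __name__ == "__main__":
    history: List[MetricSnapshot] = []
    # Hypothetical: January revenue is first computed as 100.0 on Feb 1...
    take_snapshot(history, {("monthly_revenue", "2022-01"): 100.0}, date(2022, 2, 1))
    # ...then restated to 92.5 on Feb 15 after late-arriving refunds.
    take_snapshot(history, {("monthly_revenue", "2022-01"): 92.5}, date(2022, 2, 15))

    print(value_as_of(history, "monthly_revenue", "2022-01", date(2022, 2, 10)))  # 100.0
    print(value_as_of(history, "monthly_revenue", "2022-01", date(2022, 3, 1)))   # 92.5
```

Appending instead of updating in place is the key design choice in this sketch: the latest snapshot still gives you the current number, while the older rows let you answer “what did we report at the time?”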

Leaders show their work

By Ben Balter

I have learned so much from Ben about how to work in asynchronous environments and about the value of writing things down to promote transparency in decision making. This article belongs on my wall, right in front of my monitor, to be reviewed at regular intervals, because it captures so nicely a fundamental principle that enables scaling collaboration in distributed settings (and beyond!): writing things down, and doing it well.

Transparency is an important value for dbt Labs and we practice this internally, but I haven’t seen anyone put this quite so well as Ben has in this piece.

Must read!

Notebooks in Production with Metaflow

By Hamel Husain

I want to make it a point in this newsletter to connect the discourse in analytics circles and machine learning circles. Hamel’s article is just such a crossover from ML land: Metaflow is an open source MLOps platform that bundles (ha) most of what you need to build production-grade workflows. Now, those workflows can include notebooks as nodes placed anywhere in the process.

This is such a great example of meeting humans where they are. Notebooks are the lingua franca of the Data Science world, and this change lets folks do more in the tools they are already comfortable with.

Data as Code — cutting things smaller

By Sven Balnojan

Finally, Sven brings us an article documenting how his team went from your regular centralized data model to data applications. Sven talks about what you gain and lose in this approach, and I really appreciated this simple data mesh implementation grounded in specific examples and diagrams.

That’s it for this week! 👋
