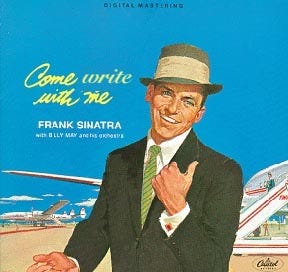

Come write with me

On breaking free from pandemic patterns, how Newton's laws relate to data storytelling, why data says Dune should have won Best Picture, and the Lagos data conference that broke data Twitter.

It’s always fun to write these late on a Saturday night as I watch data Twitter bubble with a conversation that is still very much developing.

The source of the 🌶️ this week:

One of my favorite voices, who never fails to give a solid reality check, had this to say about Benn’s piece:

I don’t recognize myself in this article.

I’m letting that sink in.

This has been the theme of the last week: there’s been one (and only one) major data community gathering in person since the start of the pandemic, and when folks looked around the room, not everyone liked what they saw. In particular, we stopped recognizing ourselves. As a result, we’re now asking: what’s going on? Why is it that when this community got together, the room looked a lot more like data tooling vendors and VCs than the data practitioners we all expected to see? Is this why content lately has trended towards the future of data tooling, and is this a sign of things to come?

I don’t think so. I think a large part of what we’re seeing in in-person gatherings and in our online writing is the after-effects of the pandemic on our community of practice.

First, I think what we’re seeing are the effects of a social network that really hasn’t changed very much since before the start of the pandemic. We’ve all likely had varying success in keeping up with our existing social relationships during the pandemic, but I bet that universally we’ve had a very hard time making new relationships online. While some things are starting to come back to life, access to new social connections is not the same for everyone. For instance, folks with kids born before the pandemic who are still too young to be vaccinated continue to be isolated out of sheer necessity. The pandemic still sucks. (If you really want to dig into how severe the social impacts of isolation can be, here’s a good article on the COVID generation.)

Second, the pandemic was full of life-changing career events for many humans. Some businesses permanently shut down or saw significant layoffs. Some folks were pushed to the point of burnout in unsafe environments and are seriously reconsidering their careers. In the early months of the pandemic, people spent a huge amount of time at home learning how to do new things. People had time to consider what is important to them, and what they want next in their lives and careers. Meanwhile, a shockingly booming stock market, growing valuations and a record number of IPOs have increased liquidity in a variety of ways, and a new generation of startups is emerging, a lot of them focused on solving data problems.

So the social network of data practitioners that gathered recently remained more or less the same, but a large number of folks changed jobs or career directions. And a non-trivial number of those humans (myself included) chose to join a technology company solving data problems, rather than continue to solve data problems as an IC or a data leader.

The question is, why?

The cynical take is we’ve failed at making actual change in our organizations and we’re hiding in the land of tooling. Those who can’t do, build tools for others? ;)

But the other take, the one I personally prefer, is that we’ve sat around long enough in our sweatpants during the pandemic, and decided to go out and fix some things that were broken… at scale. For every data team.

And while some of our roles and focus have changed, the practice of data hasn’t gone anywhere. Over 128K data jobs at various levels were posted last week in the US alone.

Just as a noticeable number of humans have gone on to join or found data startups, many people have learned a bunch of new skills and transitioned into a career in data during the pandemic. Still others have stepped up into leadership roles vacated by the very humans who left to join these data startups.

This is a normal cycle in an industry. The folks who gathered last week are just one cohort. The folks starting new jobs in applied data today are another. All of us are going to have to work really hard over the next year to blur the lines between these different social networks.

Here’s what I’m doing personally:

Discovering new communities I haven’t been a part of before. (👋 Philly data people: gimme a shout if you’re doing anything in person in April.)

Organizing in-person data events that are less tool-specific and more inclusive of folks with a variety of data careers and experiences.

Digging through lists of Medium and Substack articles looking for new voices and ideas (a few first-time posts below!).

Encouraging more folks to write about their experiences as data practitioners. (Hat tip to Claire Carroll for calling this out as an important part of the development of our industry.) As Tristan said, writing about data IC work is hard, but generalizing from it is possible. Writing about data team management and influence is also hard, and it is very important, because many data leaders don’t have other humans in their organizations to form a community with.

So come meet new people with me.

And come write with me.

Want to write more but don’t know what to say? Check out some post ideas here, or reply to this e-mail and let’s jam on them together.

Have a lot to say but need a second pair of eyes to help it land? Reply to this e-mail or find me in the Roundup channel in the dbt Community Slack.

Elsewhere on the internet…

It looks like we’re not yet done talking about code vs no-code! :) A few articles on the topic this week:

In Under the hood, Sarah Krasnik takes the black-and-white code/nocode debate and forces us to think in shades of grey. Specifically, Sarah points out that abstractions are not created equal: some are more useful than others. Sarah also poignantly explores what happens when we pick the wrong level of abstraction for a given audience of data users.

In Synergy is the Future, Will Weld takes the “code/nocode is a spectrum” analogy all the way into the world of bright colors. Specifically, Will coins the word ‘purpleshifting’ to refer to data tools’ ability to help data practitioners with strong subject matter expertise (remember red people?) learn more blue (software engineering best practices). How? By combining the power of code-based workflows with logical abstractions on top of them that let new users gently dip their toes into the waters of analytics engineering. Will is riffing off what Tristan wrote about a few weeks ago, and I love Will’s particular emphasis on the end user in his writing. What I’m less sure of is whether YAML is the new lingua franca for self-service data users ;) The argument for giving folks a configuration-focused code language is an intriguing one, since it aligns more closely with what folks are trying to get done in their workflows. On the other hand, it’s easy to lose sight of the objective and end up with two semantic layers stacked on top of one another: one in SQL, and another in an “easier language”.
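To make the “two semantic layers” worry a bit more concrete, here’s a minimal sketch, assuming a hypothetical YAML-style metric definition (written as a Python dict so the example runs without extra dependencies) that gets compiled down to SQL. The config keys, the `compile_metric` function, and the `orders` table are all made up for illustration; this isn’t how Will’s post or any particular tool defines metrics.

```python
from typing import Any


def compile_metric(config: dict[str, Any]) -> str:
    """Compile a hypothetical YAML-style metric config into a SQL string.

    The keys used here (name, table, aggregation, column, group_by, filters)
    are illustrative assumptions, not any real tool's schema.
    """
    agg = config["aggregation"].upper()
    measure = f"{agg}({config['column']}) AS {config['name']}"
    dims = config.get("group_by", [])

    # Dimensions first, then the aggregated measure.
    sql = f"SELECT {', '.join([*dims, measure])} FROM {config['table']}"

    filters = config.get("filters", [])
    if filters:
        sql += " WHERE " + " AND ".join(filters)
    if dims:
        sql += " GROUP BY " + ", ".join(dims)
    return sql


# The "easier language": what a self-service user would edit (hypothetical).
metric = {
    "name": "total_revenue",
    "table": "orders",
    "aggregation": "sum",
    "column": "amount",
    "group_by": ["order_month"],
    "filters": ["status = 'completed'"],
}

print(compile_metric(metric))
# SELECT order_month, SUM(amount) AS total_revenue FROM orders WHERE status = 'completed' GROUP BY order_month
```

In a setup like this, anything the config can’t express (a window function, an awkward join) has to be written in SQL underneath it, and now there are two layers to keep in sync.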

This was Will’s first Substack post — go read it, it’s :chef-kiss-fingers-emoji:!

Speaking of code… turns out, software engineers love DAGs too! This week Redpoint announced an investment in a tool called (drumroll) Dagger that takes a messy software supply chain (translation: a janky pastiche of configuration-as-code workflows) and standardizes it. Think Fivetran + dbt but for actual DevOps, or an open-source version of GitHub Actions ;) Neat!
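For anyone coming from the data side, the DAG idea carries over directly: a pipeline is a set of steps plus “depends on” edges, and the tool runs each step only after everything it depends on has finished. Here’s a minimal sketch using Python’s standard-library `graphlib` (Python 3.9+); the step names and the `run_waves` helper are hypothetical, and this is not Dagger’s API or how it actually schedules work.

```python
from graphlib import TopologicalSorter

# Hypothetical build/deploy steps, each mapped to the steps it depends on.
pipeline: dict[str, set[str]] = {
    "install_deps": set(),
    "lint": {"install_deps"},
    "unit_tests": {"install_deps"},
    "build_image": {"lint", "unit_tests"},
    "deploy": {"build_image"},
}


def run_waves(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group steps into 'waves': every step in a wave has all of its
    dependencies satisfied by earlier waves, so a wave could run in parallel.
    prepare() raises graphlib.CycleError if the graph isn't actually a DAG."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # steps whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)  # pretend each step ran successfully
    return waves


for i, wave in enumerate(run_waves(pipeline), start=1):
    print(f"wave {i}: {wave}")
# wave 1: ['install_deps']
# wave 2: ['lint', 'unit_tests']
# wave 3: ['build_image']
# wave 4: ['deploy']
```

Grouping steps into waves shows why the DAG matters: `lint` and `unit_tests` don’t depend on each other, so they could run side by side, while `deploy` has to wait for everything upstream.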

How NOT to reproduce analog inequalities in digital spaces by Jenna Slotin and Janet McLaren is an inspiring write-up on how merely making technology accessible (in this case, a major undersea internet cable landing in Africa) is not enough to solve for inequality in access to digital resources and spaces. This was a timely read, and it has many parallels to the conversations we’ve been having about better data tooling. Yes, if we build awesome hybrid ‘purpleshifted’ code and no-code solutions, we’ll make it a lot easier for many humans to work with data. But just as it’s not enough to run a giant cable across an ocean, there’s more work to do to unpack the collective pain, as Seth Rosen calls it, that’s behind our optimistic dreams about data tooling. Who are the humans we want to give faster and better access to data insights, and what’s actually stopping them from getting there today?

Usually, when something goes viral on Twitter, it’s for all the wrong reasons. But every once in a while, something goes viral because it’s really freaking cool. Case in point, this Tweet from David Abu:

4 days later, David and his team had a plan, a team, a prospectus, and sponsorship interest. See you in Lagos in November? ;)

This was a heavy issue for what was April Fools’ week, and I’m a little disappointed there weren’t nearly enough modern data stack pranks (dbt Labs raises a Series E, anyone?). So I found some fun data write-ups instead:

Chalamet Coughs, Dune Wins: Predicting Best Picture Winners Using Coughs and Sneezes. The authors of this paper stand by their methodology despite the actual Academy Awards results, and I think the potential for future work here is an absolute gas.

Can we apply Newton’s Laws of Motion to Data Visualization? This is actually a really insightful article. Hear me out: the author takes Newton’s three laws of motion and translates them into really solid advice for communicating with data. It looks like the author’s first post, so it’s a little rough around the edges, but the first few paragraphs got more than a chuckle out of me, and there are some genuine ‘aha moments’ in here!

How to Analyze Formula 1 Telemetry in 2022 — A Python Tutorial. Formula 1 is back for another season, and there’s a Python library you didn’t know you needed to tell you that Lewis Hamilton will take the championship again in 2022. This is actually a fun way to learn how to work with data, and a nice change from endless Titanic examples.

That’s it for this week! 👋
