Modern data spaghetti. Brave data decisions. Data work ROI.

How the modern data stack has fueled a data sources soup and why this makes analysts work on "hard mode". Who needs to pay attention when analysts need to be brave. Emoji equations.

Greetings! 👋

This week’s issue once again changes up the format of this newsletter because there’s so much great content out there 🎉. This week you’ll find two meaty topics:

taming modern data spaghetti with an Open Source Data OS;

a riff on getting in the room and bravely making data informed decisions.

You’ll also find some shorter commentary on the ROI of data work and git workflows in reverse ETL.

Enjoy the issue!

-Anna

Psst! Smol plug: Tristan and I are speaking at Beyond.2021: Life after dashboards this week. You’ll hear from Tristan in the keynote first thing on Tuesday, and then from me a little later on the “Rise of the Analytics Engineer” alongside some friends. See you there! 👋

🍝 Modern Data Spaghetti and the open source data OS

It’s getting harder to be a successful data analyst. On the one hand, your success is measured by the speed with which you deliver insight. On the other, in a bid to “break down data silos” and “accelerate the speed of data-informed decision making”, you now need to contend with changes in an ever-increasing array of data sources and destinations as you go about trying your darndest to do your job.

This week, Petr Janda shares a delightfully real story of what happens when someone DMs you on Slack and says: “Does this chart look right to you?” 🧐 Highly recommend reading the whole piece — it’s fantastic.

My favorite part though, is this diagram:

I love this diagram because it so precisely illustrates why analytics work is getting harder: today it is ~10% delivering insight and ~90% going deeper into the matrix of data models and data sources to figure out “Can I trust this number?”. And thanks to the abundance of the modern data stack, the list of things to monitor and validate is constantly growing and changing.

Ian Tomlin, in an article that pairs very nicely with Petr’s, calls this phenomenon data spaghetti.

A quick recap of what data spaghetti is and how we got here:

Businesses are using more SaaS tools to get work done across departments like Sales, Marketing, Support. ✔️

A big promise of today’s data ecosystem is breaking down pesky data silos created by these SaaS tools, and the way we do that today is through more cloud data services for repeatable, predictable data workflows like ingestion, reverse ETL, etc. ✔️

This newfound and tantalizing ability to ingest ✨ all the data ✨ from ✨ all the SaaS tools ✨ a business uses today comes with, rather predictably, a new challenge: growing complexity in our pipelines. ✔️

Growing complexity in our pipelines leads to a new set of data problems: the need to resolve identities across these many systems, overly intricate data quality and availability monitoring across a growing number of sources and destinations, and (also rather predictably) adding even more tools to the SaaS stack that now help you manage these problems too. 👀

The end result is a procurement and business systems nightmare for everyone involved. Or as Petr puts it: “What a mess!” 🍝

👏👏👏 Yes, yes it is.

While many of us viscerally feel the pain of this problem, we’re not all (yet) coming to the same conclusions on what this means for the future of our data systems. Right now, we at least agree that we have a few paths in front of us:

Option 1: the data mesh, in which we resolve this complexity by splitting our systems into multiple vertical pillars — each with a much tighter scope — and creating interfaces between them.

✅ Pros: better vertical integration within a pillar means it’s easier to figure out why something “looks wrong” and “what changed”. Pillars get to choose their own tools without giving their head of IT a massive business systems integration headache.

🤔 Cons: developing shared constructs across these pillars for insights purposes (like resolving identities in a users table) remains very very hard.

Option 2: the data fabric (sometimes called the application fabric), in which we resolve this complexity through one massive Platform-as-a-Service to rule them all (kind of like Datadog for data).

✅ Pros: one API, one vendor, one bill.

🤔 Cons: only one API, only one vendor, and one very large bill.

Option 3: the open source data OS, in which we resolve this complexity through open standards and shared protocols that enable different tools in the modern data stack to talk to one another.

✅ Pros: you’re not limited by the number of vendors you work with because the burden of integration and standardization is on them, and not on you, the data professional. Open standards and consistent definitions across vendors mean that you can contribute to a growing ecosystem of solutions that stitch various workflows together — and benefit from the ones that others create.

🤔 Cons: it’s not immediately obvious how procurement will work. We still need to agree on and build some of the pieces to make this real! 😬

My money (rather literally) is on Option 3 ;) What about yours?

Elsewhere on the internet…

👂On making decisions and getting in the room

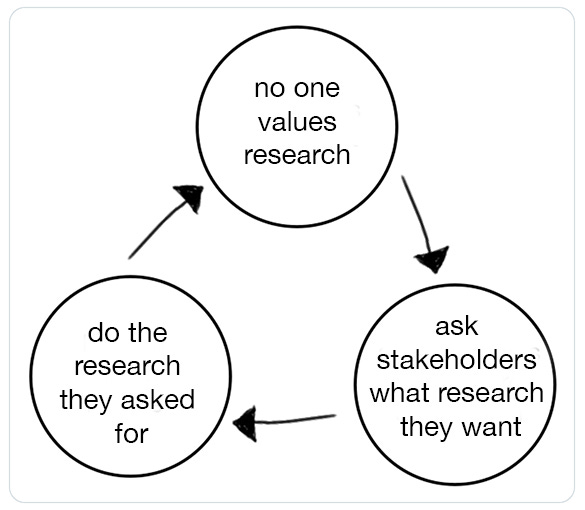

Cindy Alvarez cautions us this week about the trap we may fall into if we allow our stakeholders to drive our roadmaps. Though her focus is on qualitative research, the experiences she describes mirror those of analytics quite well. Take her chart and replace the word ‘research’ with ‘data’ or ‘insights’ and you’ll have hit the heart of an ongoing values misalignment within organizations that want to be data-informed.

Cindy’s recommendation to break out of this cycle is this:

The next step is that, as researchers, we need to eavesdrop and interrupt.

We need to skim docs and chat rooms and listen in on meetings until we hear some of those unchallenged assumptions and unsolved customer mysteries, and then volunteer to seek out answers. It’s great if stakeholders jump in and collaborate on that research; and even if they don’t, we aren’t asking for permission.

Our job is not to convince others of the value of research – it’s to create value through uncovering information that can change minds.

If this sounds familiar, then you have also done time on an analytics team in a semi-large organization 😉 Analysts spend a lot of time simply trying to get into the room. When data-informed decision making isn’t happening, we put it on ourselves to bust down the proverbial doors and communicate in a way that changes minds.

This feels very different from the world Benn describes in his post this week, “Does data make us cowards?”. In Benn’s post, we see a world in which folks jump on data availability to help avoid making a difficult decision — a polar opposite experience. Where, you might ask, is the disconnect?

Both are actually symptoms of a similar problem. In Cindy’s example, a hypothetical organization is at a stage of maturity where assumptions are made without prior study. In Benn’s, the organization badly wants to be data-informed, but the incentives to do it well are not fully in place. In both situations, we ask analysts/data scientists/researchers to step up and “be brave”:

In those moments, when the path ahead is foggy and people’s opinions are divided, it doesn't matter how smart we are if we're at the head of the table. When the room turns to us, they aren’t looking for a final insight to nudge one option ahead of the other. They need to know our opinion. They need to see our conviction. They need us to be courageous.

Without that courage, we’re just clever puppets, dancing to whatever tune our data sings to us, hoping nobody sees the wires we’re submitting to. To be real leaders, we have to prove ourselves brave enough to know when to walk on our own.

I completely agree that it is incredibly important for the folks in the room who have developed an educated opinion based on data/research to step up and share those opinions. I also think it is incredibly important for leaders to pay attention to systems of incentives, power and accountability in their organizations — asking analysts to step up and “be brave”, to “eavesdrop and interrupt” is asking them to apply guerrilla warfare tactics against a major opposition. It is a smell that can help point to the shape of that opposition in your organization — pay attention to it!

💲💲💲 Data Work ROI

Mikkel Dengsøe has admirable emoji game, and a timely and thoughtful piece on articulating the ROI of a data team’s work. We often talk about “customer facing data products” and “internal data analytics applications” as two entirely separate phenomena. Mikkel connects the dots in this post and reminds us of the different ways data roles on a team collaborate to create value:

The important takeaway for me is one of prioritization, especially for folks who sit closer to the systems side of the data jobs-to-be-done spectrum:

If you’re a Systems Person constantly evaluate how your work impacts downstream consumers (🖇), how many consumers you have (🎳) and how much time you spend (⌛️).

The implication of this is that an Analytics Engineer will get far greater ROI on their time from improving a data model used by 5 data scientists than from improving the workflow of only one. And a data engineer will get greater ROI on their time from improving the performance of the data platform, because this platform supports every other data team member and the improvement has a multiplicative effect downstream:

Remember that everyone plays a role.

Data Scientists and Data Analysts work faster if they have high quality data and good data models. Analytics [Engineers] and Data Engineers have multitudes of impact if their data models are used by many.
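
To make the prioritization concrete, here is a toy Python sketch. The formula (impact per consumer × number of consumers ÷ hours spent) is just one possible reading of the three emoji factors above, not a formula taken from Mikkel's post. The `WorkItem` class, its field names, and all the numbers are hypothetical and only there for illustration.

```python
from dataclasses import dataclass


@dataclass
class WorkItem:
    """A hypothetical piece of systems work to prioritize."""
    name: str
    impact_per_consumer: float  # assumed unit: hours saved per consumer (🖇)
    consumers: int              # number of downstream consumers (🎳)
    hours_spent: float          # time invested in the work (⌛️)


def roi(item: WorkItem) -> float:
    """Total downstream benefit divided by the hours invested."""
    if item.hours_spent <= 0:
        raise ValueError("hours_spent must be positive")
    return item.impact_per_consumer * item.consumers / item.hours_spent


# Same effort and same per-person benefit, different number of consumers.
shared_model = WorkItem("improve a shared data model", 2.0, 5, 10.0)
one_workflow = WorkItem("improve one person's workflow", 2.0, 1, 10.0)

# Rank candidate work from highest to lowest return on time.
ranked = sorted([one_workflow, shared_model], key=roi, reverse=True)
for item in ranked:
    print(f"{item.name}: {roi(item):.2f}")
```

With these made-up inputs, the shared data model comes out five times ahead simply because five people benefit from the same ten hours of work. Real impact is much harder to quantify, so treat this as a way to frame the trade-off rather than a scoring system.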

Reverse ETL, now with version control

Finally, Hightouch.io dropped a Git integration this week that works with all your favorite software repositories. It’s great to see more and more pieces of data workflows adopting software engineering best practices! Highlights that I’m particularly excited about:

describing data workflows as code,

consistency across local and browser-based development workflows, and

interoperability with an open source protocol (git).

💳💥💥💥💳💥💥💥

👋 Until next time!