I unknowingly built a (bad) Semantic Layer in 2018

If you have a runaway success by accident, does it still count?

👋 An occasional theme of the Roundup is authors bemoaning the fact that it’s difficult to share experiences from the field, but this is a story I can tell without getting into any trouble. I hope you find it interesting.

I saw a question in the dbt Community Slack this week, and it took me back:

I don’t think we ever got up to 300 columns, but we absolutely had a variant of this problem while maintaining reports for our customer-facing teams. Originally we approached this by building task-focused tables which contained “just the right combination of columns”1 for each use case. Although we aimed to be flexible and accurate, we were barely achieving either.

Ultimately, we realised we needed to switch to an entity-based approach, and enable users to build their own reports from a highly validated and governed set of building blocks. (I’m going to refer to them as entities in this story for convenience, even though at the time I had no idea that’s what we were doing.)

Looking back now, our approach happens to align with a lot of the thinking described in Tristan’s Coalesce keynote last year, and the entities introduced in the new Semantic Layer spec, albeit significantly less robust2.

You’re probably wondering how I got myself into this situation

In 2018, I was working at an EdTech company on internal systems and reporting. Our homegrown reporting setup was based on parameterised stored procedures running against the production database3. This had some… problems.

Parameterisation was a two-edged sword: each rep instantly got a report filtered to their territory, but that report definition was shared across everyone. If someone wanted a column added or removed, it had to be changed for everyone. Adding a column usually meant removing another, to stay inside the runtime limits imposed on us to avoid taking the site down. We wound up spending as much time negotiating which changes to make as actually making the changes.

All stored procs were entirely independent but had overlapping properties, so getting consistent data between them was a nightmare. Every few months we would roll up our sleeves and bring everything into alignment through force of will, but then a new project would start with a copy-paste of a base report and the cycle began anew.

When our customer success team asked for an extra column for something like “students enrolled on paid licences”, the sales team would immediately request “students enrolled on trial licences”. And then someone would add those two numbers together across the two reports, realise they didn’t add up to the total number of students, and we’d spend an afternoon trying to work out why. (Spoiler: inconsistent definitions).

Cut out of the loop

Since requests for change would take a long time to resolve and be a painful experience for all involved, some enterprising staff started finding workarounds. For example, they would export multiple existing reports to CSV, stick them together with VLOOKUPs4, and then work from that until the data became too stale to be useful.

It was also becoming more and more common that instead of requesting changes to a report, people would ask for a spreadsheet export directly from the database that they could pivot on their own. This allowed everyone to move faster in the short term (including us!), until someone else came and asked for that same raw export and we couldn’t find the query anymore.

I realised that instead of frantically churning out different variants of the same handful of queries, we needed to create one stable, consistent dataset and empower people to consume it as they needed to. You might think this is going to be where we discover dbt, but we’re a few years away from that.5

Instead, we found a JS-based pivot table library which could read from JSON files stored on S3, meaning it was time to spin up what can only be described as the world’s worst data lake, fed by a recurring process to calculate the latest data.

Switching to entities

We created four different data sources which reflected different concepts in our CRM:

Schools

Their departments

Calls/emails made to teachers

Revenue lines on deals

Each entity had its own grain, and since this all the data was precalculated and stored – instead of truly understanding joins – we had to limit what other information could be brought through. Dimensions like Location, Sales rep, Health rating could be exposed across the board, but to avoid fanouts and overcounting we had to restrict things like questions answered this year or numbers of purchasing/trialling students to the school and department entities.

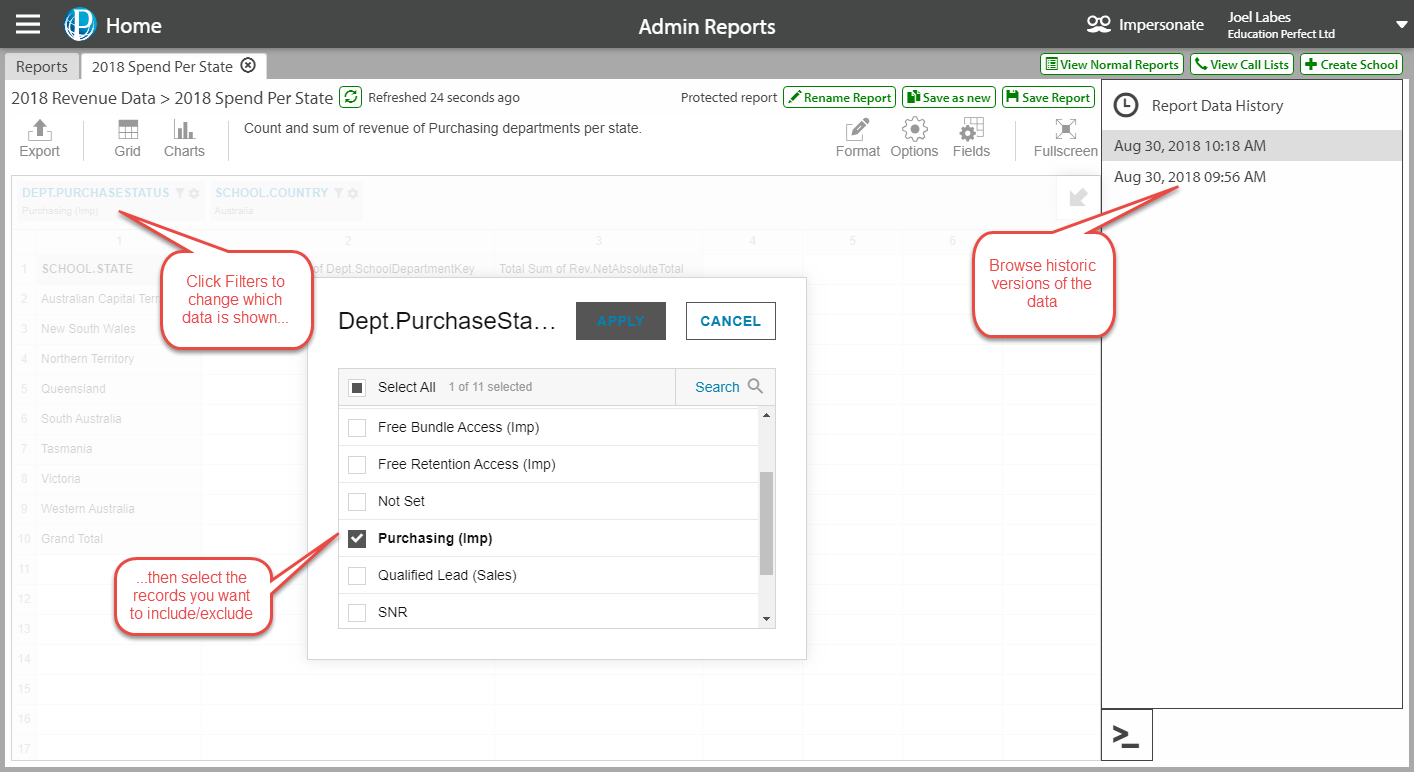

At launch, we created and published read-only foundational reports, but also enabled anyone to build on top of them and save their own copies. Since consumers were querying the files from S3, the main database caught a break, and we were able to let people browse old files to jump back in time and see the state of a school several months ago.

At the time, I described this change as splitting out our operational reports from our analytical reports. This was sort of true, although once people realised how much more flexibility and reliability the new system gave them, they started using it for their day to day operational work as well.

Looking back at it, the difference was not actually operational vs analytical, but that these reports were built with entities at their heart instead of a specific use case, so downstream users could build for many more use cases without our involvement6. Everyone understood what they were working with - the concepts mapped 1:1 with existing mental models, and they could audit the data themselves when it didn’t feel right.

I’m glad I’m not still using it

The setup wasn’t perfect. As described above, some datasets still needed to be exported and unified elsewhere if the join was too tricky to perform in advance. If we had tried to scale it out from a handful of tightly related entities to reflect more of the business, its rudimentary foundations would probably have collapsed under the load. And finally, in a classic “victims of our own success” story, people wanted this data everywhere else we exposed data, but since it was a bespoke setup it couldn’t be easily shared with other tools.

I’m proud of it7, but looking at what’s possible today, I don’t miss it.

From elsewhere on the web:

Julia Evans shares some tactics for writing in public, to “make the comments on my posts more informative and useful to me, and to try to minimize the number of annoying comments I get.”

Randy Au says we still build tools and should share them more. “Tools don’t need to be anything other than able to accomplish their job. The reality is that even the floppy plastic fork from lunch that you re-used to help dig and repot an office plant is a tool. The scrap of cardboard put under an uneven table leg is also a tool. Same goes for my simplistic colab notebook with more support text than code.”

Winnie made a video tour of her new Semantic Layer guide and makes a compelling case that how we think about our DAGs is going to change pretty soon. Worth your time and a big screen.

Yes, the scare quotes are foreshadowing.

Regardless, I demand my gold star for being ahead of the curve.

One sentence horror story challenge complete.

Even if we had found dbt, it would have made it easy to consistently build OBTs, but wouldn’t have helped at the consumption layer.

So much so that one of our board members could independently and successfully dive into the revenue data.

Especially the fact that multiple members of our finance team described it as “the highest ROI piece of software ever implemented”