Stories of and about data

It's true, spending time with other people's kids is the best.

I’m in the mood to revisit some of my old haunts this week…sources that I used to frequent but haven’t read as often lately. I’m so consistently impressed by the data visualizations Nathan Yau creates that I’m sad I haven’t spent as much time on Flowing Data as I should have.

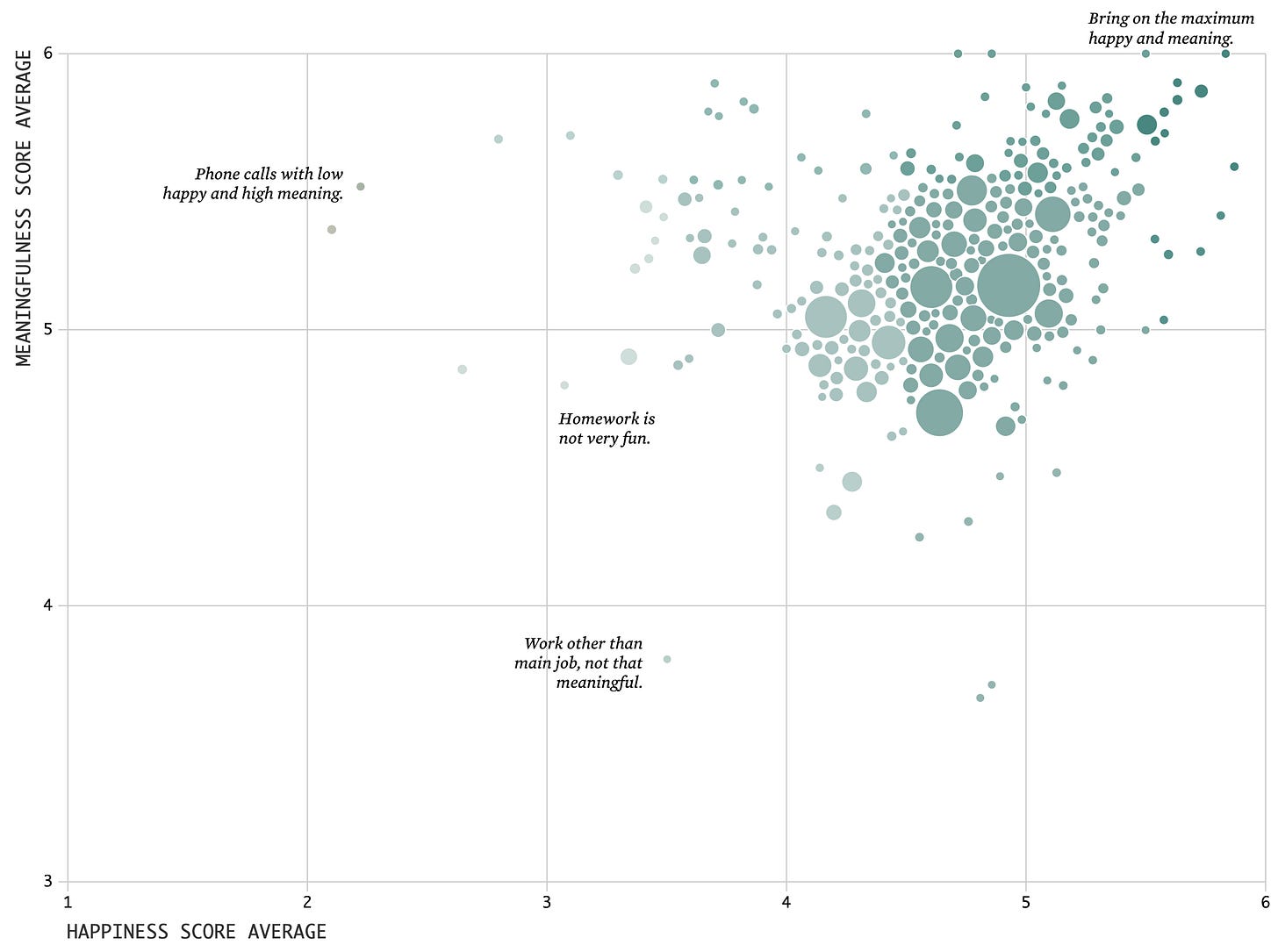

Here’s a fantastic one, entitled Happiness and Meaning in What You Do. It goes deeper on the time use survey data that went around the internet in many forms several months ago. It is simply a scatterplot of the happiness and meaningfulness of each activity.

What I really enjoyed about this was how many stories you could pick out the deeper you dug. For instance, here’s one: while many childcare activities ranked highly on both happiness and meaningfulness, it turns out that playing with other people’s children ranks more highly on both dimensions than playing with your own. I’m writing this from a plane and lol’ed when I noticed that (look in the top right).

What stories do you see?

—

Telling stories using data is when I personally feel at the top of my game as a data practitioner. Do I love to act as the analytics engineer and model business concepts in dbt? Absolutely. Do I love to act as the data engineer and figure out how to optimize operations on 10+ TB? That’s fun too. But nothing scratches that data itch for me as well as finding the story hidden in the data.

It’s why I love building spreadsheets that forecast the results of some particular business process. Having a spreadsheet that can reliably predict the future of some complex set of interactions is really magical when you see it play out in real time, and it’s fundamentally an act of storytelling. In building a model like this, you’re saying “Tell me a story about this complex messy thing. Ignore all of the non-essential details; what really matters here?”

Doing this well requires systems thinking that is so hard to teach. And it’s hard to talk about it, too, because all such models are highly proprietary. Which is why I’m really excited about this series from Equals:

Every company runs their business through a shortlist of models. Yet all of those models are private and every operator starts from scratch when building theirs.

We're excited to change that today. We're launching a new series to share the models built by the best operators in some of the most exciting businesses in technology, along with their stories.

We recently sat down with Sean Whitney of Craft Ventures, Figma’s 40th employee and second Strategic Finance hire, to learn how Figma built a Self Serve forecast model that consistently predicted ARR growth within 5%.

The really fantastic thing about this particular model (other than it being empirically successful) is that it shows a working example of how to integrate cohort behaviors into a forecast model. Often folks take a shortcut and don’t break out cohorts, which in my experience is fine for a back-of-the-napkin exercise but is just not sufficient to build something that can be relied upon.
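To make the cohort point concrete, here is a minimal sketch in Python. To be clear, this is not the Figma model: the `Cohort` class, the `forecast_arr` function, the retention curve, and every number below are hypothetical, made up purely for illustration. The idea is that each signup cohort ages along a retention curve, and the forecast for any month is the sum of what every cohort is still contributing at its current age.

```python
from dataclasses import dataclass


@dataclass
class Cohort:
    start_month: int     # month index in which the cohort signed up
    starting_arr: float  # ARR the cohort contributes in its first month


def retained_fraction(age: int, curve: list[float]) -> float:
    """Fraction of starting ARR retained at a given age in months.

    Assumption: once the curve runs out, retention stays flat at its last value.
    """
    return curve[min(age, len(curve) - 1)]


def forecast_arr(cohorts: list[Cohort], curve: list[float], horizon: int) -> list[float]:
    """Sum each cohort's retained ARR for every month in the horizon."""
    totals = []
    for month in range(horizon):
        total = 0.0
        for cohort in cohorts:
            age = month - cohort.start_month
            if age >= 0:  # cohorts that have not signed up yet contribute nothing
                total += cohort.starting_arr * retained_fraction(age, curve)
        totals.append(total)
    return totals


# Hypothetical inputs: three monthly cohorts of equal size and a made-up curve.
example_cohorts = [Cohort(0, 100.0), Cohort(1, 100.0), Cohort(2, 100.0)]
example_curve = [1.0, 0.9, 0.85]

print([round(x, 2) for x in forecast_arr(example_cohorts, example_curve, horizon=4)])
```

Even in this toy version, the total dips in the last month because no new cohort arrives while the older ones keep decaying. A single blended growth rate would likely hide that, which is roughly why the napkin shortcut stops being good enough.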

Looking forward to more of these!

—

Some stories are told with the pace of an action movie: one plot point after another, gripping because you keep wanting to know what’ll happen next. Other stories create an impression in the mind that needs to be examined over and over to figure out what to take from it. Have you ever held a book open on the last page while staring off into the distance for several minutes? These are some of the best stories, although they require more work to appreciate.

These are the kinds of stories that Stephen Bailey tells; when you start reading, you never know exactly where you’re going to end up. His most recent, The Modern Data Graph, is an epic, and it really resonated with me. I’m not going to try to do it justice by summarizing it here, but I do want to share why it resonated so deeply.

The big point: we should be focusing on the “modern data graph,” not the “modern data stack”.

Certainly, dbt has been closely identified with the modern data stack. This effort (modular, best-of-breed, interchangeable data systems) is very important because it frees practitioners to make choices about their tools instead of being captive to them.

But somewhere along the line, the popular narrative became about the tools themselves, the stack. And while this story is important, it’s not actually the more interesting one playing out in the industry right now. Stephen’s post does perhaps the best job of anything I’ve seen of explaining the interesting part.

I might say it a bit differently than him, though. Let me try my version, and I promise to keep it brief.

Because of the advances in data processing and software engineering tooling, we are now able to do a fundamentally new thing: to make organizational knowledge flows explicit rather than tacit. Previously, for literally all of history, organizations were emergent collective consciousnesses. No one person knew all of the details of how the collective acted, no one knew quite how to control it, and attempting to govern its behavior was more of an art than a science. This is because its core information processing capabilities were all locked up in individuals’ heads.

What we are doing when we build and test dbt models is much bigger than creating some tables in a warehouse. We are taking knowledge from our brains and encoding it in a way that other humans and computers can read and update. The resultant artifacts are strongly typed: they aren’t mish-mashes of procedural code, they are descriptions of specific real-world artifacts. And the native structure of this knowledge, including the knowledge of people and processes that are not (yet?) described in dbt, is the graph.

The effort that we and the entire industry are undertaking right now is to encode our knowledge into a well-understood, governable, maintainable graph. We are in the very early innings of this effort, and it is the most interesting story in the industry today.

—

Too much? Here’s something lighter¹: one of the most interesting stories I’ve seen told with data recently. What makes the data in this story interesting is that it’s based entirely on simulations that the reader creates on the fly.

Telling a story with your own data? Cool. Telling a story with a series of simulations that your reader can interact with? Even cooler.

—

Vicki Boykis tells a story about the state of modern software development. Here’s the heart of it:

Instead of working on the core of the code and focusing on the performance of a self-contained application, developers are now forced to act as some kind of monstrous manual management layer between hundreds of various APIs, puzzling together whether Flark 2.3.5 on a T5.enormous instance will work with a Kappa function that sends data from ElephantStore in the us-polar-north-1 region to the APIFunctionFactoryTerminal in us-polar-south-2.

This kind of software development pattern is inevitable in our current development landscape, where everything is libraries developing on top of libraries, all of which, in the cloud, need network configuration as big cloud vendor network topologies determine the shape of our applications.

Her personal example, building an ML application, illustrates the problem very effectively: reading her account of debugging one problem after the next feels like a slow descent into madness. While the capabilities of a single software engineer have undoubtedly grown dramatically, that engineer’s time is now spent very differently.

How are we to feel about that? These Hacker News commenters have a lot of thoughts. Vicki’s conclusion, that we need to “step back from the cloud insanity ledge a little bit,” may be true. The other option is that we need to go further. If you’re stuck in a transitional state, you can go either forwards or backwards, but you can’t stay still.

¹ This is a bad joke…the post is about the inevitability of extreme wealth inequality.