Winners and losers when you change a metric

Clowning around with data. Being very serious with data masking.

Kia ora! Joel here. For my first contribution to the Roundup, we’re doing it the old-fashioned way: no thinkpiece, just some links I think are fun. Let’s go!

The big change to NZ’s pop charts that’s infuriated two of our biggest artists

New Zealand’s music charts are changing how they calculate top performers in the streaming era. “Catalogue” albums (those older than 18 months) used to be allowed to stay in the top 40 charts until they dropped out. But unlike physical album sales, streams continue to accrue indefinitely, so the biggest names aren’t dropping out anymore:

Six60’s first two albums, for example, spent a combined 800 weeks on the [artists from New Zealand] charts – and its second album sat pretty at #1 in April, more than eight years after it was first released.

I always¹ enjoy seeing data problems leak out of their sandboxes. If you want a metric to identify “popular new music”, how many people are buying it is a pretty good one! When people stop doing that in favour of renting their music instead, it seems like counting streams should work as a drop-in replacement. And yet.

‘Everyone got sick and tired of the same old song being on top of the charts, week in, week out. People will complain that it’s been fiddled with, but it’s always been fiddled with,’ says Shepherd. ‘It’s not a product of nature, it’s a created thing.’

That last sentence is the important bit. If you do a good enough job of defining an elegant metric, it might seem like a natural law. But when the world moves on, the abstraction starts to leak.
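To make the “winners and losers” concrete, here’s a toy sketch of swapping one chart-eligibility rule for another. The album names, release dates and stream counts are invented, and the new rule (simply dropping anything 18 or more whole months old from the main chart) is an assumption for illustration, not the actual NZ methodology.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Album:
    title: str
    released: date
    weekly_streams: int  # hypothetical streams counted toward this week's chart


def months_since(released: date, chart_week: date) -> int:
    """Whole months between release and chart week (a partial month doesn't count)."""
    months = (chart_week.year - released.year) * 12 + (chart_week.month - released.month)
    if chart_week.day < released.day:
        months -= 1
    return months


def is_catalogue(album: Album, chart_week: date, cutoff_months: int = 18) -> bool:
    # Assumption: anything 18 or more whole months old counts as catalogue.
    return months_since(album.released, chart_week) >= cutoff_months


def top_n(albums: list, chart_week: date, n: int, exclude_catalogue: bool) -> list:
    # Same metric (weekly streams) either way; only the eligibility rule changes.
    eligible = [
        a for a in albums
        if not (exclude_catalogue and is_catalogue(a, chart_week))
    ]
    ranked = sorted(eligible, key=lambda a: a.weekly_streams, reverse=True)
    return [a.title for a in ranked[:n]]


# Invented data, purely for illustration.
albums = [
    Album("Veteran LP", date(2014, 4, 1), 9_000),
    Album("Fresh LP", date(2023, 1, 15), 7_000),
    Album("Other Veteran LP", date(2016, 6, 1), 6_500),
    Album("New Release", date(2023, 3, 1), 5_000),
    Album("Debut", date(2022, 11, 20), 4_000),
]
chart_week = date(2023, 4, 7)

old_chart = top_n(albums, chart_week, 3, exclude_catalogue=False)
new_chart = top_n(albums, chart_week, 3, exclude_catalogue=True)
winners = set(new_chart) - set(old_chart)
losers = set(old_chart) - set(new_chart)
print("winners:", sorted(winners))  # winners: ['Debut', 'New Release']
print("losers:", sorted(losers))    # losers: ['Other Veteran LP', 'Veteran LP']
```

Nothing about the underlying numbers moved; only the definition did, and that alone reshuffles who gets a spot.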

Data engagement through light clownery

I’ve really enjoyed Faith’s writing this year, and this post about having fun with your data is an absolute gem.

Another data team member was sifting through the mountains of data we have to our name and found an interesting fun fact. They posed it to the #data channel as a game show-style question and offered 1,000 points to whoever got the correct answer.

This garnered terrific engagement. Who doesn’t want to compete for fake points that you can then lord over your coworkers’ heads? Beyond that, it also revealed interesting assumptions that my coworkers made about our data.

Beyond inventing a fun new game (brb, off to petition our team to turn their Data Nuggets series into a gameshow), seeing how your colleagues think about the world through their answers helps you tailor your messaging to them.

How Dynamic Data Masking works

I spent some time this week working on our internal dbt project, making some updates to our DEI dashboard. Obviously, this data is very sensitive; not only should I not see it in its raw form, I really don’t want to! Fortunately, our data team had already configured dynamic data masking (shoutout to the dbt_snow_mask package!) so all the specifics were hidden from me and I could build in peace.

I didn’t know anything about how data masking worked when I started, but after digging into this explainer from the phData team I’m both enlightened and impressed.

In a nutshell, a masking policy is a fancy case statement that the query engine transparently swaps into a querier’s command at runtime, based on their current privileges.
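To see what that “fancy case statement” decides, here’s a minimal sketch of a policy as a plain Python function of the raw value and the querier’s current role. The role names and masking formats are hypothetical; in Snowflake the same decision lives in a SQL `CASE` expression inside the policy body, and the engine, not your code, applies it.

```python
# Hypothetical roles allowed to see the raw value.
AUTHORISED_ROLES = {"DEI_ANALYST", "CI_TRANSFORMER"}


def email_mask(value: str, current_role: str) -> str:
    """Toy masking policy: the same decision a SQL CASE expression would make,
    e.g. CASE WHEN CURRENT_ROLE() IN (...) THEN val ELSE <masked> END."""
    if current_role in AUTHORISED_ROLES:
        return value  # authorised: plain text
    if "@" in value:
        # partially masked: hide the local part, keep the domain
        return "*****@" + value.split("@", 1)[1]
    return "***MASKED***"  # fully masked


print(email_mask("joel@example.com", "DEI_ANALYST"))  # joel@example.com
print(email_mask("joel@example.com", "REPORTER"))     # *****@example.com
print(email_mask("not an email", "REPORTER"))         # ***MASKED***
```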

End users can query the data without knowing whether or not the column has a masking policy. For authorized users, query results return sensitive data in plain text, whereas sensitive data is masked, partially masked, or fully masked for unauthorized users.

The rewrite is performed in all places where the protected column is present in the query, such as in “projections”, “where” clauses, “join” predicates, “group by” statements, or “order by” statements.
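Applying the policy everywhere the column appears has a practical consequence: for an unauthorised user, filters, groupings and sort orders all operate on the masked value, so none of them leak the raw data. The toy model below is plain Python over an in-memory list (not how Snowflake actually executes queries), and the table, column, policy and role names are all hypothetical. It routes every read of the protected column through the policy, standing in for the engine’s rewrite.

```python
from collections import Counter

AUTHORISED_ROLES = {"DEI_ANALYST"}  # hypothetical

ROWS = [
    {"id": 1, "team": "Data"},
    {"id": 2, "team": "Sales"},
    {"id": 3, "team": "Data"},
]


def mask_team(value: str, role: str) -> str:
    # Hypothetical policy: authorised roles see the value, everyone else a constant.
    return value if role in AUTHORISED_ROLES else "***"


def col(row: dict, name: str, role: str):
    """Every read of the protected column goes through the policy, mimicking the
    engine swapping it into projections, WHERE, GROUP BY and ORDER BY alike."""
    value = row[name]
    return mask_team(value, role) if name == "team" else value


def select_where(role: str, team: str) -> list:
    # SELECT id, team FROM rows WHERE team = :team
    return [(r["id"], col(r, "team", role)) for r in ROWS if col(r, "team", role) == team]


def group_count(role: str) -> dict:
    # SELECT team, COUNT(*) FROM rows GROUP BY team
    return dict(Counter(col(r, "team", role) for r in ROWS))


def order_by_team(role: str) -> list:
    # SELECT id FROM rows ORDER BY team, id
    return [r["id"] for r in sorted(ROWS, key=lambda r: (col(r, "team", role), r["id"]))]


print(select_where("REPORTER", "Data"))  # [] because the filter sees "***", not "Data"
print(group_count("REPORTER"))           # {'***': 3}
```

An unauthorised user can’t even infer the raw values indirectly: their `WHERE team = 'Data'` matches nothing, and sorting by the masked column falls back to the tie-breaker.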

My practical takeaway: without a solid CI setup, this would have been a nightmare to work on (our CI job has permission to read the unmasked data, so it could correctly generate the rollup tables where I couldn’t). Even with the overhead of committing changes and waiting for them to build, I got my test results and table previews within a few minutes every time, and got to merge with confidence yesterday.

Behind the scenes at Lego Masters NZ

Officially this has nothing to do with data, but I bet there’s an overlap between Lego enthusiasts and DAG enthusiasts. “After two seasons of intense brick sorting, Jackson reckons she can identify Lego simply by touch, a skill she compares to touch typing.”

Building a Kimball dimensional model in dbt

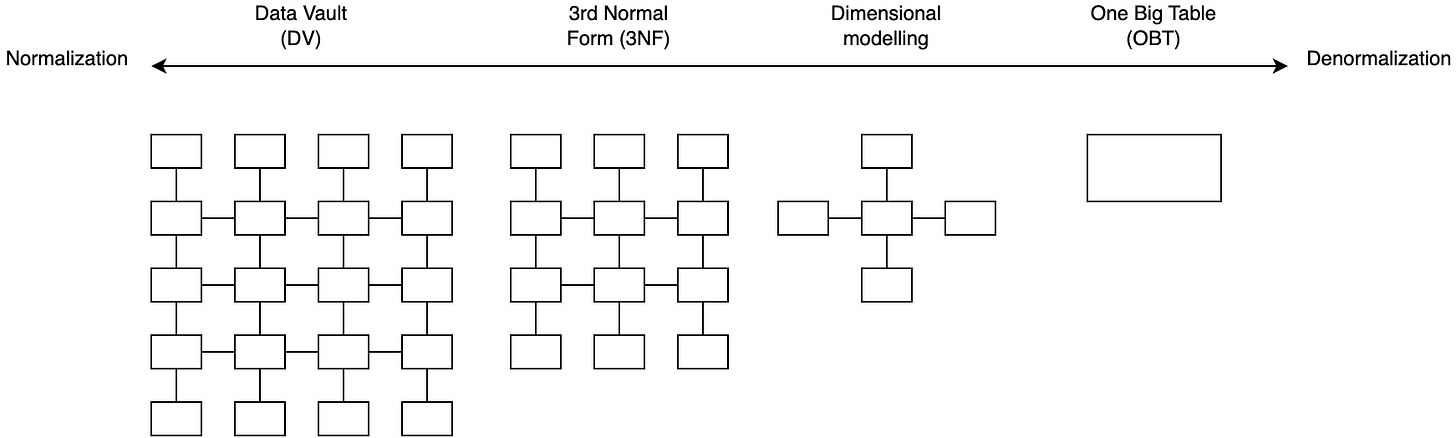

Very much to do with data: a fantastically practical writeup by Jonathan Neo. Add this to the list of documents I wish had existed at the start of my data career: the image at the top of the page did more to crystallise the different modelling philosophies for me than anything else I’ve seen.

Better still: it comes with a demo project you can work through as you follow the guide.

What’s the point of tech conferences?

I’m trying so hard to not just quote the whole post. Did you know “obliquity” was a word? Neither did I until now. Want to see it used in a sentence? That’s one of the bits I had to cut - just click through.

One of the key benefits Chelsea calls out:

Conferences become worth it from the conversations that happen between folks who otherwise might not have met, that endure beyond the event itself.

As someone who is much more reserved in person than online, this seems more than a lil scary! But as someone who spent all of Coalesce 2020 memeposting and making new friends in Slack, it checks out - chalk up another win for virtual conferences.

Whether you’re in person, or online, or opt out altogether, ✨community✨ has your back:

It turns out that I (and you) have almost certainly benefited from cons we’ve never been to and never heard of. Someone has helped us in some way because they met someone else at one of those cons.

Since we’re on the topic: remember to register for Coalesce! Third week of October, online or in-person.

¹ Except when they’re my data problems.